Accelerating Transition to AI-Driven Healthcare Enterprise

The Customer takes advantage of its new MLOps Platform to accelerate, streamline, and scale the adoption of AI/ML use cases in the Consumer Health, Medical Device, and Pharmaceutical domains

Our client is a global healthcare leader based in the United States, operating in the Consumer Health, Medical Device, and Pharmaceutical domains. Established in the late 1800s, the company employs over 130,000 people worldwide. The company is well known in the consumer health market, with an ongoing commitment to innovation, social responsibility, and improving global health.

Challenge

Our client was looking to accelerate and scale the adoption of AI/ML across its organization by adopting and implementing a modern MLOps platform. Provectus, an AI-consulting company with competencies in Machine Learning and Data & Analytics, was chosen as a strategic partner to assist the client’s engineering team in building and scaling the platform, and in implementing and productionalizing a selection of AI/ML applications.

Solution

Provectus used its MLOps Platform as a foundation for the client’s solution. An AI/ML application was selected, implemented, and productionalized on the MLOps Platform. The AI/ML project template, which included components for experimentation, ML model training and inference, and CI/CD, was prepared as a blueprint for future projects. Provectus provided comprehensive documentation for the client to onboard its data scientists and ML engineers.

Outcome

Provectus implemented a cloud-native MLOps platform on AWS that enabled the client’s data scientists and ML engineers to iterate quickly and reliably, from concept to production deployment of AI/ML applications. By using its new MLOps Platform, the client was able to optimize and streamline the development and deployment of AI/ML, organization-wide and at scale.

A new, cloud-native MLOps Platform on AWS

Quick and easy onboarding of Data Scientists and ML Engineers

Enhanced and streamlined ML lifecycle

Scaling Enterprise AI/ML for Business Value: From Data to Insights

Our client is a diversified global company operating in the healthcare sector, with a significant global presence. The organization’s operations consist of three primary segments: pharmaceuticals, medical devices, and consumer health products.

Like many enterprises, the company generates substantial amounts of data from various sources, including customer interactions, sales transactions, social media activity, and product usage. AI/ML applications can transform this data into actionable insights. However, without a robust Machine Learning Operations (MLOps) platform, the organization would struggle to scale and manage its AI/ML workflows effectively, resulting in inefficiencies, increased costs, and slower time-to-market for new products.

The client’s data scientists and ML engineers were looking for ways to simplify the deployment of AI/ML into production environments, particularly when using MLOps practices and the Amazon SageMaker suite of services.

The client was transitioning from legacy infrastructure, but its engineers could not discover and access unified, integrated workloads quickly and efficiently enough to meet the company’s vision for AI transformation. Although AI/ML projects already existed, the client did not have a standardized platform for deploying AI/ML applications to production. Its first goal was to simplify learning and onboarding for ML engineers while reducing the involvement of DevOps professionals.

By standardizing its AI/ML toolkit and introducing MLOps best practices on Amazon SageMaker, the client’s ML engineering team wanted to:

- Improve productivity

- Cut overhead and maintenance costs

- Increase end user satisfaction

Provectus, an AWS Premier Consulting Partner, joined forces with the client’s APAC consumer health products subdivision in 2021. Both teams worked cohesively to achieve the goals outlined in accordance with the client’s broader AI transformation vision.

MLOps Implementation: From Specific AI Use Cases to an At-Scale Platform Solution

The Provectus team began the AI transformation by implementing an MLOps platform and developing an AI/ML solution for the client’s next purchase prediction use case. This approach enabled us to showcase the platform’s features and benefits in a real-world scenario.

Provectus agreed to build an MLOps platform and deliver an AI/ML application, and to create an AI/ML project template that could be easily deployed on the MLOps platform and serve as a foundation for future AI/ML solutions.

Amazon SageMaker offers a comprehensive suite of services, including Amazon SageMaker Projects, which was utilized in the client’s MLOps project.

Amazon SageMaker Projects is an abstraction that helps to organize and standardize AI/ML projects, from conducting experiments to production-ready model deployment in a single data science-oriented IDE — Amazon SageMaker Studio. It provides a central location to manage all resources needed for AI/ML projects, including source code, experiments, pipelines, model registry, and endpoints. With SageMaker Projects, a custom template can be used to set up standard ML infrastructure that incorporates best practices. It can then be shared and re-used across multiple use cases, and integrated into the client’s existing tools. SageMaker Projects also helps to streamline the lifecycle of ML projects and enhance collaboration across teams.

From a technical standpoint, the Provectus solution consists of two main components:

- A SageMaker Project infrastructure template

- An AI/ML project template (seed code)

The SageMaker Project infrastructure template is a blueprint for every new use case to be designed and built on Amazon SageMaker. It includes the required infrastructure as code (IaC), the seed code, and the CI/CD configuration.

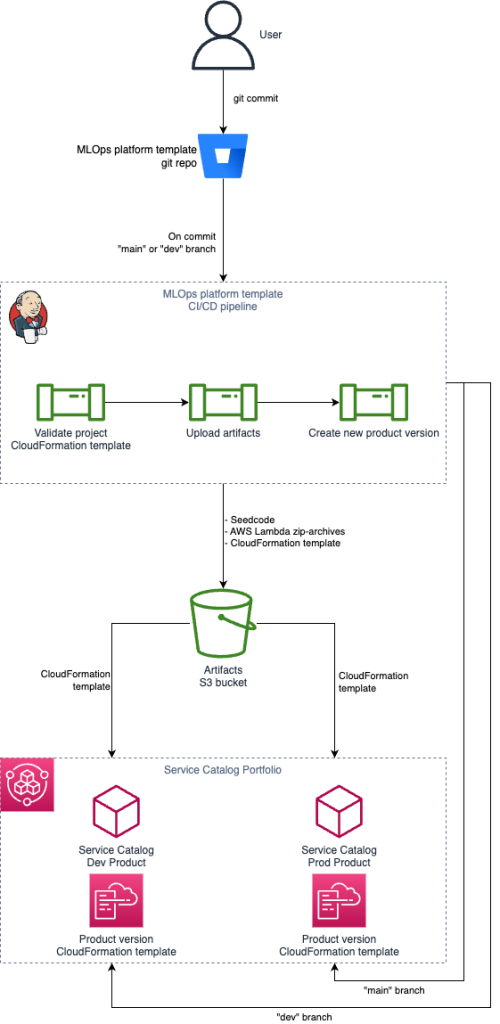

Certain service instances are predefined to ensure an operational workflow. These include a template source code repository in Bitbucket, a Jenkins CI/CD pipeline for the repository, and an Amazon S3 bucket for storing artifacts (seed code, source code for AWS Lambda functions, and CloudFormation templates). Additionally, a Service Catalog portfolio includes two products (one each for the development and production environment), along with multiple product versions.

The objective at this stage was to provide the SageMaker Project template to end users, enabling them to easily create new SageMaker Projects. Upon each commit to the ‘main’ or ‘dev’ branches, a Jenkins pipeline is triggered to perform several actions:

- Validate the CloudFormation template

- Upload necessary artifacts to the Amazon S3 bucket

- Create a new version of the Service Catalog product

If a commit originates from the ‘main’ branch, a new production version is created; for other branches, a new development version is created.
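The three pipeline actions and the branch rule above can be sketched in Python. This is a minimal illustration, not the client's actual Jenkins job: the function names, the S3 key layout, the version naming scheme, and the `product_ids` mapping are all assumptions. The clients are passed in and are expected to behave like the boto3 `cloudformation`, `s3`, and `servicecatalog` clients.

```python
def target_environment(branch: str) -> str:
    """Apply the branch rule from the text: 'main' is production, anything else is development."""
    return "production" if branch == "main" else "development"


def publish_template(*, branch, commit_sha, template_body, cfn, s3, catalog, bucket, product_ids):
    """Validate a template, upload it, and register a new Service Catalog product version.

    product_ids is a hypothetical mapping such as
    {"production": "<product id>", "development": "<product id>"}.
    """
    env = target_environment(branch)

    # 1. Validate the CloudFormation template (raises on a malformed template).
    cfn.validate_template(TemplateBody=template_body)

    # 2. Upload the artifact to the S3 bucket (the key layout is an assumption).
    key = f"templates/{env}/{commit_sha}/template.yaml"
    s3.put_object(Bucket=bucket, Key=key, Body=template_body.encode("utf-8"))

    # 3. Create a new version (provisioning artifact) of the matching product.
    catalog.create_provisioning_artifact(
        ProductId=product_ids[env],
        Parameters={
            "Name": f"{env}-{commit_sha[:7]}",
            "Type": "CLOUD_FORMATION_TEMPLATE",
            "Info": {"LoadTemplateFromURL": f"https://{bucket}.s3.amazonaws.com/{key}"},
        },
        IdempotencyToken=commit_sha,
    )
    return env, key
```

Passing the clients as arguments keeps the branch logic testable without AWS credentials; in a real job they would likely be created with `boto3.client(...)`.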

Another crucial component of the platform is the seed code. Acting as an example project, the seed code illustrates how a specific AI/ML application can be implemented and onboarded onto the MLOps platform.

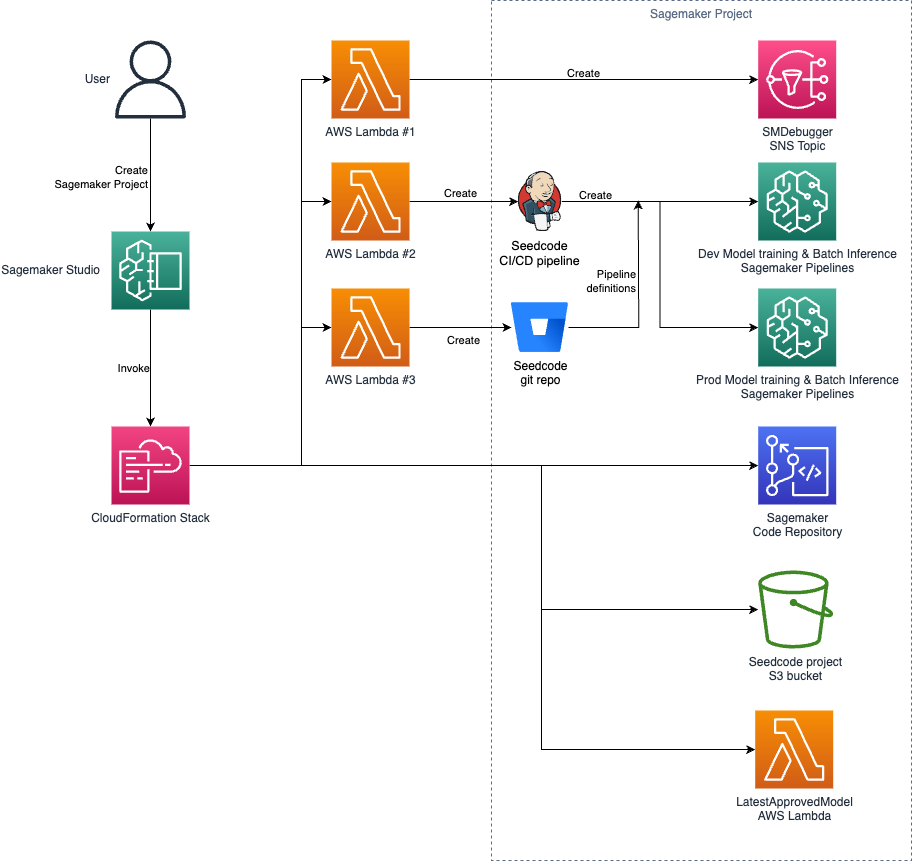

When an end user initiates a blank SageMaker Project using the seed code as a starting point for implementation of an AI/ML-based project, a CloudFormation stack is created based on the CloudFormation template, and all related infrastructure units are provisioned. Specifically, a Bitbucket repository is created and the seed code is committed, triggering a Jenkins CI/CD pipeline. This pipeline then establishes the Amazon SageMaker training and batch inference pipelines. Following this setup, the seed code is ready for use. Understandably, the default seed code implementation may not cover all AI use cases, and modifications may be necessary.
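A project like this can be created from SageMaker Studio or programmatically. The sketch below shows the programmatic route through the SageMaker `CreateProject` API, assuming a boto3-style client is passed in. The source does not say which route the client's team used, and the helper names and the example parameter are hypothetical.

```python
def build_project_request(project_name, product_id, artifact_id, parameters=None):
    """Build the keyword arguments for SageMaker's create_project call.

    product_id and artifact_id identify the Service Catalog product and the
    product version that hold the project template.
    """
    details = {"ProductId": product_id, "ProvisioningArtifactId": artifact_id}
    if parameters:
        # Template parameters are passed as a list of Key/Value pairs.
        details["ProvisioningParameters"] = [
            {"Key": key, "Value": value} for key, value in sorted(parameters.items())
        ]
    return {"ProjectName": project_name, "ServiceCatalogProvisioningDetails": details}


def create_project(sagemaker_client, project_name, product_id, artifact_id, parameters=None):
    """Start provisioning; the CloudFormation stack is created behind this call."""
    request = build_project_request(project_name, product_id, artifact_id, parameters)
    return sagemaker_client.create_project(**request)
```

Everything after this call (repository creation, the seed code commit, and the Jenkins run) is driven by the template itself, not by the caller.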

The client provided a single AWS account, which made proper separation of development, test, and production environments impossible. To work within this constraint, Provectus chose a per-Git-branch environment strategy: the “main” branch contained the production environment code, while all other branches served as development or test environments. This strategy applied to both the platform templates and the seed code.
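One way to keep branch-based environments apart inside a single account is to derive resource names from the branch. The sketch below illustrates that idea only; the naming scheme, the `prod` suffix, and both function names are assumptions, not details taken from the delivered platform.

```python
import re


def environment_for_branch(branch: str) -> str:
    """Return an environment label: 'prod' for main, a sanitized branch name otherwise."""
    if branch == "main":
        return "prod"
    # Lowercase the branch and collapse anything outside a-z and 0-9 into single hyphens.
    slug = re.sub(r"[^a-z0-9]+", "-", branch.lower()).strip("-")
    if not slug or slug == "prod":
        # Guard against a non-main branch colliding with the production label.
        raise ValueError(f"branch {branch!r} cannot be mapped to an environment")
    return slug


def resource_name(base: str, branch: str) -> str:
    """Suffix a resource name so that environments sharing one account do not collide."""
    return f"{base}-{environment_for_branch(branch)}"
```

For example, `resource_name("training-pipeline", "feature/Next_Purchase")` yields `training-pipeline-feature-next-purchase`, while the same call with `"main"` yields `training-pipeline-prod`.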

Another limitation involved a prohibition on using custom Docker images for data preprocessing and model training in Amazon SageMaker pipelines. Provectus successfully mitigated this problem by utilizing prebuilt SageMaker ML Docker images for Scikit-Learn and XGBoost.

As an integral part of the project, Provectus produced comprehensive documentation that encompassed descriptions of the constructed MLOps platform and seed code, as well as instructions for their deployment and maintenance. This documentation is readily accessible to the client’s Data Scientists and ML Engineers.

Enabling At-Scale Productionalization of AI Use Cases on MLOps Platform

With assistance from Provectus, the client was able to start using their new MLOps platform in a matter of months, to optimize and streamline the operationalization of AI/ML projects.

The MLOps platform and accompanying templates offered the client’s ML engineering team a convenient foundation for initiating the productionalization of new AI/ML projects and onboarding existing AI initiatives.

The introduction of these innovations significantly shortened the time to market for the client’s AI/ML projects:

- The amount of manual work required to set up infrastructure for AI/ML projects was significantly reduced or nearly eliminated.

- The costs of Proof of Concept development were reduced by minimizing the need for additional personnel such as software and DevOps engineers.

- The infrastructure approval process for AI/ML project development was expedited due to the standardization provided by the MLOps platform.

- The seed code provided a robust starting point for all subsequent AI applications for the client’s ML Engineers.

The comprehensive documentation of the MLOps platform and of seed code implementation and deployment, together with the AI/ML project onboarding guides prepared by Provectus, significantly lowered the barrier to understanding existing solutions and developing new AI/ML solutions and projects.

These deliverables from Provectus have empowered the client to develop and deploy future AI/ML applications more rapidly, efficiently, and at a larger scale. The delivered solution facilitates data-driven decision-making, leading to greater business success and a competitive advantage in the market.

Moving Forward

- Learn more about our MLOps Platform and ML Infrastructure

- Explore more customer success stories covering MLOps: Appen, GoCheck Kids, VTS, Lane Health

- Apply for the Machine Learning Infrastructure Acceleration Program to get started
