December 12, 2024

Provectus at AWS re:Invent 2024: Powering Business Transformation with Generative AI

Author:

Provectus, AI-first consultancy and solutions provider

AWS re:Invent 2024 brought together over 60,000 attendees in Las Vegas, and 400,000 virtual participants from around the globe. With 1,900 sessions and 3,500 speakers, this year’s event focused on the convergence of Big Data, Analytics, Artificial Intelligence (AI), Machine Learning (ML), and Generative AI (GenAI) in the Cloud — showcasing how these technologies are transforming industries and driving innovation for businesses.

As an AI-first consulting firm and AWS Premier Consulting Partner, Provectus was excited to attend re:Invent 2024. This year, we focused on learning from the brightest minds in the industry, building connections, and exploring the latest trends shaping the future of technology.

In today’s era of disruption, Provectus is committed to helping businesses stay ahead. From advanced GenAI solutions to unified frontier systems that merge AI/ML/GenAI, Data, and Analytics, we are excited to bring the transformative power of these innovations, already available on AWS, to our customers.

Below is a recap of Provectus’ learnings at AWS re:Invent, including the keynotes and sessions we attended, and our insights into the latest industry trends and announcements from AWS.

Overview of re:Invent ’24

AWS re:Invent is one of the world’s premier technology events, bringing together innovators, creators, and learners to fuel creativity, ignite new projects, and scale cutting-edge solutions.

This year, AWS re:Invent 2024 was touted as the largest event in its 12-year history, and the driving force behind its monumental growth is generative AI. Competition among tech giants and startups to deliver enterprise-grade GenAI tools has intensified, as focus shifts to this transformative technology.

However, the 2024 conference was not just about GenAI. AWS strategically refocused the conversation to spark a deeper exploration of the convergence between Data, AI/ML/GenAI, and Analytics. This convergence has the potential to enable businesses of all sizes to harness frontier solutions that can drive innovation and deliver value at unprecedented scale and speed.

This shift was evident throughout the event, with keynotes, leadership sessions, and deep-dive sessions all highlighting how these technologies intersect, and the immense potential they offer to businesses looking to stay ahead of the curve.

As always, keynotes were the cornerstone of re:Invent, featuring five stellar presenters:

- Keynote with Peter DeSantis

- Keynote with Matt Garman

- Keynote with Dr. Swami Sivasubramanian

- Keynote with Ruba Borno

- Keynote with Dr. Werner Vogels

We highly recommend watching all five keynotes, but if your time is limited, make sure to catch the keynotes by Matt Garman, Dr. Swami Sivasubramanian, and Dr. Werner Vogels. Here is why:

- In his keynote, AWS CEO Matt Garman discussed how AWS is driving innovation across every aspect of the world’s leading cloud, at the intersection of Data, AI/ML/GenAI, and Analytics. The key takeaway is that it is no longer enough to build AI models that perform impressive tasks; moving practical solutions to production cost-efficiently and at scale is now crucial. To address this, AWS is updating its suite of services and releasing new tools, features, and foundation models (over 100 models in total). Its strategy is to offer users more choice, as there is no one-size-fits-all model (or tool) for every task.

- Dr. Swami Sivasubramanian continued to explore this convergence. He emphasized that as disruption becomes the new normal, AWS’ goal is to equip its partners and customers with the full suite of services needed to support the entire AI use case lifecycle: not just development and deployment, but also ideation, maintenance, and future adjustments. He reinforced this message with updates for Amazon SageMaker, Amazon Bedrock, Amazon Bedrock Knowledge Bases, Amazon Kendra, Amazon Q Developer, and more.

- In his keynote, Dr. Werner Vogels reflected on his 20-year career at AWS, discussing how the company has consistently innovated to address complex customer challenges. He acknowledged that complexity is inevitable as systems grow, but it often signals added functionality. Vogels shared six key lessons on managing complexity, or “simplexity,” which he has learned throughout his time at Amazon.

Aligning with the event’s central theme — the convergence of Data, AI/ML, GenAI, and Analytics — AWS introduced several new services and announced major enhancements to its existing offerings.

Next Generation of Chips and Instances

To provide a robust hardware foundation for Data, AI, and Analytics workloads, Matt Garman announced new Amazon EC2 instances powered by Nvidia’s latest Blackwell GPUs and AWS’ Trainium2 chips. The three new EC2 offerings are:

- P6 Instances: Featuring Nvidia Blackwell GPUs, these will launch in 2025, offering up to 2.5x faster compute compared to current GPUs.

- Trn2 Instances: Now generally available, they provide 30–40% better price performance than current GPU-based instances, with 16 Trainium2 chips connected via NeuronLink, delivering 20.8 petaflops.

- Trn2 UltraServers: Comprising four Trn2 instances, these include 64 Trainium2 chips and deliver up to 83.2 FP8 petaflops.
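The UltraServer figures follow directly from the per-instance numbers in the list above. A minimal sketch of that arithmetic, assuming (as the text states) that an UltraServer is four Trn2 instances and that peak throughput adds up linearly; the class and function names are illustrative only:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trn2Instance:
    """Per-instance figures quoted in the list above."""
    chips: int = 16           # Trainium2 chips per Trn2 instance
    petaflops: float = 20.8   # peak compute per Trn2 instance


def ultraserver_totals(instances: int = 4, node: Trn2Instance = Trn2Instance()) -> tuple[int, float]:
    """Aggregate chip count and peak petaflops, assuming linear scaling."""
    return instances * node.chips, round(instances * node.petaflops, 1)


chips, petaflops = ultraserver_totals()
print(f"{chips} chips, {petaflops} petaflops")
```

Both totals match the quoted UltraServer specification: 64 chips and 83.2 petaflops. These are peak figures; sustained throughput on a real workload will depend on the model and the interconnect.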

Garman unveiled Project Rainier, a collaboration with Anthropic to build a massive cluster of Trainium2 UltraServers, featuring hundreds of thousands of Trainium chips connected by high-speed, petabit-scale networking.

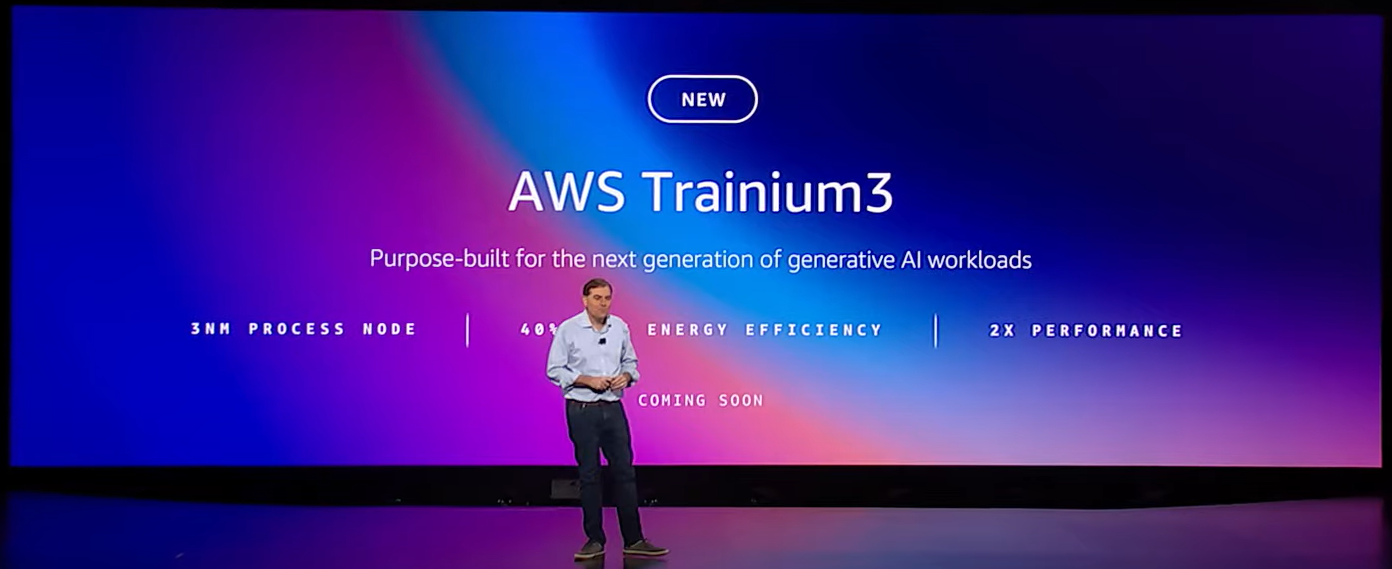

Most notably, Garman announced Trainium3. This next-generation AI chip is designed to enable customers to build larger GenAI models more quickly and deliver superior real-time performance. Trainium3 UltraServers are expected to offer four times the performance of Trn2 UltraServers. The first Trainium3-based instances are expected in late 2025.

New Services for Data, Storage, Management, and Governance

The evolution of Amazon S3 continues.

- Amazon S3 Tables: Optimized for tabular data in the Apache Iceberg format, Amazon S3 Tables deliver up to 3x faster query performance and up to 10x more transactions per second compared to self-managed Iceberg tables. Fully managed, they integrate seamlessly with tools like Amazon Athena, Amazon EMR, and Apache Spark for efficient and scalable data queries.

- Amazon S3 Metadata: Available in preview, Amazon S3 Metadata enables automatic metadata generation for Amazon S3 objects, stored in fully managed Apache Iceberg tables. It allows customers to efficiently query metadata using Iceberg-compatible tools, simplifying the process of locating objects at any scale. It enhances analytics, data processing, and AI training workflows by making relevant data easier to find.

- Amazon Aurora DSQL: A serverless distributed SQL database, Amazon Aurora DSQL eliminates infrastructure management, scales without sharding, and is PostgreSQL-compatible for seamless application development. Ideal for high-availability workloads, it simplifies operations with easy setup and maintenance-free management.

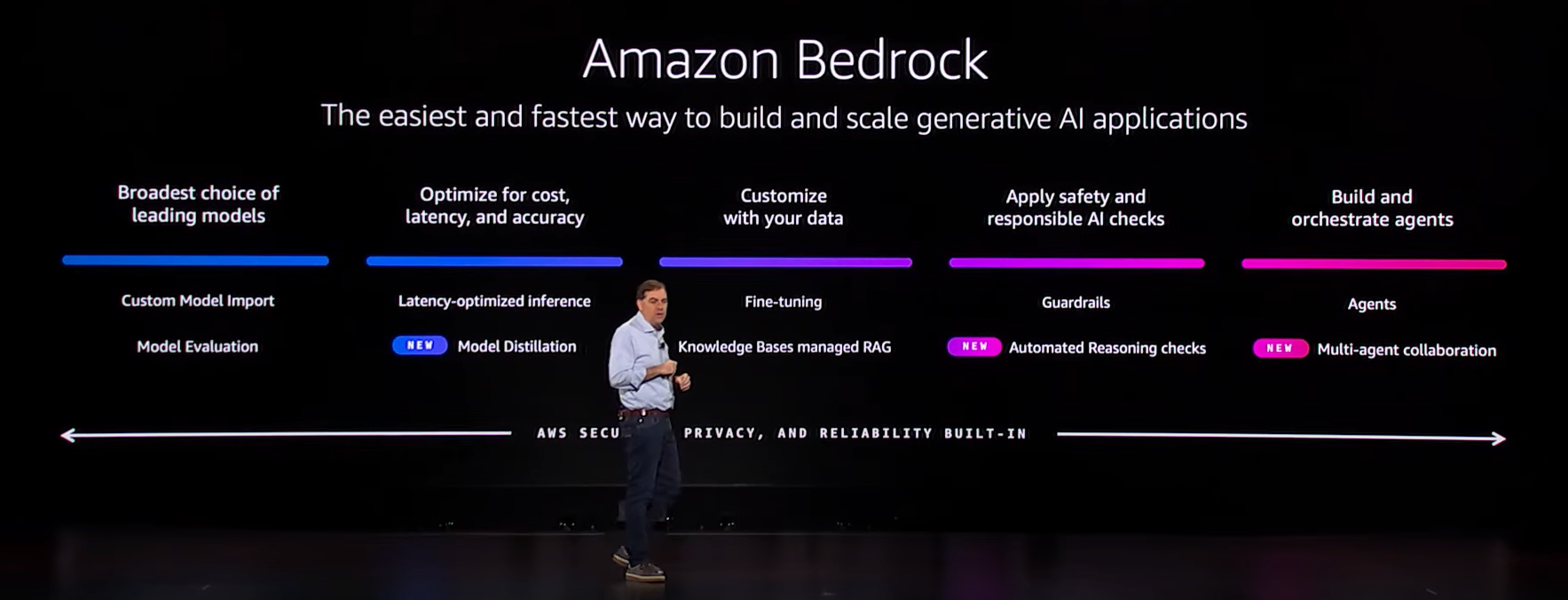

Amazon Bedrock: New Features and Enhancements

AWS envisions generative AI becoming an integral part of every application in the future and is focused on making this transformative technology accessible to innovators.

To support this vision, AWS has introduced numerous updates and enhancements to Amazon Bedrock, a fully managed service that simplifies building GenAI applications. Bedrock provides access to high-performing foundation models (FMs) from leading AI companies, including AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon. Upcoming additions, such as models from Luma and Poolside, further expand its offerings.

Amazon Bedrock has seen remarkable growth in 2024, achieving 5x expansion and attracting tens of thousands of customers. This growth is fueled by its diverse selection of foundation models (FMs), offering unmatched flexibility for generative AI applications.

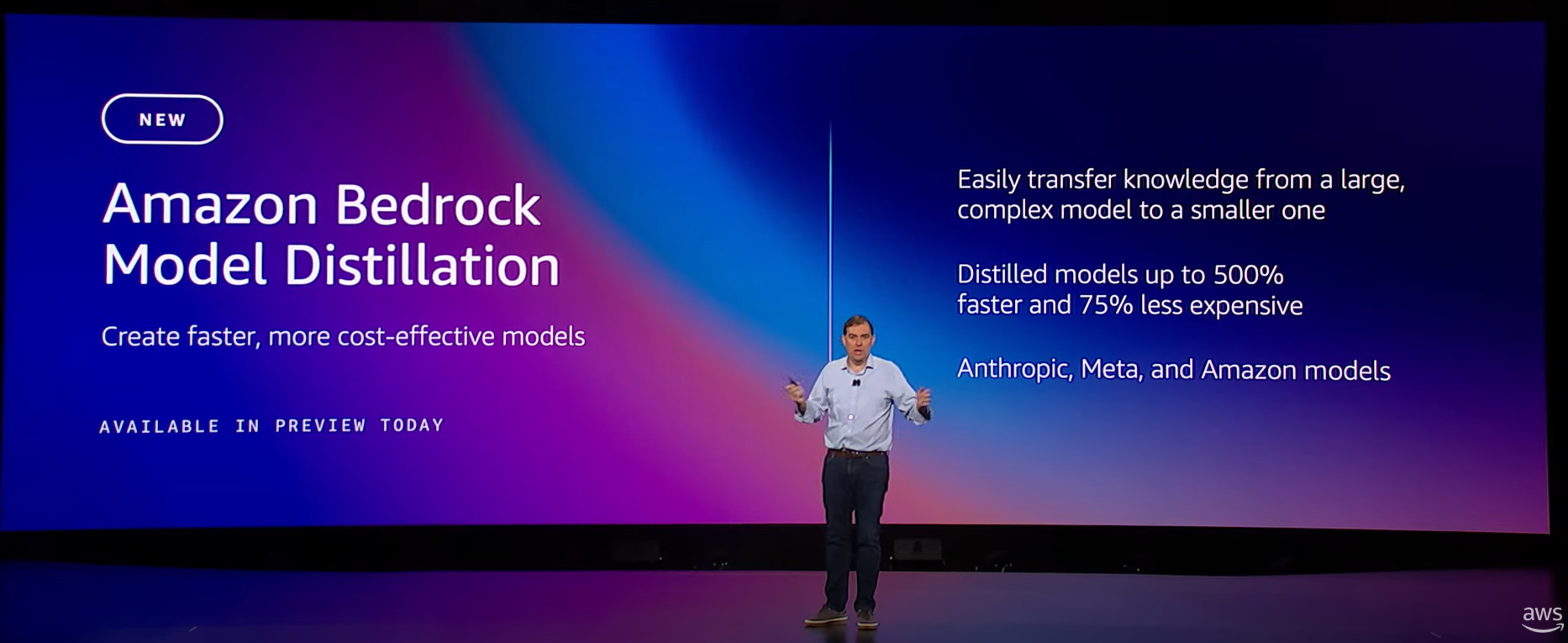

However, choosing the right model remains challenging. AWS has introduced Amazon Bedrock Model Distillation, now available in preview. This feature automates the process of creating smaller, cost-efficient models tailored to specific use cases, achieving up to five times faster performance and 75% cost savings with minimal accuracy loss. By leveraging enterprise data and Retrieval Augmented Generation (RAG), Bedrock empowers businesses to scale GenAI applications efficiently, focusing on production-grade performance and real-world value.

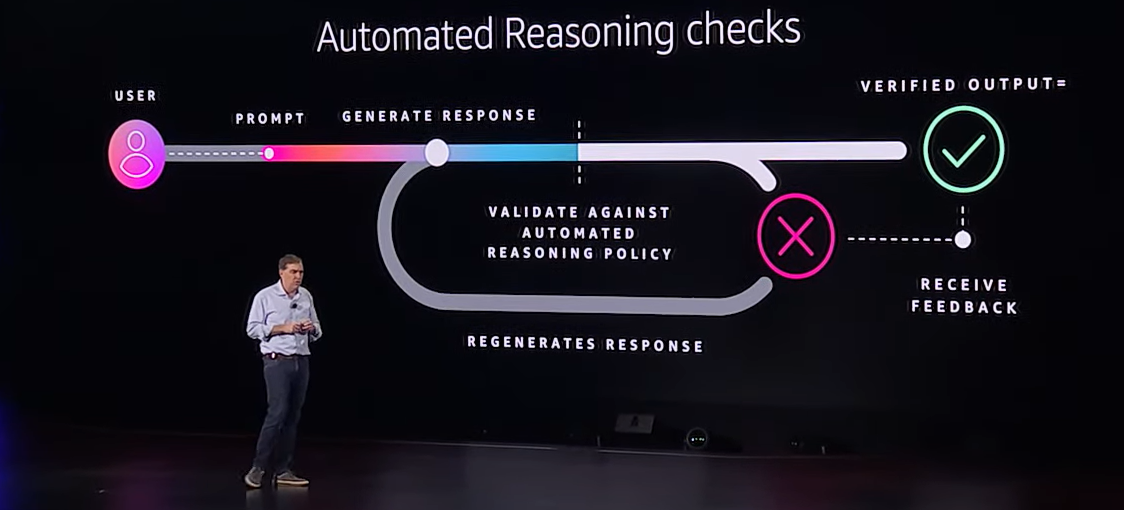

To address AI hallucinations and output errors, AWS has introduced Amazon Bedrock Automated Reasoning checks (in preview) as part of the Amazon Bedrock Guardrails suite.

These checks use mathematical, logic-based verification to validate large language model (LLM) responses, helping detect and reduce factual inaccuracies. Amazon Bedrock Guardrails also supports safeguards like content filtering, PII redaction, and contextual grounding, helping businesses enhance the safety and reliability of their GenAI applications.

Complex, multi-step tasks often require multiple specialized agents, and coordinating them can be technically challenging. AWS has introduced multi-agent collaboration for Amazon Bedrock (preview), a fully managed capability that streamlines the management of multiple GenAI agents working together. A supervisor agent orchestrates the process by delegating tasks to specialized agents and consolidating their outputs into a final response.

This capability eliminates the need for manual management of agent orchestration, session handling, and memory processes, enabling developers to focus on outcomes. Internal benchmarks reveal significant improvements in task success rates, accuracy, and productivity compared to single-agent systems.
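The supervisor pattern described above can be illustrated in plain Python. This is a conceptual sketch only: it does not call the Amazon Bedrock API, and `Supervisor`, `Agent`, and the two toy specialists are hypothetical names invented for illustration. In the managed service the delegation plan would come from a model; here the caller supplies it.

```python
from typing import Callable, Dict, List, Tuple

# A "specialist agent" is modeled as a plain function from a task to a result.
Agent = Callable[[str], str]


def billing_agent(task: str) -> str:
    return f"[billing] handled: {task}"


def shipping_agent(task: str) -> str:
    return f"[shipping] handled: {task}"


class Supervisor:
    """Delegates subtasks to named specialists and consolidates their outputs."""

    def __init__(self, specialists: Dict[str, Agent]) -> None:
        self.specialists = specialists

    def run(self, plan: List[Tuple[str, str]]) -> str:
        # Each plan step is (specialist_name, subtask), supplied by the caller.
        outputs = []
        for name, subtask in plan:
            if name not in self.specialists:
                raise KeyError(f"no specialist registered for {name!r}")
            outputs.append(self.specialists[name](subtask))
        # Consolidate the specialists' outputs into one final response.
        return "\n".join(outputs)


supervisor = Supervisor({"billing": billing_agent, "shipping": shipping_agent})
final_response = supervisor.run([
    ("billing", "refund order 1001"),
    ("shipping", "cancel delivery for order 1001"),
])
print(final_response)
```

The sketch runs the specialists one after another and keeps no session state or memory, which are exactly the concerns the managed capability is said to take over.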

In addition, AWS made several announcements highlighting its commitment to balancing cost and latency, improving intelligence, and ensuring security in GenAI applications.

Amazon Bedrock Intelligent Prompt Routing optimizes cost, latency, and response quality, delivering up to 30% savings for customers. Amazon Bedrock Data Automation, a service designed to handle unstructured, multi-modal data, enables the development of more contextually aware and smart GenAI applications. Amazon Bedrock Guardrails, with multimodal toxicity detection, extends safeguards to image content, to ensure safer and more responsible AI-generated outputs.

Amazon Nova Models and Amazon Bedrock Marketplace

Amazon CEO Andy Jassy was excited to announce Amazon Nova, a new generation of state-of-the-art foundation models (FMs) designed to deliver frontier intelligence with industry-leading price performance, because “model choice matters.”

With Amazon Nova, you can significantly reduce costs and latency — up to 75% more cost-effective than other models on Bedrock — while achieving fast, efficient, and accurate results. Even the smallest Nova model delivers competitive performance against industry alternatives. Nova’s deep integration with Bedrock’s knowledge bases enhances its intelligence and flexibility for enterprise workloads.

The Amazon Nova family includes four powerful foundation models tailored for diverse generative AI use cases:

- Amazon Nova Micro: A cost-effective, text-only model delivering ultra-low latency for tasks like summarization, translation, classification, and coding. With a 128K token context length, it supports customization through fine-tuning and model distillation.

- Amazon Nova Lite: A fast, low-cost multimodal model for processing image, video, and text inputs to generate text outputs. Designed for real-time customer interactions and document analysis, it supports inputs of up to 300K tokens, including 30-minute videos, and offers customization via fine-tuning and model distillation.

- Amazon Nova Pro: A highly capable multimodal model offering a balance of accuracy, speed, and cost for complex workflows, such as API calling and financial document analysis. With a 300K token context length, it excels in tasks requiring deep understanding of visual and textual information, setting benchmarks in areas like video understanding and visual Q&A. It also serves as a teacher model for distilling variants of Micro and Lite.

- Amazon Nova Premier: The most advanced multimodal model, optimized for complex reasoning, and as a superior teacher for custom model distillation. Currently in training; coming early 2025.

AWS plans to continuously expand Nova’s capabilities. Upcoming releases include Nova Speech-to-Speech and Nova Any-to-Any, which will support seamless input/output flexibility across modalities.

With Amazon Nova, AWS is committed to offering users choices, because there is no one-size-fits-all model for GenAI. Whether you’re creating AI-driven assistants, crafting multimedia content, or processing complex data, Nova empowers businesses to innovate at scale with cost efficiency, speed, and accuracy.
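For readers who want to try a Nova model, the following sketch builds a request for the Amazon Bedrock Converse API using `boto3`. The model IDs are assumptions based on the naming used at launch, so confirm them (and regional availability) in the Bedrock console. `build_converse_request` and `ask_nova` are hypothetical helper names, not part of any AWS SDK.

```python
from typing import Any, Dict

# Assumed Bedrock model IDs for the generally available Nova models; verify
# them in the Bedrock console before use.
NOVA_MODEL_IDS = {
    "micro": "amazon.nova-micro-v1:0",  # text-only, lowest latency
    "lite": "amazon.nova-lite-v1:0",    # low-cost multimodal
    "pro": "amazon.nova-pro-v1:0",      # most capable of these three
}


def build_converse_request(tier: str, prompt: str, max_tokens: int = 256) -> Dict[str, Any]:
    """Build keyword arguments for the bedrock-runtime Converse call."""
    return {
        "modelId": NOVA_MODEL_IDS[tier],
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def ask_nova(tier: str, prompt: str) -> str:
    """Send the prompt to Bedrock; needs boto3, AWS credentials, and model access."""
    import boto3  # imported lazily so the request builder works without it

    client = boto3.client("bedrock-runtime")
    response = client.converse(**build_converse_request(tier, prompt))
    return response["output"]["message"]["content"][0]["text"]


request = build_converse_request("micro", "Summarize the re:Invent 2024 keynotes in one sentence.")
print(request["modelId"])
```

Keeping the request builder separate from the network call makes it easy to switch tiers: changing `"micro"` to `"pro"` changes only the `modelId` that is sent.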

In addition, AWS continues to expand its collaboration with other foundation model (FM) providers through the introduction of Amazon Bedrock Marketplace. This new capability offers users access to over 100 popular, emerging, and specialized FMs via Amazon Bedrock. With this launch, users can now easily discover, test, and deploy models from enterprise providers like IBM and Nvidia, specialized models such as Upstage’s Solar Pro for Korean language processing and EvolutionaryScale’s ESM3 for protein research, as well as general-purpose FMs from providers like Anthropic and Meta.

Next Generation of Amazon SageMaker and Unified Studio

The convergence of Big Data, Analytics, AI/ML, and GenAI in the Cloud has created a need for AWS to deliver an integrated experience for builders. As part of this effort, Amazon SageMaker has undergone a significant rework (over 140 new capabilities added since 2023) to meet evolving demands.

Now, AWS positions Amazon SageMaker as a central hub that offers a comprehensive suite of tools for data exploration, preparation, integration, big data processing, fast SQL analytics, machine learning (ML) model development, and generative AI application development.

The original SageMaker service has been renamed Amazon SageMaker AI. It is now integrated into the new SageMaker ecosystem while remaining available as a standalone solution for users focused solely on building, training, and deploying AI and ML models at scale.

Central to this transformation is SageMaker Unified Studio (preview), a consolidated development environment that combines the functionality of various standalone AWS tools, including Amazon Athena, Amazon EMR, AWS Glue, Amazon Redshift, Amazon Managed Workflows for Apache Airflow (MWAA), and the current SageMaker Studio. It also integrates Amazon Bedrock IDE (preview), an upgraded version of Amazon Bedrock Studio, to streamline the development of generative AI applications. Additionally, Amazon Q offers AI-powered assistance across workflows, enhancing productivity.

Core Capabilities of Next-Generation Amazon SageMaker:

- SageMaker Unified Studio (preview) – A single environment for building with all your data and tools for analytics and AI.

- Amazon SageMaker Lakehouse – Seamlessly unify data across Amazon S3 data lakes, Amazon Redshift data warehouses, and federated or third-party data sources.

- Data and AI Governance – Discover, govern, and collaborate securely on data and AI using Amazon SageMaker Catalog, built on Amazon DataZone.

- Data Processing – Analyze, prepare, and integrate data for analytics and AI with open-source frameworks on Amazon Athena, Amazon EMR, and AWS Glue.

- Model Development – Build, train, and deploy ML and foundation models (FMs) with fully managed infrastructure and workflows using Amazon SageMaker AI.

- Generative AI Application Development – Develop and scale generative AI applications with Amazon Bedrock.

- SQL Analytics – Unlock insights using Amazon Redshift, the most cost-efficient SQL engine on the market.

AWS also revealed Amazon SageMaker HyperPod flexible training plans, a capability for efficient large model training that helps users find optimal compute resources and complete training within their timelines and budgets. Amazon SageMaker HyperPod task governance enables priority-based resource allocation, fair-share utilization, and automated task preemption for optimal compute utilization across teams. Amazon SageMaker HyperPod recipes allow users to start training and fine-tuning popular publicly available FMs in minutes.

This revamped SageMaker delivers a unified, end-to-end solution for businesses looking to unlock the full potential of their Data and AI initiatives.

New Capabilities of Amazon Q

Last year at AWS re:Invent, AWS introduced Amazon Q Developer, a generative AI–powered assistant created to help developers design, build, test, deploy, and maintain software across integrated development environments (IDEs).

This year, AWS is expanding Amazon Q Developer’s capabilities to fully leverage the power of generative AI. Some of these include:

- Enhanced Documentation: Automatically generate and improve documentation within codebases.

- Code Review Support: Detect and resolve security vulnerabilities and code quality issues more effectively.

- Automated Unit Test Generation: Create unit tests and improve test coverage throughout the software development lifecycle.

Other enhancements to Amazon Q Developer include:

- .NET Transformation in IDE (preview) — Streamline modernization of .NET applications by porting them from Windows to cross-platform .NET directly within familiar IDEs.

- Web-Based Transformation (preview) — Use a unified web experience to accelerate large-scale transformations, including .NET porting, mainframe modernization, and VMware migration, with generative AI agents supervised by teams.

- Operational Issue Remediation (preview) — Investigate and resolve operational issues directly within the AWS Management Console, enabling faster troubleshooting for operators of all skill levels.

- GitLab Duo with Amazon Q — Enhance software development workflows by embedding advanced AI agent capabilities into GitLab, optimizing collaboration across tasks and teams.

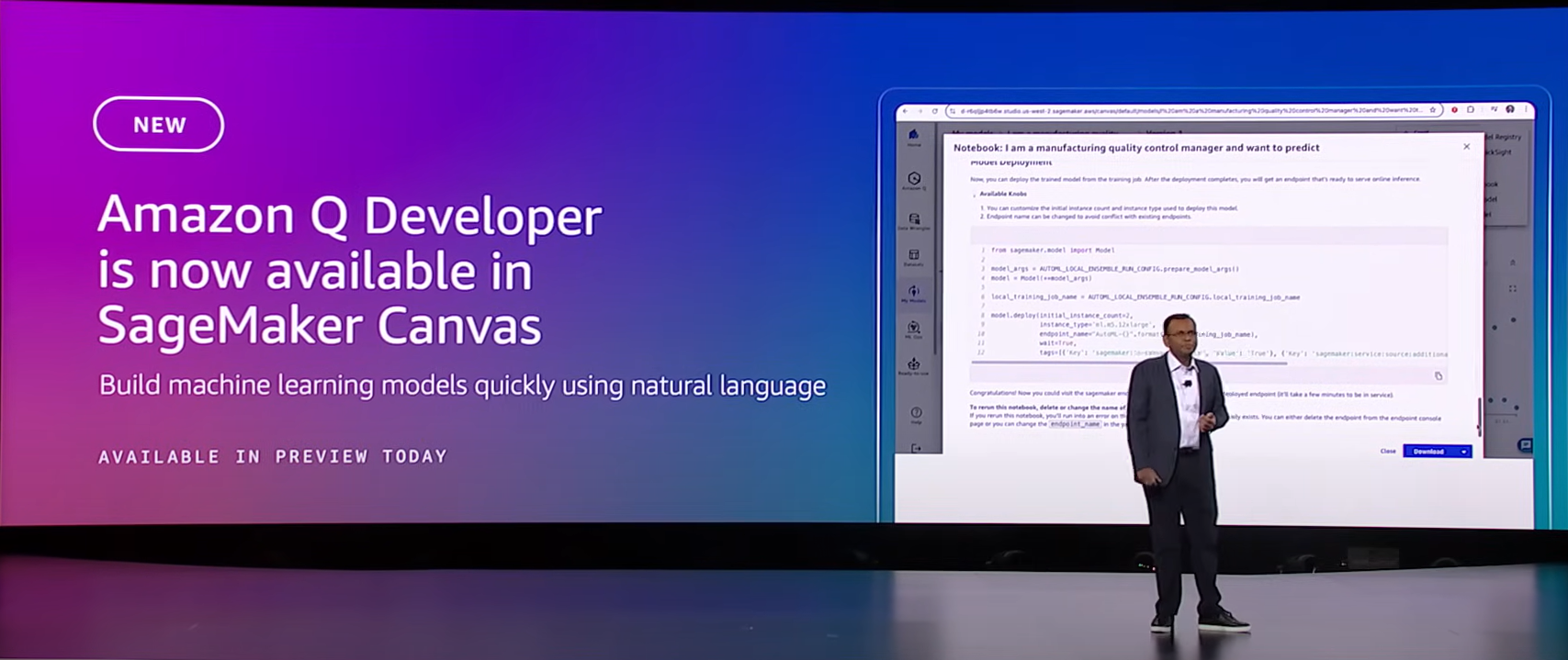

Most importantly, Amazon Q Developer is now integrated into Amazon SageMaker Canvas, bridging the gap between ML expertise and business needs. It enables domain experts who lack ML knowledge to create accurate, production-ready ML models using natural language interactions. Amazon Q Developer simplifies workflows by breaking down business problems, analyzing data, removing anomalies, and guiding users through building and evaluating custom ML models. It ensures transparency and user control at every step, accelerating innovation, reducing time to market, and freeing ML experts to focus on complex technical challenges.

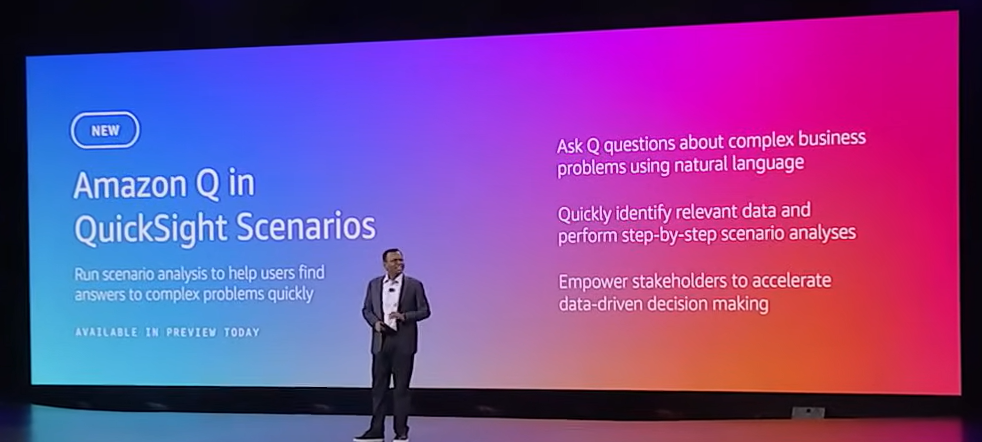

AWS also announced a new Amazon Q capability in QuickSight, enabling business users to perform scenario analysis up to 10x faster than spreadsheets. This AI-assisted experience guides users through complex data analysis with natural language prompts, automatically analyzing data, suggesting approaches, summarizing findings, and recommending actions. It eliminates the tedious manual work traditionally needed for such tasks.

Integrated into QuickSight, this capability leverages data from sources like Amazon Athena, Redshift, S3, and more, while also supporting .csv and .xlsx file uploads. It builds on QuickSight’s data Q&A features, empowering business professionals to streamline analysis and decision-making.

Embracing the Future at the Intersection of Data, AI/ML/GenAI, and Analytics

AWS re:Invent 2024 demonstrated a critical shift in enterprise technology: the convergence of Data, AI/ML/GenAI, and Analytics in the Cloud. The event highlighted that modern businesses need more than impressive technological demos — they require practical, scalable solutions that can be efficiently deployed to deliver real value across their organizations.

Key innovations like Amazon Nova models, enhanced Amazon Bedrock capabilities, and the reimagined Amazon SageMaker showcase AWS’ commitment to making advanced technologies at the intersection of Data, AI, and Analytics more accessible. The focus has moved beyond mere technological potential to practical implementation — delivering faster compute, more efficient and highly specialized foundation models, and integrated environments for data management, solution development and deployment, and BI & Analytics.

For Provectus, these announcements represent exciting opportunities to help clients navigate the rapidly evolving technological landscape. The future belongs to organizations that can effectively integrate AI, Data Analytics, and Cloud technologies to solve complex business challenges. We are ready to help our clients thrive.
