July 5, 2023

An Instruction-following GPT-J Model Based on Instructions Generated by Bloom

Authors:

Bogdan Ivashchenko & Rinat Gareev, ML Engineer & Senior Solutions Architect at Provectus

This article explores the challenges associated with accessing and utilizing large language models (LLMs) for natural language processing (NLP) applications, such as chatbots and text generators.

Today, advanced models (like those powering ChatGPT) are available for everyday use, but many organizations cannot practically apply them to business use cases because of the models' significant computing demands and the organizations' funding constraints.

To address this issue, we propose the deployment of smaller, more affordable LLMs utilizing open-source models such as GPT-J. We describe a self-instruct framework that can improve the ability of smaller LLMs to follow natural language instructions, without the need for extensive manual annotation. Then, we introduce a variant of LLM that users can experiment with and fine-tune, using small datasets tailored to their specific requirements.

The proposed approach aims to democratize access to complex intelligent systems, powered by Generative AI and LLMs, for both individuals and businesses.

Introduction

The field of language modeling has made significant advancements in recent years. Pre-trained and customized large language models, such as those used in ChatGPT, have achieved impressive results across a wide range of natural language processing tasks.

One of the most popular use cases for these models is the development of conversational interfaces, or chatbots. They are also commonly applied to text generation, translation, and other text-related tasks.

OpenAI language models, accessible via an API, are a popular choice for developing such solutions. However, they may not always meet specific requirements. For instance, a chatbot that discusses medical issues with customers handles sensitive data that must not be exposed to third parties. Likewise, an assistant application with a high request volume calls the model frequently, so running it on private infrastructure may be more cost-effective than relying on a third-party service. In addition, services like OpenAI enforce their own usage policies, potentially limiting a project’s scope.

To get around these obstacles, open-source models provide an effective alternative. The rise of open-source language models is already underway, with examples such as Dolly, Open Assistant, Red Pajama, and StableLM. In the following sections, we explore the process of choosing, adapting, and using a custom LLM.

Development Approach

The development of a custom LLM includes the following steps:

- Choose an open-source pre-trained LLM that fits all requirements

- Adapt the LLM for a specific use case and domain

  - Create a dataset to fine-tune the model

  - Fine-tune the model

- Use the LLM for the chosen use case

  - Deploy the model

  - Run inference for required tasks

The following sections provide an overview of each of these steps in more detail.

Choosing a Base Model

A language model can be either a base pre-trained model or a model aligned for specific tasks. Base pre-trained LLMs like GPT-3 are trained on a large corpus of text, with the goal of learning general language patterns and structures. These models are not tailored to any specific task or domain and can be fine-tuned for various tasks. Aligned models like ChatGPT are additionally trained for a particular task or domain. For example, ChatGPT is trained on conversational data and is designed to respond to natural language queries (prompts).

As we previously mentioned, significant resource demands can pose a barrier to the adoption of LLMs. Also, the results produced by these models often cannot be used for commercial purposes due to licensing restrictions. It is also worth noting that there is a correlation between the size of the model and the quality of its results: the larger the model, the better able it is to learn language patterns.

In this article, we use GPT-J, a GPT-2-like causal language model trained on the Pile dataset from EleutherAI. It is open-source and easy to deploy, making it a good candidate for experimentation.

Adapting the Model

Two primary methods for tailoring a model to a specific task are prompt engineering and model fine-tuning.

Prompt engineering involves crafting an appropriate query for the model. For instance, to prompt a pre-trained model to answer a question, several example questions with their answers can be placed before the main query (a technique known as few-shot prompting).
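As a minimal sketch of this pattern (example questions and answers placed before the main query), the helper below assembles such a prompt. The function name, the `Q:`/`A:` labels, and the example pairs are illustrative assumptions, not the prompt used in this article.

```python
def build_few_shot_prompt(examples, query):
    """Join solved Q/A pairs and leave the final answer open for the model."""
    parts = [f"Q: {question}\nA: {answer}" for question, answer in examples]
    parts.append(f"Q: {query}\nA:")  # the model is expected to continue from here
    return "\n\n".join(parts)


# Hypothetical demonstrations; any solved examples from the target domain would do.
examples = [
    ("What is the capital of France?", "Paris"),
    ("What is the capital of Japan?", "Tokyo"),
]
prompt = build_few_shot_prompt(examples, "What is the capital of Italy?")
print(prompt)
```

The prompt ends with an open `A:` so that a next-token predictor continues with the answer rather than with another question.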

Model fine-tuning, on the other hand, requires the creation of a dataset and retraining of the model. While this method requires more resources than prompt engineering, it typically delivers superior performance.

In the context of this article, prompt engineering is applied to create a dataset, which is subsequently used to fine-tune the model.

We will fine-tune the model to follow specific instructions. This allows users to interactively prompt the model to solve tasks, because the model is trained to follow instructions rather than merely continue text. There is a growing interest in methods for training smaller LLMs to function similarly to their larger instruction-following counterparts, such as ChatGPT. One way to achieve this is by using self-instruct approaches. The Self-Instruct framework enables language models to better follow natural language instructions. This is accomplished by using the model’s own outputs to generate an extensive collection of instructional data.

— Creating a Dataset

There are several approaches to creating a dataset.

The first is to create the dataset manually by writing out all possible questions and answers for a specific use case. However, training such a model requires a large amount of data, from thousands to hundreds of thousands of examples, so this option takes a lot of effort.

The second option is to give a larger and better language model the task of generating the required amount of data from a smaller number of examples. While this saves time, the quality of such data may be lower than in the manual method. In this article, we opted to use BLOOM, one of the largest open-source language models, because it allows for generating more precise instructions.
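The second option can be sketched as a loop that shows the large model a few existing instructions, asks it for new ones, and keeps only those that are not near-duplicates. This is a simplified illustration under stated assumptions: `generate` is a hypothetical callable standing in for the large model, the prompt layout is invented for the example, and `difflib` similarity replaces the ROUGE-L overlap filter used by the original Self-Instruct framework.

```python
import random
from difflib import SequenceMatcher


def is_novel(candidate, pool, threshold=0.7):
    """Reject candidates that are near-duplicates of anything already kept."""
    return all(
        SequenceMatcher(None, candidate.lower(), seen.lower()).ratio() < threshold
        for seen in pool
    )


def grow_instruction_pool(seeds, generate, rounds=3, shots=2, rng=None):
    """Expand a small seed set by repeatedly prompting a generator model."""
    rng = rng or random.Random(0)
    pool = list(seeds)
    for _ in range(rounds):
        # Sample a few existing instructions to use as in-context demonstrations.
        demos = rng.sample(pool, k=min(shots, len(pool)))
        prompt = "\n".join(f"Instruction: {d}" for d in demos) + "\nInstruction:"
        for candidate in generate(prompt):
            candidate = candidate.strip()
            if candidate and is_novel(candidate, pool):
                pool.append(candidate)
    return pool


def fake_generate(prompt):
    """Hypothetical stand-in for the large model; returns fixed candidates."""
    return [
        "Translate the sentence into German.",
        "Summarize the paragraph in one line.",
    ]


seeds = [
    "Summarize the paragraph in one line.",
    "List three synonyms for the given word.",
]
pool = grow_instruction_pool(seeds, fake_generate)
print(pool)
```

The filter matters because a generator prompted with similar demonstrations tends to repeat itself; without it, the dataset would fill up with duplicates.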

— Fine-tuning the Model

During fine-tuning, the parameters of the pre-trained model are adjusted to better fit the target task or domain, resulting in improved performance and better language understanding. For fine-tuning, we used the DeepSpeed library and the AdamW optimizer.
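For orientation, a DeepSpeed run is usually driven by a JSON configuration that names the optimizer and its settings. The sketch below builds such a configuration with AdamW. Every numeric value, the mixed-precision setting, and the ZeRO stage are placeholder assumptions, not the settings used for this article.

```python
import json

# Placeholder values for illustration only; tune them for the actual hardware and data.
ds_config = {
    "train_micro_batch_size_per_gpu": 4,
    "gradient_accumulation_steps": 8,
    "optimizer": {
        "type": "AdamW",
        "params": {
            "lr": 1e-5,
            "betas": [0.9, 0.999],
            "eps": 1e-8,
            "weight_decay": 0.01,
        },
    },
    "fp16": {"enabled": True},          # assumed: half precision to reduce memory use
    "zero_optimization": {"stage": 2},  # assumed: shard optimizer state and gradients
}

config_json = json.dumps(ds_config, indent=2)
print(config_json)
```

The resulting JSON can be saved to a file and passed to the training script that initializes DeepSpeed.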

Implementation Details

First, we deployed the BLOOM 176B model using Amazon SageMaker. Then, approximately 50,000 instructions were generated and used to fine-tune a smaller GPT-J model. These models can be run for training or inference in several ways: locally, on cloud infrastructure, or through public APIs (inference only). Finally, we compared the results of the smaller model against ChatGPT to check its performance. By following these steps, we achieved our original goal: to develop a potent yet accessible LLM that meets the requirements of both individual users and businesses.

Generating an Instruction Dataset

To generate instructions, we utilized the LLaMA code. It was modified to employ BLOOM instead of GPT-3. Some instructions were produced using a proprietary cloud solution, while others were generated via a public API.

— Deploying BLOOM Locally

Storing a BLOOM checkpoint requires 330 GB of disk space, yet the model can be run on a computer with as little as 16 GB of RAM, provided sufficient disk space is available.

BLOOM is a causal language model trained as a next-token predictor. It is adept at connecting multiple concepts within a sentence and can accurately solve non-trivial problems like arithmetic, programming, and translation. In this article, we used the Hugging Face Transformers library, complemented by custom Python code, to load model weights from the disk and generate a sequence of tokens. This library provides an implementation of the BLOOM architecture, which consists of 70 transformer blocks with self-attention and multi-layer perceptron layers, an input embeddings layer, and an output language-modeling layer.

A pre-trained BLOOM checkpoint can be cloned from the Hugging Face models repository, where it is split into 72 sharded files. The downloaded files include the word embeddings with an associated layer norm, the 70 BLOOM blocks, and the final layer norm.

To run BLOOM, the input tokens are passed through each of the 70 BLOOM blocks. Because this is a sequential operation, only one block needs to be held in RAM at a time.
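The control flow of this block-by-block approach can be sketched as follows. This is a schematic illustration, not the custom loading code used for this article: `load_block` is a hypothetical callable that reads one block's weights from disk and returns a function applying that block, and the toy loader below only stands in for real transformer blocks.

```python
def run_blockwise(hidden, block_ids, load_block):
    """Apply blocks in order while keeping a reference to only one at a time."""
    for block_id in block_ids:
        block = load_block(block_id)  # read this block's weights from disk
        hidden = block(hidden)        # forward pass through the block
        del block                     # drop the reference before loading the next block
    return hidden


def toy_loader(block_id):
    """Hypothetical loader: each toy 'block' just adds 1 to its input."""
    return lambda x: x + 1


# 70 blocks, mirroring the number of BLOOM blocks mentioned above.
result = run_blockwise(0, range(70), toy_loader)
print(result)
```

The trade-off is speed: every generated token requires all blocks to be read from disk again, so this approach favors low memory use over throughput.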

— Using a Public BLOOM API

One option for utilizing the BLOOM model involves accessing a public API. This simply requires registering on Hugging Face and acquiring an access token. Generation behavior can be adjusted through additional request parameters. However, this method comes with limitations such as potential service unavailability due to server load or restrictions on API usage.
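A request to the hosted model might look like the sketch below, which uses only the Python standard library. The endpoint URL and the `inputs`/`parameters` payload layout follow the Hugging Face Inference API as we recall it and may have changed since, so treat them as assumptions. The token `hf_xxx` and the parameter values are placeholders.

```python
import json
import urllib.request

# Assumed endpoint for the hosted BLOOM model; verify against current documentation.
API_URL = "https://api-inference.huggingface.co/models/bigscience/bloom"


def build_request(prompt, token, **parameters):
    """Create a POST request carrying the prompt and generation parameters."""
    payload = {"inputs": prompt, "parameters": parameters}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate(prompt, token, **parameters):
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(build_request(prompt, token, **parameters)) as resp:
        return json.loads(resp.read())


# Placeholder token and parameter values, shown without sending anything.
request = build_request("Instruction:", "hf_xxx", max_new_tokens=64, temperature=0.7)
print(request.full_url)
```

Because the service can be unavailable under load, production code would typically wrap `generate` in retries with a delay between attempts.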

— Selecting Parameters for BLOOM

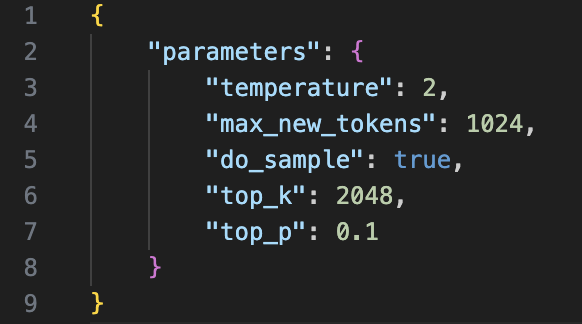

Ensuring the model generates appropriate instructions requires the model to not solely select the most likely phrases, but also to avoid choosing a continuation that is significantly out of context. Through strict experimentation, we identified the following parameters for usage.
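To illustrate the kind of trade-off these parameters control, the sketch below applies temperature scaling and nucleus (top-p) filtering to a toy next-token distribution. It is a generic illustration of common sampling controls, assuming they are among the parameters of interest. The tokens, logits, and the values 1.0 and 0.9 are made up for the example and are not the settings selected for this article.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; lower temperature sharpens the distribution."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def nucleus(tokens, logits, temperature=1.0, top_p=0.9):
    """Keep the most likely tokens whose cumulative probability reaches top_p."""
    probs = softmax(logits, temperature)
    ranked = sorted(zip(tokens, probs), key=lambda pair: pair[1], reverse=True)
    kept, cumulative = [], 0.0
    for token, prob in ranked:
        kept.append((token, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    total = sum(prob for _, prob in kept)
    return [(token, prob / total) for token, prob in kept]  # renormalize


def sample(tokens, logits, temperature=1.0, top_p=0.9, rng=random):
    """Draw one token from the filtered distribution."""
    choices, weights = zip(*nucleus(tokens, logits, temperature, top_p))
    return rng.choices(choices, weights=weights, k=1)[0]


# Toy distribution: two plausible continuations and one out-of-context token.
tokens = ["the", "a", "zebra"]
logits = [2.0, 1.5, -3.0]
kept_tokens = [token for token, _ in nucleus(tokens, logits)]
print(kept_tokens, sample(tokens, logits))
```

Sampling among the kept tokens avoids always choosing the single most likely phrase, while the cutoff removes the unlikely tail that would produce out-of-context continuations.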

Here is an example of a prompt that can be used to generate this instruction.

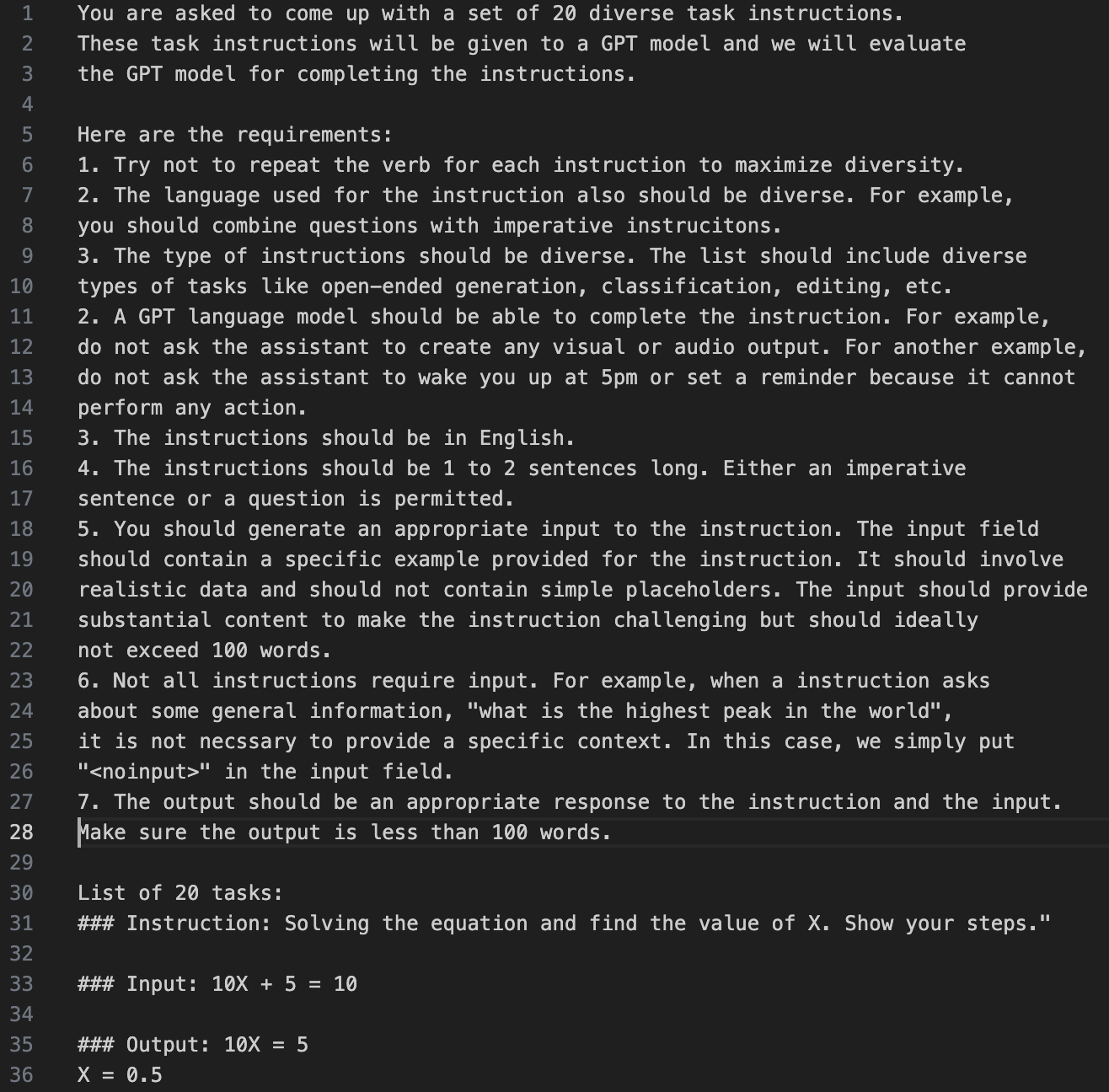

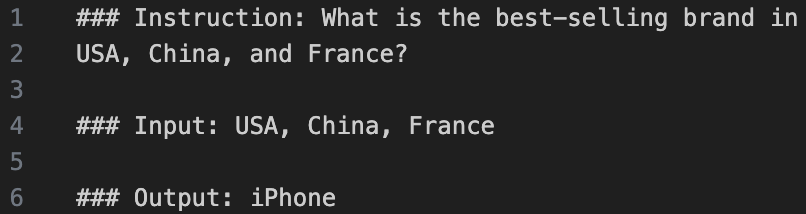

And here is an example of a successfully generated instruction.

Fine-tuning GPT-J

Since LLMs use a lot of memory and require multiple GPUs to run efficiently, cloud resources from AWS, Azure, Google Cloud Platform, or Databricks can be leveraged.

To fine-tune GPT-J, we opted to use a solution by marckarp, which gets the GPT-J model up and running on Amazon SageMaker. The generated data was converted into the appropriate format for training. A Docker image was then created and uploaded to Amazon ECR. Finally, GPT-J was fine-tuned using an Amazon SageMaker Estimator.
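The data conversion step might look like the sketch below, which renders each instruction record as a single training text and serializes it as one JSON line. The prompt template, the `instruction`/`output` field names, and the `{"text": ...}` schema are hypothetical; the format actually expected by the referenced solution is not described here.

```python
import json

# Hypothetical template; the actual training format may differ.
PROMPT_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n{output}"
)


def to_training_lines(records):
    """Render each record with the template and serialize it as a JSON line."""
    lines = []
    for record in records:
        text = PROMPT_TEMPLATE.format(
            instruction=record["instruction"].strip(),
            output=record["output"].strip(),
        )
        lines.append(json.dumps({"text": text}))
    return lines


# Made-up record for illustration.
records = [{"instruction": "Translate 'hello' into French. ", "output": " Bonjour"}]
lines = to_training_lines(records)
print(lines[0])
```

Using the same template at inference time, with the response left empty, keeps the prompts the model sees in production consistent with what it saw during training.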

Deploying GPT-J

Deploying LLMs is the process of integrating a trained language model into a larger system or application, to enable it to perform the intended task. This involves setting up the necessary infrastructure, such as servers or cloud computing resources, and integrating the model into the application’s code or API. Deployment for LLMs also includes testing and monitoring the performance of the model in a real-world setting, to ensure that it performs as expected and meets the requirements of the intended task.

As with fine-tuning, we used marckarp’s solution to deploy the fine-tuned GPT-J to AWS. The steps are similar: build a Docker image, push it to Amazon ECR, and deploy for use.
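Once an endpoint is running, inference could be requested along the lines of the sketch below, which uses the `sagemaker-runtime` client from `boto3`. The endpoint name and the JSON payload layout are assumptions, since the request schema depends on the serving container. `boto3` is imported inside the function so that the payload helper can be tried without AWS dependencies or credentials.

```python
import json


def build_payload(instruction, max_new_tokens=128, temperature=0.7):
    """Serialize a request body; the field names here are hypothetical."""
    return json.dumps(
        {
            "inputs": instruction,
            "parameters": {
                "max_new_tokens": max_new_tokens,
                "temperature": temperature,
            },
        }
    )


def ask_endpoint(endpoint_name, instruction):
    """Call a deployed SageMaker endpoint (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so build_payload works without AWS dependencies

    client = boto3.client("sagemaker-runtime")
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(instruction),
    )
    return json.loads(response["Body"].read())


payload = build_payload("Explain what a language model is.")
print(payload)
```

A call such as `ask_endpoint("my-gptj-endpoint", "...")`, with a placeholder endpoint name, would return whatever JSON the serving container produces.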

Results

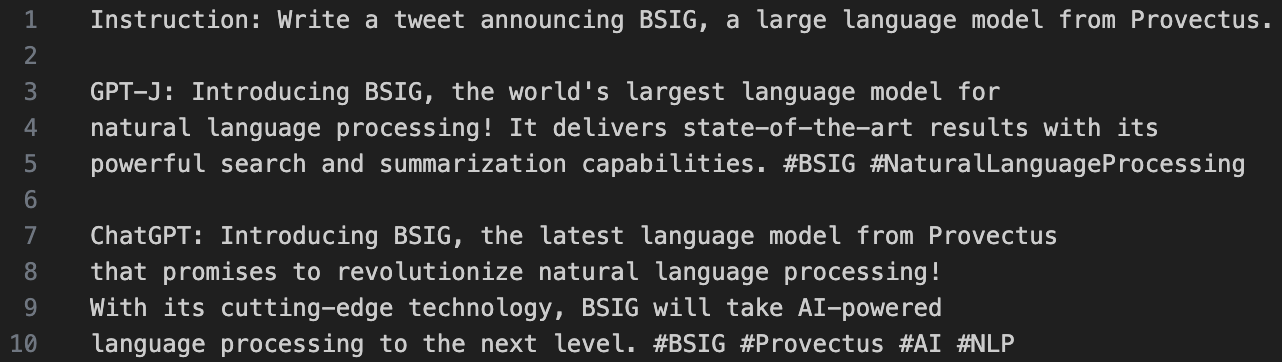

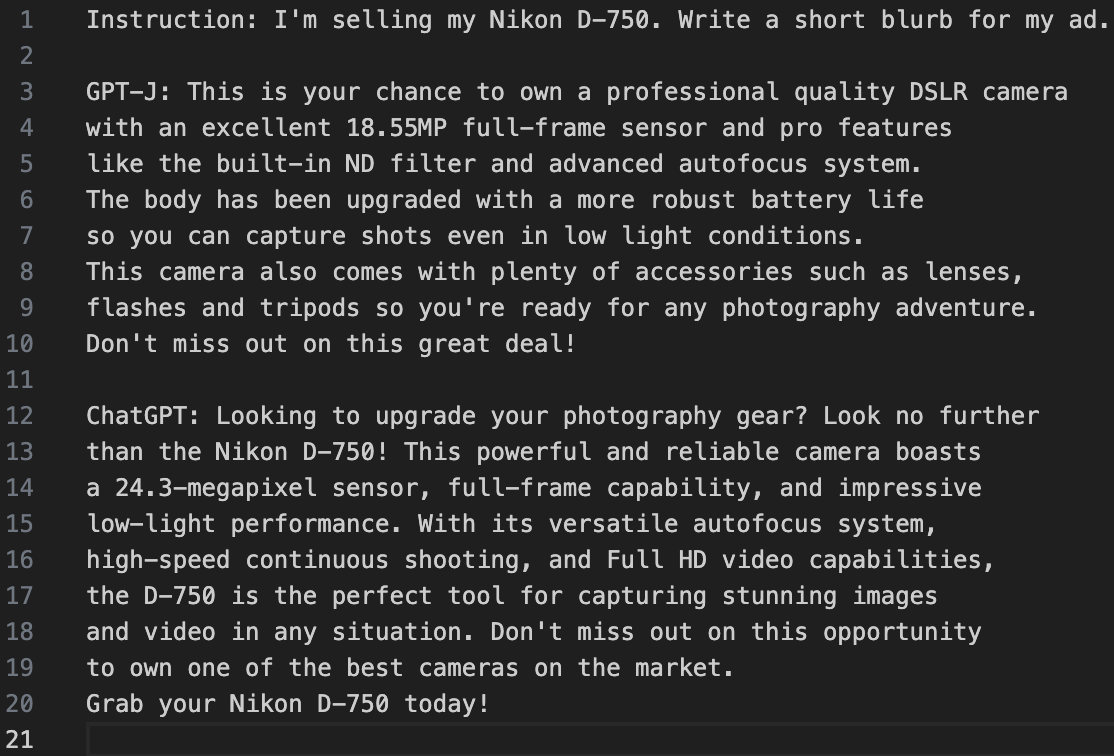

Below are a couple of examples of what the fine tuned model generates compared to ChatGPT. For more examples you can check the table available here.

Conclusion

In this article, we have proposed a method for creating an open-source language model that allows users to customize other models according to their specific needs. This approach involves utilizing existing language models and fine-tuning them on smaller, specialized datasets. By adopting this strategy, users can develop powerful language models without requiring significant computational resources or funding. The weights for this model can be found on the Hugging Face platform.

The method described in this article aligns with the growing trend of democratization in AI, providing individuals and businesses with the opportunity to design and build their own language models. However, it is important to acknowledge the limitations of these models and use them ethically. Users should be cautious about potential biases or discriminatory language that the model may reproduce if its training data is biased. Additionally, generating false or misleading information could have severe consequences for individuals and organizations.

To address these risks, careful selection and preprocessing of training data are essential, along with continuous monitoring and evaluation of the model’s performance. Educating users and developers about the ethical considerations and potential risks associated with language models is also crucial. Adequate safeguards should be implemented to prevent misuse or exploitation of this technology.

References

- OpenAI. (2023). ChatGPT. https://chat.openai.com

- Databricks. (2023). Dolly. https://www.databricks.com/blog/2023/04/12/dolly-first-open-commercially-viable-instruction-tuned-llm

- Together Computer. (2023). RedPajama: An Open Source Recipe to Reproduce LLaMA training dataset. https://github.com/togethercomputer/RedPajama-Data

- Stability AI. (2023). StableLM. https://github.com/Stability-AI/StableLM

- Janna Lipenkova. (Sep 26 2022). Choosing the right language model for your NLP use case. https://towardsdatascience.com/choosing-the-right-language-model-for-your-nlp-use-case-1288ef3c4929

- Wang, Ben and Komatsuzaki, Aran. (2021). GPT-J-6B: A 6 Billion Parameter Autoregressive Language Model. https://github.com/kingoflolz/mesh-transformer-jax

- Gao, Leo and Biderman, Stella and Black, Sid and Golding, Laurence and Hoppe, Travis and Foster, Charles and Phang, Jason and He, Horace and Thite, Anish and Nabeshima, Noa and Presser, Shawn and Leahy, Connor. (2020). The Pile: An 800GB Dataset of Diverse Text for Language Modeling. https://pile.eleuther.ai/

- Wang, Yizhong and Kordi, Yeganeh and Mishra, Swaroop and Liu, Alisa and Smith, Noah A. and Khashabi, Daniel and Hajishirzi, Hannaneh. (2022). Self-Instruct: Aligning Language Model with Self Generated Instructions. https://github.com/yizhongw/self-instruct

- BigScience. (2022). Introducing The World’s Largest Open Multilingual Language Model: BLOOM. https://bigscience.huggingface.co/blog/bloom

- Marckarp. (2023). Fine-tune and deploy GPT-J-6B on Amazon SageMaker. https://github.com/marckarp/amazon-sagemaker-gptj