June 8, 2023

Exploring Intelligent Search Solutions: A Comparative Analysis of Amazon Kendra Integration and Large Language Model Crawlers

Author:

Alexander Demchuk, Software Architect at Provectus

Amazon Kendra and LlamaIndex can help with knowledge integration, but they fall short in connecting the diverse knowledge sources needed for efficient intelligent search. In this article, we compare the existing solutions and explain how to overcome their limitations using a custom Google Drive crawler.

Companies often face difficulties in consolidating their knowledge base when their data is spread across various knowledge storage platforms like Google Drive, Confluence, Notion, etc. The main obstacle is that each of these platforms has its own unique API or query language. This requires a solution that can unify data sources under a common structure, with a universal query language, preferably a human language with inherent “intelligence.”

There are multiple tools and applications that facilitate knowledge integration, either as a built-in feature like Amazon Kendra, or via existing connectors and crawlers, as with tools like LlamaIndex and LangChain that use large language models (LLMs) for intelligent search. But while these tools provide a variety of crawlers for known data sources, they do not have all the necessary connectors for diverse knowledge sources.

Our company has multiple sources of data, so we applied Generative AI to build a general search system, and we built missing crawlers or enhanced existing ones in order to avoid limitations while remaining compliant with our data security policies. We have some data in Google Drive, and we wanted to develop a semantic search tool for documents built around an LLM.

This article compares out-of-the-box solutions like Amazon Kendra with open-source libraries like LlamaIndex, and explains how the team at Provectus overcame the limitations of the LlamaIndex crawler by developing our own Google Drive crawler.

Amazon Kendra

As I delved into the world of intelligent search solutions, I decided to put two popular tools head-to-head – Amazon Kendra and LlamaIndex – to discover their unique strengths and limitations. Let’s first take a closer look at Amazon Kendra.

Amazon Kendra is a user-friendly machine learning-powered search and intelligence service offered by Amazon Web Services (AWS). It is designed to help organizations index and search all types of data sources quickly and efficiently. Amazon Kendra uses advanced techniques, such as natural language processing and computer vision, to understand and process user queries and provide accurate, relevant results in a fraction of the time it would take to do a manual search of data sources. With its intuitive interface and powerful features, Amazon Kendra is a valuable tool for organizations looking to make the most of their data.

Here are some major features and benefits of Amazon Kendra:

- Uses advanced machine learning techniques, including natural language processing, computer vision, and deep learning, to understand user queries and provide accurate results.

- Features a user-friendly interface that makes it easy to set up and use without technical expertise.

- Supports a wide range of data sources, including structured and unstructured data, making it versatile and adaptable.

- Offers connectors for popular data sources, such as SharePoint, Salesforce, and Amazon S3, to make it easy to integrate with existing systems.

- Provides out-of-the-box features such as type-ahead search suggestions, query completion, and document ranking to improve the search experience for users.

Limitations and drawbacks of Amazon Kendra include:

- It may be expensive for small businesses or organizations with limited budgets, particularly if indexing large volumes of data is required. Amazon Kendra’s pricing is based on a combination of the number of documents to be indexed and the number of monthly queries:

  - Indexing: You pay per GB of data indexed per month. The price varies based on the data source, with different rates for webpages, Amazon S3 objects, and databases.

  - Querying: You pay for each query made to Amazon Kendra. The price varies based on the number of queries per month, with discounts available for higher usage tiers.

- It may require customization to work with specific data sources or search requirements, which can be time-consuming and may require technical expertise. That said, basic setup is straightforward and is done through either the AWS Management Console or the CLI. Custom crawlers are also simple to add; a good explanation of how to add yours is: Adding documents to Amazon Kendra index with boto3.

- Amazon Kendra is a fully managed service. It is a sort of “black box” that you cannot fully control or tune (e.g., you cannot change its model or use multiple models).
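As a rough illustration of the boto3 route mentioned above, the sketch below pushes plain-text documents into an existing Kendra index through the `batch_put_document` call. The helper names (`build_kendra_documents`, `push_to_kendra`), the record shape, and the batch size of 10 are assumptions made for this example, not part of our crawler; check the current API limits before relying on them.

```python
from typing import Iterable, List


def build_kendra_documents(items: Iterable[dict]) -> List[dict]:
    """Turn simple {'id', 'title', 'text'} records (hypothetical shape)
    into the Document dicts expected by batch_put_document."""
    documents = []
    for item in items:
        documents.append({
            "Id": item["id"],
            "Title": item["title"],
            "Blob": item["text"].encode("utf-8"),  # raw document bytes
            "ContentType": "PLAIN_TEXT",
        })
    return documents


def push_to_kendra(client, index_id: str, documents: List[dict],
                   batch_size: int = 10) -> int:
    """Send documents in small batches; return how many Kendra rejected.

    `client` is expected to be a boto3 Kendra client, for example
    boto3.client("kendra"). The batch size is an assumed, conservative value.
    """
    failed = 0
    for start in range(0, len(documents), batch_size):
        batch = documents[start:start + batch_size]
        response = client.batch_put_document(IndexId=index_id, Documents=batch)
        failed += len(response.get("FailedDocuments", []))
    return failed
```

With real credentials, usage would look like `push_to_kendra(boto3.client("kendra"), "<your-index-id>", build_kendra_documents(records))`, where the index ID is a placeholder for your own.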

LlamaIndex

While Amazon Kendra is a powerful intelligent search solution, it is not the only available option. Let’s look at one alternative, LlamaIndex, and review its features and capabilities.

LlamaIndex is an open-source project that helps connect LLMs with external data sources such as databases, APIs, and PDFs. It works with GPT-family models out of the box and ships as a Python package. It is complemented by a data loader hub called Llama Hub (llamahub.io), a community library of data loaders for LLMs that is available on GitHub and is designed to simplify connecting LLMs to all sorts of knowledge sources.

Llama Crawlers

To make a proper comparison between Amazon Kendra and LlamaIndex, I explored the features and capabilities of LlamaIndex by building my own Google Drive loader and using it for data indexing. You may wonder why I didn’t simply use an existing solution, and I want to make it clear that I am not discouraging the use of community-provided solutions. Our decision to build a custom crawler stems from specific requirements and limitations, encountered along the way, that the existing data loader could not cover.

Before delving into the finer details of the implementation, it is worth taking a moment to explain how you can add your custom loader to the Llama Hub. Fortunately, this is a straightforward procedure that involves creating a dedicated folder for your loader and including a base.py file with your original code. From there, you can reference your loader in the library.json file, which is used by LlamaIndex’s download_loader function. For more information on this process, please check out this link.

I do not intend to publish my crawler to Llama Hub due to certain compliance issues. Nevertheless, it overcomes some of the limitations that make the existing Google Drive crawler less than ideal for certain use cases, and it showcases how to avoid them.

Here are a few of the most notable limitations of LlamaIndex’s Google Drive crawler:

- Data loaders are shipped via a base.py file, which means that all code parts are combined in one file, making it hard to maintain, debug, etc. Either proper packaging or a clearer structure would help to solve this problem.

- LlamaIndex’s Google Drive crawler does not support service account authentication, which is a convenient authentication method: you simply create a service account and share the folders to be indexed with it. In our case, we were only allowed to use pre-provisioned service accounts.

- The crawler is rigidly configured: you cannot specify which file types to read or which to exclude. It would be great if you could pass this configuration into the crawler class, and our implementation allows us to do so.

- Storage space. The majority of folder-like crawlers, including the Google Drive one, download files to the local file system behind the scenes and then crawl them by means of SimpleDirectoryReader, which in turn can load a lot of known format readers. Extra space may be a concern, especially if you run your crawlers in a container and have volume restrictions. So instead of downloading all our Google Drive content into a local folder, we just reused available memory to store the content in the LlamaIndex Document entity, and added it to the index chunk by chunk.
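The last three points can be sketched roughly as follows. This is an illustrative outline rather than our actual crawler: the function names are hypothetical, and it assumes the `google-auth` and `google-api-python-client` packages plus a service account key file that has been granted access to the folder. Note that `get_media` only works for binary files; native Google Docs would need an export call instead.

```python
import io
from typing import List, Sequence

SCOPES = ["https://www.googleapis.com/auth/drive.readonly"]


def build_drive_query(folder_id: str, mime_types: Sequence[str] = ()) -> str:
    """Build a Drive v3 search query, optionally restricted to given MIME types."""
    query = f"'{folder_id}' in parents and trashed = false"
    if mime_types:
        type_filter = " or ".join(f"mimeType = '{m}'" for m in mime_types)
        query += f" and ({type_filter})"
    return query


def build_drive_service(key_path: str):
    """Authenticate with a service account key file (path is an assumption)."""
    from google.oauth2 import service_account
    from googleapiclient.discovery import build

    credentials = service_account.Credentials.from_service_account_file(
        key_path, scopes=SCOPES
    )
    return build("drive", "v3", credentials=credentials)


def list_files(service, folder_id: str, mime_types: Sequence[str] = ()) -> List[dict]:
    """List files in a folder, following pagination."""
    files, page_token = [], None
    while True:
        response = service.files().list(
            q=build_drive_query(folder_id, mime_types),
            fields="nextPageToken, files(id, name, mimeType)",
            pageToken=page_token,
        ).execute()
        files.extend(response.get("files", []))
        page_token = response.get("nextPageToken")
        if not page_token:
            return files


def download_to_memory(service, file_id: str) -> bytes:
    """Download a binary file into memory instead of the local file system."""
    from googleapiclient.http import MediaIoBaseDownload

    buffer = io.BytesIO()
    downloader = MediaIoBaseDownload(buffer, service.files().get_media(fileId=file_id))
    done = False
    while not done:
        _, done = downloader.next_chunk()
    return buffer.getvalue()
```

Filtering by MIME type in the query keeps unwanted files from ever being downloaded, which also helps with the storage concern.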

Custom Google Drive Crawler

In this section, we will dive deeper into the implementation details of our custom crawler and explore both the strengths and limitations of LlamaIndex’s solution. By examining these flaws and benefits, I want to provide a more comprehensive understanding of the tool’s capabilities and inspire ideas on how to improve its architecture and performance. For more information about existing implementations, please visit the official llama-hub GitHub repository.

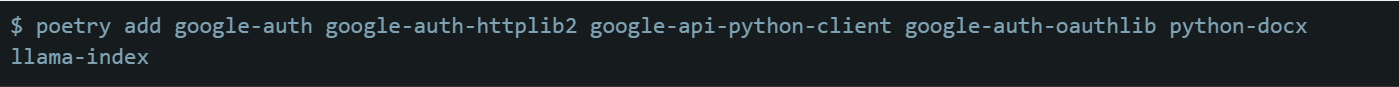

To build a Google Drive reader, you will need to use the Google Drive SDK. Since I am using Python, I will be using the Python version of the SDK. To get started, you need to install the following packages – I’m using the Poetry package manager, but the choice is up to you.

I have also added a couple more packages that we’ll need later, like python-docx and llama-index. I’ll cover limitations by providing our code samples.

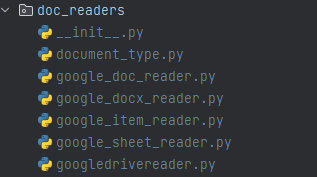

As previously mentioned, a data loader in LlamaIndex is implemented through a single base.py file where all components are defined in one place. While this approach can be effective for certain use cases, I believe there is room for improvement. Specifically, by adopting the Template Method pattern and defining a parent loader, we can create a more polymorphic system that allows for greater flexibility and ease of use. With this approach, users only need to implement a single method for extracting file content, while all other components can be standardized and shared across multiple loaders. Here is the proposed folder structure and parent loader abstraction.

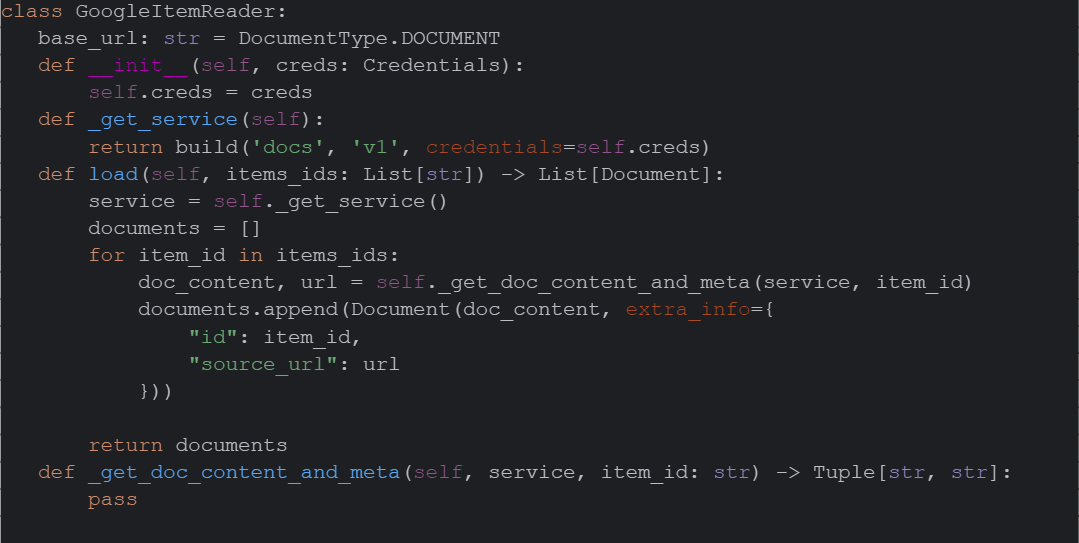

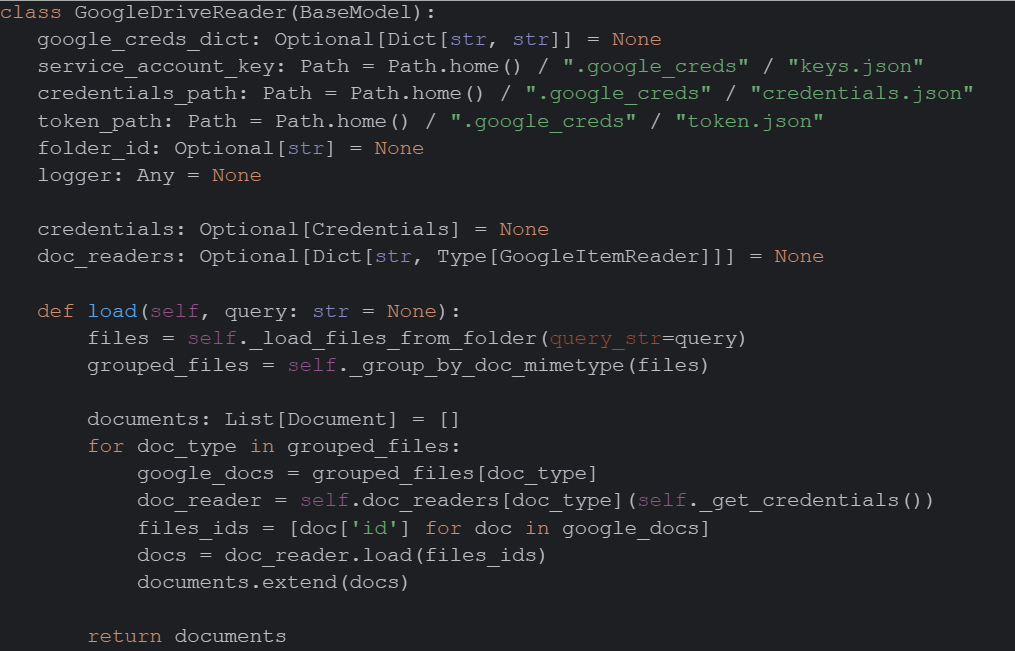

Here, google_item_reader.py defines the GoogleItemReader class. Its main callable method is load, while _get_doc_content_and_meta is the method that each subclass implements to define how content is retrieved. All listed reader classes inherit from GoogleItemReader, and googledrivereader.py is a “folder” reader that combines either all of the other readers or only those that are configured.
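A minimal sketch of this Template Method arrangement might look like the following. Only the names GoogleItemReader, load, and _get_doc_content_and_meta come from the description above; the `Document` dataclass is a stand-in for the LlamaIndex Document entity, and `InMemoryTextReader` is a toy subclass invented purely to show the pattern.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Document:
    """Stand-in for the LlamaIndex Document entity (text plus metadata)."""
    text: str
    extra_info: Dict[str, str] = field(default_factory=dict)


class GoogleItemReader(ABC):
    """Parent loader: owns the shared flow, delegates content extraction."""

    def __init__(self, service):
        self._service = service  # e.g. an authenticated Drive client

    def load(self, doc_ids: List[str]) -> List[Document]:
        """Template method: identical for every reader."""
        documents = []
        for doc_id in doc_ids:
            content, meta = self._get_doc_content_and_meta(doc_id)
            documents.append(Document(text=content, extra_info={"doc_id": doc_id, **meta}))
        return documents

    @abstractmethod
    def _get_doc_content_and_meta(self, doc_id: str) -> Tuple[str, Dict[str, str]]:
        """The only step a concrete reader has to implement."""


class InMemoryTextReader(GoogleItemReader):
    """Toy subclass (hypothetical): the 'service' is just a dict of texts."""

    def _get_doc_content_and_meta(self, doc_id: str) -> Tuple[str, Dict[str, str]]:
        return self._service[doc_id], {"source": "memory"}
```

For example, `InMemoryTextReader({"a": "hello"}).load(["a"])` yields one Document whose text is "hello". A real reader would replace the dictionary lookup with a Drive API call.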

I won’t provide all implementation information, but here are a few examples explaining crawler tweaks; all code can be found here in my repository.

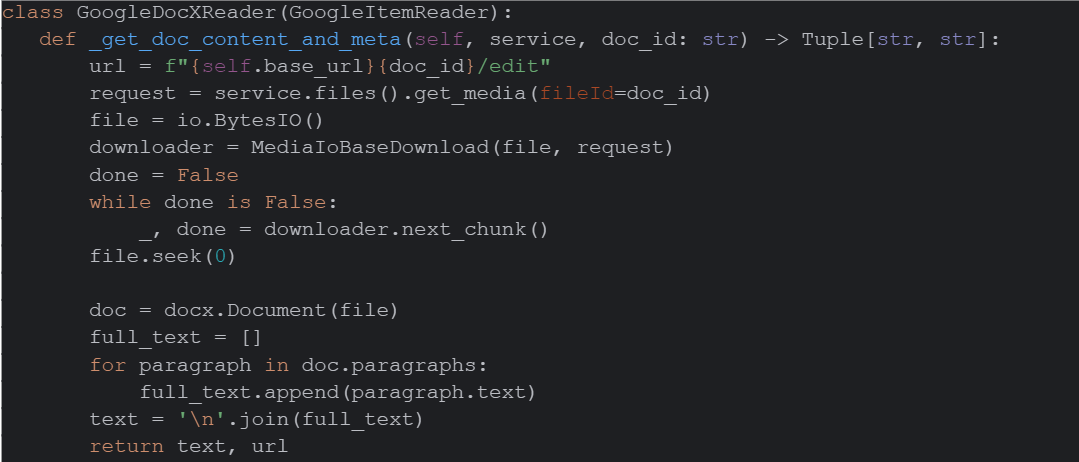

Here is a GoogleDocXReader that showcases polymorphic advantages and also addresses storage space limitations by loading document content into memory by chunks.
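To give a feel for the in-memory, chunk-by-chunk idea, here is a small sketch using python-docx. It is an assumption-laden illustration, not the GoogleDocXReader itself: the function names and the 1,000-character default are hypothetical, and the chunking rule (group whole paragraphs up to a size limit) is just one reasonable choice.

```python
import io
from typing import Iterable, Iterator


def chunk_paragraphs(paragraphs: Iterable[str], max_chars: int = 1000) -> Iterator[str]:
    """Group non-empty paragraphs into newline-joined chunks of at most
    max_chars characters. A single paragraph longer than max_chars is
    emitted as its own chunk rather than being split."""
    current, size = [], 0
    for paragraph in paragraphs:
        text = paragraph.strip()
        if not text:
            continue
        # `size` counts each stored paragraph plus one separator character.
        if current and size + len(text) > max_chars:
            yield "\n".join(current)
            current, size = [], 0
        current.append(text)
        size += len(text) + 1
    if current:
        yield "\n".join(current)


def docx_bytes_to_chunks(data: bytes, max_chars: int = 1000) -> Iterator[str]:
    """Parse a .docx held in memory (no temporary file) and yield text chunks."""
    import docx  # provided by the python-docx package

    document = docx.Document(io.BytesIO(data))
    return chunk_paragraphs((p.text for p in document.paragraphs), max_chars)
```

Each yielded chunk can then be wrapped in a Document and added to the index, so the full file never needs to be written to disk.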

Eventually, your Google Drive reader should look like this. Here you can easily pass in different auth options, or a credentials instance itself, along with the doc readers configuration. You might notice that the doc readers can also be used independently: if you need to read only one type of document, you simply provide the doc IDs and get LlamaIndex documents back.

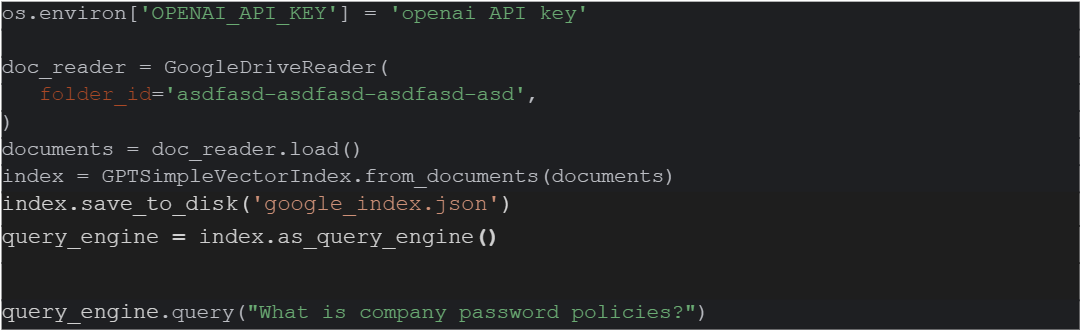

That is pretty much all you need to crawl Google Docs and generate LlamaIndex-based documents. Once you are done with documents, it is easy to build an index, or vectorize documents and query the knowledge information you have.

By default, LlamaIndex uses an OpenAI LLM, requiring only an OpenAI API key to get started. However, the tool is not limited to this model alone. In fact, LlamaIndex features a range of tools for connecting to various LLMs, providing users with greater flexibility and choice in their search capabilities. In the example below, I use a simple vector index that stores vectors in a basic JSON file. With this approach, multiple indexes can be combined into a single comprehensive index, with routing guidelines established for every section of the index.
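To illustrate what a simple JSON-backed vector index does conceptually, here is a toy sketch in plain Python. It is not LlamaIndex's implementation: the `JsonVectorIndex` class is hypothetical, and the hashing-based `embed` function is a crude stand-in for a real embedding model, used only so that the example runs without an API key.

```python
import json
import math
import re
import zlib
from typing import List, Tuple

DIM = 256  # size of the toy embedding space (arbitrary choice)


def embed(text: str) -> List[float]:
    """Toy embedding: hash each token into a bucket, then L2-normalize."""
    vector = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        vector[zlib.crc32(token.encode("utf-8")) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]


class JsonVectorIndex:
    """Keeps (text, vector) pairs in memory and persists them as JSON."""

    def __init__(self):
        self.entries = []

    def add(self, text: str) -> None:
        self.entries.append({"text": text, "vector": embed(text)})

    def query(self, question: str, top_k: int = 1) -> List[Tuple[float, str]]:
        """Return the top_k entries ranked by cosine similarity."""
        q = embed(question)
        scored = [
            (sum(a * b for a, b in zip(q, entry["vector"])), entry["text"])
            for entry in self.entries
        ]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]

    def save(self, path: str) -> None:
        with open(path, "w", encoding="utf-8") as handle:
            json.dump(self.entries, handle)

    @classmethod
    def load(cls, path: str) -> "JsonVectorIndex":
        index = cls()
        with open(path, encoding="utf-8") as handle:
            index.entries = json.load(handle)
        return index
```

In a real setup, the retrieved text would be passed to the LLM as context for answering the question. The linear scan over every stored vector is also why a dedicated vector database becomes attractive as the corpus grows.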

While this is a robust and viable option, there are still a few tweaks that can be applied to the indexing process to optimize performance.

- Instead of GPTSimpleVectorIndex, a proper vector database (Pinecone, Qdrant, etc.) could be used to speed up query performance.

- You could play around with different index types that better fit your purpose. (Here are several index use cases to check out.) On top of that, you can use query engines for query transformation to make your requests to the LLM even clearer.

- Indexes could be separated by knowledge domain and combined through a composable graph, which improves the accuracy and performance of queries.

Before closing this section, I would like to share a few tips and tricks for deploying LlamaIndex on AWS, including some best practices we have found to be effective:

- Crawling and indexing is a recurring job that should be run on a schedule. For small data sources, AWS Lambda can be a good option. For more prolonged crawling, consider using tools like Airflow, multiple Lambda invocations, or Amazon ECS tasks. We found Amazon ECS tasks to be the easiest solution to implement, and we recommend using Amazon EventBridge rules for scheduling.

- The index querying service can be handled by AWS Lambda, as it simply performs queries on the indexed data.

- For messenger bots like Slack or WhatsApp, a bidirectional connection is needed in order to send and receive messages. We found that Amazon ECS containers provide a reliable and effective solution for managing these connections.
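As a sketch of the scheduling recommendation above, the snippet below wires an EventBridge rule to an ECS task using boto3's `put_rule` and `put_targets` calls. The helper names, the target ID, the Fargate launch type, and the network settings are assumptions for illustration; the ARNs and subnets must come from your own account.

```python
from typing import List


def build_schedule_target(cluster_arn: str, task_definition_arn: str,
                          role_arn: str, subnets: List[str]) -> dict:
    """Describe an ECS task as an EventBridge target (Fargate is assumed)."""
    return {
        "Id": "crawler-task",  # hypothetical target identifier
        "Arn": cluster_arn,
        "RoleArn": role_arn,   # role that EventBridge assumes to run the task
        "EcsParameters": {
            "TaskDefinitionArn": task_definition_arn,
            "TaskCount": 1,
            "LaunchType": "FARGATE",
            "NetworkConfiguration": {
                "awsvpcConfiguration": {
                    "Subnets": subnets,
                    "AssignPublicIp": "DISABLED",
                }
            },
        },
    }


def schedule_crawler(events_client, rule_name: str,
                     schedule_expression: str, target: dict) -> None:
    """Create or update the rule, then attach the ECS task as its target.

    `events_client` is expected to be boto3.client("events").
    """
    events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule_expression,  # e.g. "rate(1 day)"
        State="ENABLED",
    )
    events_client.put_targets(Rule=rule_name, Targets=[target])
```

A daily crawl would then be a call such as `schedule_crawler(boto3.client("events"), "drive-crawler-daily", "rate(1 day)", target)`, where the rule name is a placeholder.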

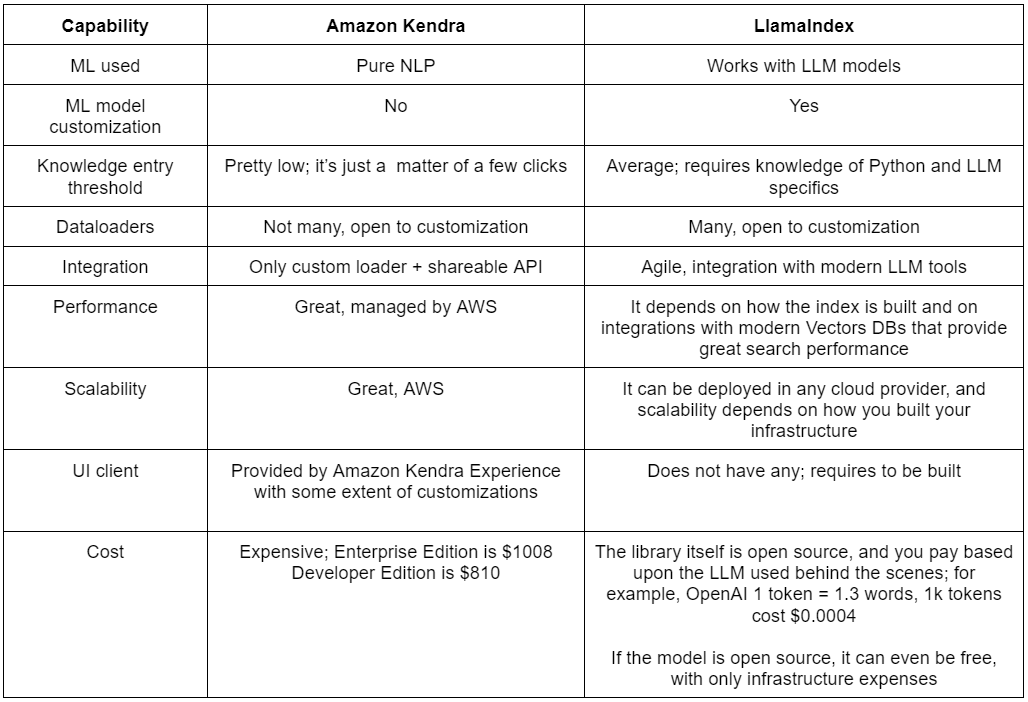

Amazon Kendra vs. LlamaIndex

We have evaluated both solutions and their unique strengths and weaknesses. I have compiled these factors into a comprehensive table for easy comparison.

Conclusion

With a comprehensive understanding of the strengths and weaknesses of both Amazon Kendra and LlamaIndex, it’s up to you to decide which one to use, based on your specific business requirements and capabilities.

Businesses seeking to augment their search capabilities without the intricacies of constructing complex NLP models and crawlers can consider Amazon Kendra as a viable solution. It features a user-friendly interface coupled with potent machine learning capabilities. Amazon Kendra enables businesses to swiftly index and search through various data sources without demanding extensive technical expertise or resources.

Despite potential budget constraints, businesses possessing technical expertise can build dynamic, adaptable solutions using LlamaIndex. This robust tool leverages the full potential of LLMs, facilitating the creation of autonomous agents capable of performing various tasks, such as search and calculations, in response to natural language requests. With its versatile capabilities, LlamaIndex enables businesses to maximize their data utility without overstretching their financial resources.

At present, the Provectus team is enhancing our knowledge base assistant by integrating agents to further improve its intelligent search capabilities. We are also invested in analyzing and evaluating different Large Language Models (LLMs).

Stay tuned for more insights!