October 7, 2022


Data Quality and MLOps: Deploying Reliable ML Models in Production

Author:

Provectus, AI-first consultancy and solutions provider.

Today, organizations in sectors as diverse as finance and manufacturing want to tap into artificial intelligence to gain a competitive edge and streamline their operations. They hire multiple teams of data scientists and ML engineers and equip them with the best tools to build and deploy models quickly and efficiently. Companies invest in large-scale ML infrastructure projects to create a robust foundation for their AI initiatives, yet many still find it hard to implement AI at scale.

This article takes a closer look at certain factors that impact AI projects, including: data quality for building ML models; specifics of how data and models are tested; and availability of data validation mechanisms in MLOps. We begin by explaining why ML models cannot be deployed to production without a clear Data QA strategy, recommend ways of organizing your data teams around product features to make them fully responsible for Data as a Product, and circle back to designing Data QA components as an essential part of your MLOps foundation.

Data Quality and Why It Matters

Data scientists and ML engineers fully understand the concept of “garbage in, garbage out.” When nonsense data is entered, you get nonsense output. But data can be boring, and most IT professionals assigned to AI projects would rather build models than labor over mundane data work on a daily basis.

However, giving data low priority is a critical error.

According to “Data Cleaning: Overview and Emerging Challenges,” the most advanced algorithms compete for improved accuracy within a fraction of a percent, while data cleansing contributes 20x more to the quality of the final solution. A paper by Google entitled “Everyone wants to do the model work, not the data work: Data Cascades in High-Stakes AI” emphasizes the importance of data, stressing that:

- Finding errors in data early on is critical

- Even small unmanaged errors made in the early stages of workflow can lead to significant degradation of a model’s performance in production

- Metrics computed during model testing measure not only the models and algorithms in isolation, but the entire system, including data and its processing

In other words, you can keep tweaking your models, but if your data is irrelevant and dirty and you do nothing to fix it, your AI solution is bound to produce less-than-accurate results.

With this in mind, let’s take a look at a typical ML workflow.

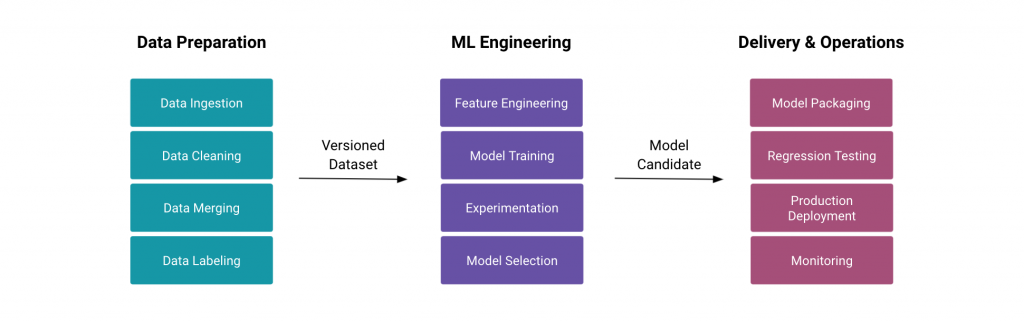

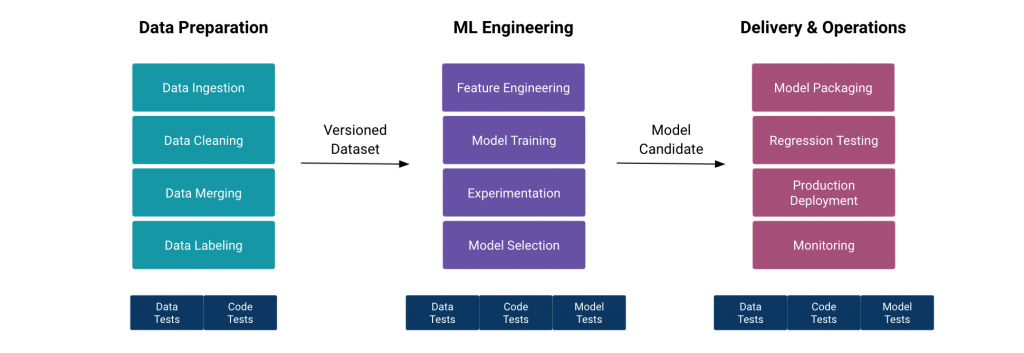

It begins with a data preparation stage (Ingestion, Cleaning, Merging, Labeling) that produces a versioned dataset ready for ML engineering. In the ML engineering stage, feature engineering, model training, experimentation, and model selection take place before the model is promoted to operations.

Every stage of the machine learning lifecycle should be covered with data tests, code tests, and model tests. Data tests are especially important, because they encode part of the business logic.

The theory behind data quality is not especially challenging, but ensuring the integrity of your data may be harder than you think. If you lack the right data in the first place and, most importantly, do not have a framework for kicking off the Data QA process, you may be setting yourself up for failure.

Challenges and Solutions to Data Quality Problems

#1 How Do I Find the Right Data for ML Models?

Before you start assessing the quality of your data, make sure you have the right data to build your machine learning models.

Organizations realize the importance of having their data properly collected, stored, and processed. They invest billions of dollars in data warehouses, data lakes, and analytics and machine learning platforms. However, when they need to find a reliable data source for AI/ML, they often find themselves at a crossroads.

Choosing the right data requires an understanding of the business problem, and then framing the machine learning problem as a key component in the business context. You first need to look at your available data and do an exploratory analysis.

More often than not, businesses start working with the wrong data. Inconsistent, incomplete, or outdated data always leads to incorrect assumptions and skewed problem framing in the early stages of development.

To illustrate the point, imagine that you have a dataset called “User Profile” with 200+ features uploaded to Amazon S3. Without additional metadata, you will not be able to:

- Ensure that the dataset represents the full population

- Understand the data’s full lineage and all the transformations, aggregations, and filtering that have been done

- Locate the dataset’s owner and those responsible for SLAs, versioning, and backward compatibility

- Check if the dataset has passed any quality checks

Your investment in data and machine learning can be easily squandered by data access and discoverability issues, and by the way you use your tools to look at data.

So, how do you find the right data for your machine learning models?

It all begins with management, not technologies and tools.

By default, monolithic data lakes and data warehouses may not be the perfect solutions for ensuring data quality, due to:

- Lack of domain context in a centralized data engineering team. Usually, a specialized team of data engineers who implement ETL from multiple sources into a data lake is in charge of all data pipelines and all data validations for all products. If these engineers do not understand the business context, they cannot test the data properly.

- Lack of ownership and product thinking of data. What will happen to your data pipelines if a web developer decides to change the column type from Number to String? Web developers may not be aware that RDS transactions and clickstream events are being used for critical real-time decision-making by your sales and marketing teams.

The first solution is to migrate to a data mesh. Data mesh brings together distributed domain-driven architecture, self-serve platform design, and product thinking in a single approach to data.

Here are a few advantages of data mesh compared to data lakes and data warehouses:

- Brings data closer to domain context. The mesh paradigm recommends grouping data engineers and ML engineers in product teams, to ensure that data and data pipelines are owned by domain experts.

- Introduces the concept of Data as a Product and data contracts. Much as product teams design, version, and manage REST APIs, domain teams become fully responsible for keeping their data valid, consistent, and up to date.
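A data contract can start as something as small as a versioned schema that the producing team publishes and checks before shipping data. The sketch below assumes rows arrive as Python dicts; `ColumnSpec`, `CLICKSTREAM_CONTRACT_V1`, and `validate` are hypothetical names for illustration, not part of any data mesh tool. It shows how a Number-to-String column change would be caught by the producing team instead of silently breaking downstream consumers.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple, Union


@dataclass(frozen=True)
class ColumnSpec:
    """Expected type(s) and nullability of one column in a data product."""
    dtype: Union[type, Tuple[type, ...]]
    nullable: bool = False


# Hypothetical version 1 of the contract for a "clickstream_events" data product.
CLICKSTREAM_CONTRACT_V1: Dict[str, ColumnSpec] = {
    "user_id": ColumnSpec(int),
    "event_type": ColumnSpec(str),
    "order_total": ColumnSpec((int, float), nullable=True),
}


def validate(rows: List[Dict[str, Any]], contract: Dict[str, ColumnSpec]) -> List[str]:
    """Return contract violations for a batch of rows; an empty list means valid."""
    violations = []
    for idx, row in enumerate(rows):
        for col, spec in contract.items():
            if col not in row:
                violations.append(f"row {idx}: missing column '{col}'")
            elif row[col] is None:
                if not spec.nullable:
                    violations.append(f"row {idx}: '{col}' is null")
            elif not isinstance(row[col], spec.dtype):
                violations.append(f"row {idx}: '{col}' has type {type(row[col]).__name__}")
    return violations


good = [{"user_id": 1, "event_type": "purchase", "order_total": 19.99}]
# Upstream, a web developer changes order_total from a number to a string:
bad = [{"user_id": 1, "event_type": "purchase", "order_total": "19.99"}]
print(validate(good, CLICKSTREAM_CONTRACT_V1))  # []
print(validate(bad, CLICKSTREAM_CONTRACT_V1))   # ["row 0: 'order_total' has type str"]
```

Versioning the contract lets consumers migrate to intentional schema changes on their own schedule, much like clients of a versioned REST API.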

The second solution is to invest in a global data catalog that can serve as an entry point for your product managers, and data and ML engineers.

A data catalog is a critical piece missing from most reference architectures. There is no clear leader in the market today, but Amundsen, Apache Atlas, and DataHub are worth mentioning.

Modern data catalogs should support end-to-end lineage, from raw data to ML models, and they should provide quality metadata for every data asset. They should help engineers easily answer the following questions:

- Does a particular data point exist? Where is it?

- What is the data’s source of truth?

- Which individual or team is the owner of this data?

- Who are the users of the data?

- Are there any existing assets that can be reused?

- Can I trust this data?

#2 How Do I Get Started with Data QA for ML?

Let’s say you have a valid source of data to use in your ML models. How do you start testing this data to ensure accuracy and efficiency of the models? We can go even further and expand this question:

- What exactly do you need to test?

- How can you approach data testing in the most efficient, repeatable, and reproducible manner?

- Who should test data and ML models, given that ML QA and Data QA roles are scarce (or nonexistent altogether)?

The bottom line is:

Even if your company understands the importance of data quality assurance, it can be challenging to get started, because the field is new and the terrain is a bit wild.

For those who have been working with data for a while, default data quality checks are nothing new. Data analysts usually validate data for duplicates, missing values, format errors, and different types of integrity lapses before generating a report.

The problem with this traditional approach is that most of the work is done as one-off scripts, in notebooks or SQL clients. When the number of SQL scripts reaches a critical mass, they become difficult to manage, support, and operationalize. You cannot efficiently scale your data validations if your data is spread across Apache Kafka, Spark processing, and data lakes before ending up in Snowflake, RDS, and ML pipelines. There is no way to holistically reuse and maintain even default quality rules as SQL scripts across all those systems.
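One way to move beyond one-off scripts is to package the default checks as a single parameterized function that every pipeline can call. The following is a minimal sketch over a list of Python dicts; `basic_quality_report` and its parameters are hypothetical, and in practice a library such as Deequ or Great Expectations would play this role.

```python
import re
from collections import Counter


def basic_quality_report(rows, key, required, patterns):
    """Run default checks on a list of dict rows.

    key:      column that must be unique (duplicate check)
    required: columns that must not be null or empty (missing-value check)
    patterns: {column: regex} that non-empty values must fully match (format check)
    """
    key_counts = Counter(row.get(key) for row in rows)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1)
    missing = {
        col: sum(1 for row in rows if row.get(col) in (None, ""))
        for col in required
    }
    format_errors = {
        col: [i for i, row in enumerate(rows)
              if row.get(col) not in (None, "") and not re.fullmatch(regex, str(row[col]))]
        for col, regex in patterns.items()
    }
    return {"duplicates": duplicates, "missing": missing, "format_errors": format_errors}


customers = [
    {"id": 1, "email": "ana@example.com", "country": "PT"},
    {"id": 2, "email": "not-an-email", "country": ""},
    {"id": 2, "email": "bo@example.com", "country": "SE"},
]
report = basic_quality_report(
    customers, key="id", required=["email", "country"],
    patterns={"email": r"[^@\s]+@[^@\s]+\.[^@\s]+"},
)
print(report)
```

Because the rules are parameters rather than hard-coded SQL, the same function can be reused for every dataset that flows through Python-based pipelines.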

More advanced methods for data validation include tests of data distributions; for example, to ensure that the distribution of your training dataset matches the distribution of your production data. Other options are:

- Kolmogorov-Smirnov tests, as well as Chi-squared tests for categorical features

- AutoML approaches that automatically choose the best outlier detection algorithm for a particular type of data

- Heuristics-based automatic constraint generation and data profiling for complex dependencies, which are worth considering but are still at an early R&D stage in the community

We recommend you start with the basics and then explore more advanced methods.

Sorting out data ownership issues, introducing best practices for Data as a Product, and implementing the most common data quality checks, versioning, and SLAs will make a huge difference for your data, ML, and Ops teams.

Now let’s delve into ways you can test your data.

Manual data quality checks are tedious, time consuming, and error-prone, especially if you have to write validation rules from scratch. The required efforts are multiplied if:

- Your datasets are extensively used but the documentation is poor

- Schemas are continually evolving

We recommend an unsupervised approach that can be summarized in four steps:

- Run data profilers that produce summaries per column

- Use the summaries to generate suggested data checks; for example, that a text column actually holds numeric values, that a column contains no null values, or that values fall within a certain range

- Evaluate these generated constraints on the holdout subset of data

- After taking all these automated steps, data engineers can review, adjust, accept, or decline these checks, and include them in a test suite
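The four steps can be sketched in plain Python as follows. This is a simplified illustration of the idea, not how Deequ or any other profiler implements it: `profile`, `suggest_constraints`, and `evaluate` are hypothetical helpers, and the only suggestions generated are non-null, numeric (including numbers stored as text), and observed-range checks.

```python
def _as_float(value):
    """Parse value as a number (numeric strings included); None if it is not numeric."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None


def profile(rows):
    """Step 1: per-column summaries (null count, numeric-ness, observed range)."""
    summaries = {}
    for col in sorted({c for row in rows for c in row}):
        present = [row[col] for row in rows if row.get(col) is not None]
        parsed = [_as_float(v) for v in present]
        numeric = bool(present) and all(p is not None for p in parsed)
        summaries[col] = {
            "nulls": len(rows) - len(present),
            "numeric": numeric,
            "min": min(parsed) if numeric else None,
            "max": max(parsed) if numeric else None,
        }
    return summaries


def suggest_constraints(summaries):
    """Step 2: turn summaries into candidate checks."""
    constraints = []
    for col, s in summaries.items():
        if s["nulls"] == 0:
            constraints.append(("not_null", col, None))
        if s["numeric"]:
            constraints.append(("numeric", col, None))
            constraints.append(("in_range", col, (s["min"], s["max"])))
    return constraints


def evaluate(constraints, holdout):
    """Step 3: check each candidate constraint on a holdout subset."""
    results = {}
    for kind, col, arg in constraints:
        present = [row[col] for row in holdout if row.get(col) is not None]
        if kind == "not_null":
            ok = len(present) == len(holdout)
        elif kind == "numeric":
            ok = all(_as_float(v) is not None for v in present)
        else:  # "in_range"
            low, high = arg
            nums = [_as_float(v) for v in present]
            ok = all(n is not None and low <= n <= high for n in nums)
        results[(kind, col)] = ok
    return results


train = [{"age": "34", "country": "DE"}, {"age": "51", "country": "US"},
         {"age": "27", "country": None}]
holdout = [{"age": "45", "country": "FR"}, {"age": "62", "country": "US"}]
candidates = suggest_constraints(profile(train))
print(evaluate(candidates, holdout))
# {('not_null', 'age'): True, ('numeric', 'age'): True, ('in_range', 'age'): False}
```

Here the range check learned from three training rows fails on the holdout, which is exactly the kind of suggestion an engineer would widen or reject during the review step.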

Note that the tools for automating this process are still in their infancy. New types of profilers and constraint suggestion algorithms are in the process of development.

There are several tools you can use, though.

Deequ is a library built on top of Apache Spark that enables you to profile datasets and to define and enforce your own data quality constraints. Deequ can help you address the following issues:

- Missing values can lead to production system failures that require non-null values (NullPointerException)

- Changes in data distribution can lead to unexpected outputs of machine learning models

- Aggregations of incorrect data can lead to misinformed business decisions

Another useful tool is Great Expectations (GE), a lightweight, unopinionated data platform that helps data teams eliminate pipeline debt through data testing, documentation, and profiling. GE is a leader in terms of maturity, developer experience, community support, and adoption.

Here are a few things that GE enables you to do:

- Establish data contracts and generate data quality reports

- Integrate data quality checks as part of your ML pipelines, CI/CD, and other data routines

- Work out of the box with Pandas and Spark dataframes, Redshift, Snowflake, BigQuery, and other backends

Great Expectations lets you express declarative assertions (Expectations) about the expected format, content, and behavior of your data. Its greatest advantage over other tools is that it decouples the rules engine from the actual backend: you can maintain rules in one place and apply them to different parts of your data pipeline. As a result, GE greatly reduces the total cost of ownership for Data QA.
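The decoupling idea can be illustrated without Great Expectations itself. In the sketch below, which does not use the GE API, a hypothetical list of declarative rules is defined once and executed by two interchangeable backends: one that scans Python dicts and one that compiles each rule to SQL and runs it in an in-memory SQLite database. Both backends report the same number of unexpected rows.

```python
import sqlite3

# Hypothetical rule set, defined once and independent of any storage backend.
RULES = [
    {"expect": "not_null", "column": "user_id"},
    {"expect": "between", "column": "age", "min": 0, "max": 120},
]


def unexpected_in_memory(rule, rows):
    """Count rows violating a rule, evaluated on Python dicts."""
    col = rule["column"]
    if rule["expect"] == "not_null":
        return sum(1 for r in rows if r.get(col) is None)
    if rule["expect"] == "between":
        return sum(1 for r in rows
                   if r.get(col) is not None and not rule["min"] <= r[col] <= rule["max"])
    raise ValueError(f"unknown rule: {rule['expect']}")


def unexpected_in_sql(rule, conn, table):
    """Count rows violating the same rule, compiled to SQL and run in the database."""
    col = rule["column"]  # identifiers assumed trusted; values are bound as parameters
    if rule["expect"] == "not_null":
        sql, params = f'SELECT COUNT(*) FROM "{table}" WHERE "{col}" IS NULL', ()
    elif rule["expect"] == "between":
        sql = (f'SELECT COUNT(*) FROM "{table}" '
               f'WHERE "{col}" IS NOT NULL AND "{col}" NOT BETWEEN ? AND ?')
        params = (rule["min"], rule["max"])
    else:
        raise ValueError(f"unknown rule: {rule['expect']}")
    return conn.execute(sql, params).fetchone()[0]


rows = [{"user_id": 1, "age": 34}, {"user_id": None, "age": 150}, {"user_id": 3, "age": None}]
conn = sqlite3.connect(":memory:")
conn.execute('CREATE TABLE "users" ("user_id" INTEGER, "age" INTEGER)')
conn.executemany('INSERT INTO "users" VALUES (:user_id, :age)', rows)
print([unexpected_in_memory(r, rows) for r in RULES])        # [1, 1]
print([unexpected_in_sql(r, conn, "users") for r in RULES])  # [1, 1]
```

Adding a third backend means writing one more executor; the rules themselves stay untouched.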

Note: To learn more about Great Expectations and Provectus’ contributions to the community’s efforts to ensure data quality, check out Data Quality Assurance with Great Expectations and Kubeflow Pipelines.

Data as Part of Model Testing

Now that you know the basics of Data QA, let’s look at how data and ML models can be tested interdependently.

Any machine learning model can be treated as a black-box application: you feed your test datasets to the model and then analyze the output datasets.

In theory, you should have three datasets: one each for training, testing, and validation. In the real world, however, you will have to handle many validation datasets, so you need a separate workflow for managing the datasets used for quality assurance of ML models.

If you are a traditional QA engineer, it will be easier for you to understand how this system works if you treat every row in the dataset as a test case, and the entire dataset as a test suite.
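Treating rows as test cases can be expressed directly in code: run the model over every row of a dataset and collect the rows whose prediction differs from the expected label. In this minimal sketch, `toy_model` is a hypothetical stand-in for a real model, and exact-match comparison assumes a classification task; regression outputs would need a tolerance instead.

```python
from dataclasses import dataclass, field


@dataclass
class SuiteResult:
    name: str
    total: int
    failures: list = field(default_factory=list)  # (row index, expected, predicted)

    @property
    def pass_rate(self) -> float:
        return (self.total - len(self.failures)) / self.total if self.total else 1.0


def run_dataset_suite(name, model, dataset):
    """Treat each (features, expected) row as a test case and the dataset as a suite."""
    result = SuiteResult(name, len(dataset))
    for i, (features, expected) in enumerate(dataset):
        predicted = model(features)
        if predicted != expected:
            result.failures.append((i, expected, predicted))
    return result


# Hypothetical stand-in for a real model: flag large transactions as fraud.
def toy_model(features):
    return "fraud" if features["amount"] > 1000 else "ok"


golden = [({"amount": 50}, "ok"), ({"amount": 5000}, "fraud"), ({"amount": 999}, "fraud")]
result = run_dataset_suite("golden_uat", toy_model, golden)
print(result.failures)             # [(2, 'fraud', 'ok')]
print(round(result.pass_rate, 2))  # 0.67
```

The same runner works for golden, regression, edge-case, or adversarial datasets; only the dataset and the acceptable pass rate change.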

For example, here are a few datasets you may come across:

- Golden datasets for user acceptance testing (UAT)

- Security datasets with adversarial inputs

- Production traffic replay datasets

- Regression datasets

- Datasets for bias

- Datasets for edge cases

Bear in mind that the datasets used for model testing need to be tested separately, too.

There is some level of duality in model testing: When you test any model, you also test all datasets that the model was built from. For example, you can look at model bias as an algorithmic bias or a dataset bias, but the important thing is to check for both.

Speaking of testing for bias…

Bias is a disproportionate weight in favor of or against an idea or thing, usually in ways that are closed-minded, prejudicial, or unfair. There are more than ten different types of bias.

For example, when you train a model, the training dataset you are working with may not represent the actual population, which is called selection bias. Or you may phrase annotation questions for your data with a slant that favors one group over another, which is called a framing effect. With systematic bias, the errors in the data are consistent and repeatable rather than random.

In some cases, there is bias for or against outliers or filtered data. Your model can also be biased toward default values used for replacing missing values.

You can deal with most types of bias during the Data QA process by constructing specific data validation rules, or by analyzing training metrics and metadata. More complex bias issues caused by the personal perceptions of data scientists and framing effects from data annotation questions can be resolved by data analysts and subject matter experts.
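As an illustration, two of the biases mentioned above can be expressed as simple validation rules: a group that is over- or under-represented compared with a reference population (selection bias), and a large share of rows filled with a default value. The reference shares, tolerance, and column names below are hypothetical; real thresholds would come from domain experts.

```python
from collections import Counter


def representation_gaps(rows, column, reference_shares, tolerance=0.05):
    """Groups whose share in the data deviates from a reference population by > tolerance."""
    counts = Counter(row[column] for row in rows)
    total = sum(counts.values())
    gaps = {}
    for group, expected_share in reference_shares.items():
        observed_share = counts.get(group, 0) / total
        if abs(observed_share - expected_share) > tolerance:
            gaps[group] = round(observed_share - expected_share, 3)
    return gaps


def default_value_share(rows, column, default):
    """Fraction of rows where a missing value was replaced by a default."""
    return sum(1 for row in rows if row[column] == default) / len(rows)


# Hypothetical training sample: 80 urban rows (30 with income imputed as 0), 20 rural rows.
rows = ([{"region": "urban", "income": 0 if i < 30 else 52000} for i in range(80)]
        + [{"region": "rural", "income": 41000} for _ in range(20)])
print(representation_gaps(rows, "region", {"urban": 0.6, "rural": 0.4}, tolerance=0.1))
# {'urban': 0.2, 'rural': -0.2}
print(default_value_share(rows, "income", default=0))  # 0.3
```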

Now let’s talk about some of the tools you can use for model testing.

The first category is Adversarial Testing & Model Robustness:

- Cleverhans by Ian Goodfellow & Nicolas Papernot

- Adversarial Robustness Toolbox (ART), originally developed by IBM

The second category is Bias and Fairness:

- AIF360 by IBM

- Aequitas by The University of Chicago

- Amazon SageMaker Clarify

Most of these tools are used by researchers on benchmark datasets such as MNIST, and their adoption in production for real applications is limited.

Bias and fairness are a complex R&D problem that cannot be solved overnight. For a quick start, we recommend offloading your bias work to a fully managed service like Amazon SageMaker Clarify.

Note: To learn more about bias, fairness, and explainability in AI, check out Amazon AI Fairness and Explainability Whitepaper.

Data QA and MLOps

We have discussed the tools and best practices for model and data testing. Let’s see how you can implement these practices in your end-to-end MLOps platform.

To begin, let’s review what MLOps is, and how it works from a technology standpoint.

MLOps is a set of practices for smooth collaboration and communication between data scientists and operations professionals, to help manage the ML production lifecycle and enable businesses to run AI successfully.

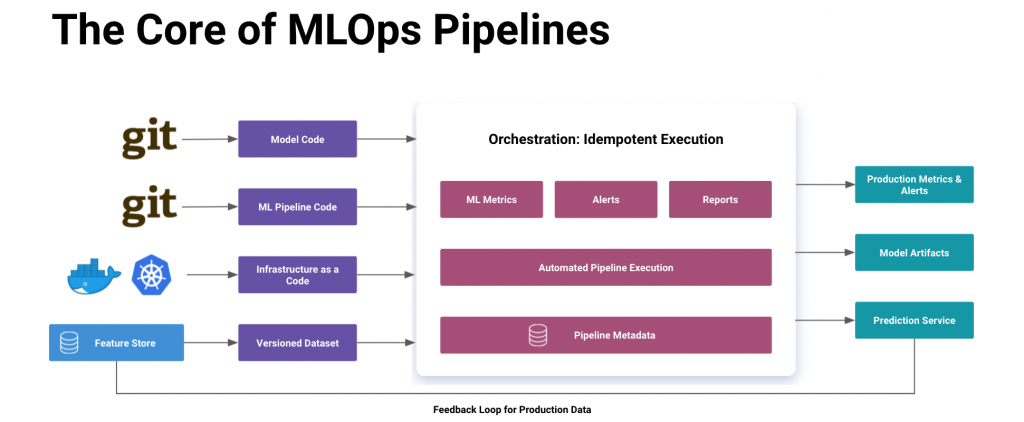

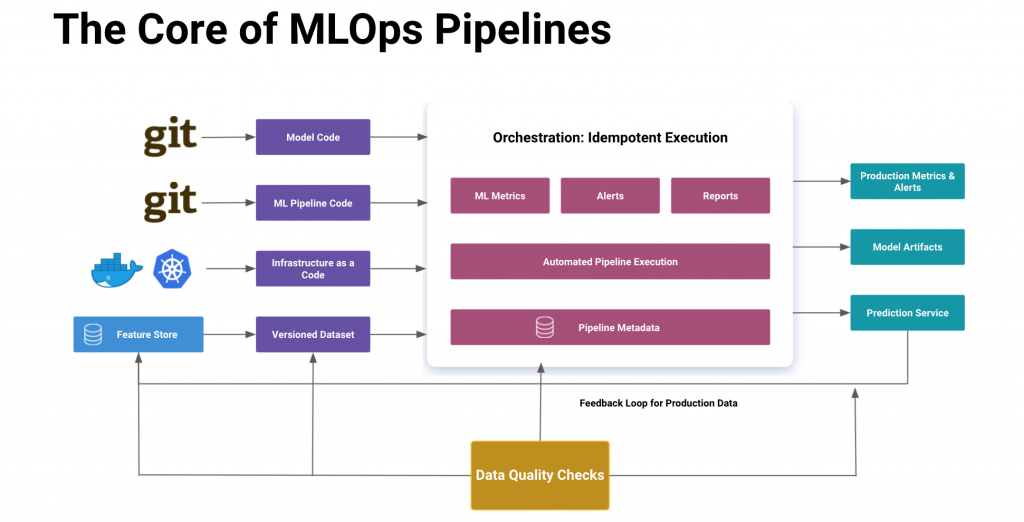

The core of MLOps is made up of four major components (inputs):

- ML model code

- ML pipeline code

- Infrastructure as Code (IaC)

- Versioned dataset

All your code needs to be stored and versioned in Git. You should also have a fixed, immutable, and versioned dataset as an input for your ML pipeline. The dataset is usually generated from a centralized feature store.
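One simple way to make a dataset version immutable and verifiable is to derive its identifier from its content. The sketch below hashes a canonical JSON serialization of each row with SHA-256, so the same data always yields the same id and any change yields a new one. It assumes rows are JSON-serializable dicts and that row order is part of the dataset identity; `dataset_version` is a hypothetical helper, not a feature store API.

```python
import hashlib
import json


def dataset_version(rows):
    """Content hash of a dataset: any change to any row produces a new version id."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the id independent of dict key order within a row
        line = json.dumps(row, sort_keys=True, separators=(",", ":"))
        digest.update(line.encode("utf-8") + b"\n")
    return digest.hexdigest()[:12]


v1 = dataset_version([{"user_id": 1, "clicks": 3}, {"user_id": 2, "clicks": 0}])
same = dataset_version([{"clicks": 3, "user_id": 1}, {"clicks": 0, "user_id": 2}])
v2 = dataset_version([{"user_id": 1, "clicks": 3}, {"user_id": 2, "clicks": 1}])
print(v1 == same, v1 == v2)  # True False
```

Recording this id alongside the Git commit of the model and pipeline code ties every trained model to the exact inputs that produced it.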

Your ML Orchestrator serves as a meta-compiler that takes these four inputs and compiles them into a single model. As with any compiler, it outputs logs, metrics, alerts, and various metadata, to be stored and analyzed.

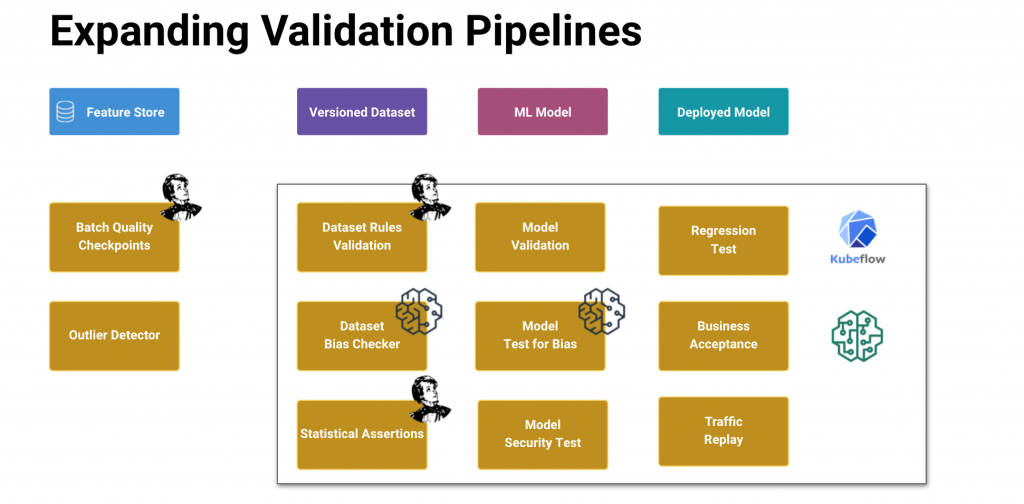

To ensure data integrity, you need to embed data quality gates into every step of your ML pipeline, from monitoring and checkpointing features in the feature store, to validating the training dataset and analyzing model training metrics, to finally, monitoring actual model predictions in production.
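Conceptually, a quality gate is a check that runs after a pipeline step and stops the pipeline when it fails. The orchestrator-agnostic sketch below assumes each step is a plain function and each gate returns a list of problems; in Kubeflow or SageMaker pipelines, the same idea would typically be implemented as separate pipeline steps. The step functions and the 0.85 accuracy threshold are hypothetical placeholders.

```python
class QualityGateError(RuntimeError):
    """Raised when a data or model quality gate blocks the pipeline."""


def run_pipeline(steps):
    """Run (name, step, gate) triples; each gate returns a list of problems."""
    artifact, completed = None, []
    for name, step, gate in steps:
        artifact = step(artifact)
        problems = gate(artifact)
        if problems:
            raise QualityGateError(f"gate after '{name}' failed: {problems}")
        completed.append(name)
    return artifact, completed


# Hypothetical steps standing in for feature loading and model training.
def load_features(_):
    return [{"amount": 12.5, "label": 0}, {"amount": 980.0, "label": 1}]


def features_gate(rows):
    return [f"row {i}: null label" for i, r in enumerate(rows) if r["label"] is None]


def train(rows):
    return {"accuracy": 0.91, "n_train": len(rows)}  # placeholder metrics


def metrics_gate(metrics):
    return [] if metrics["accuracy"] >= 0.85 else ["accuracy below 0.85"]


metrics, done = run_pipeline([
    ("load_features", load_features, features_gate),
    ("train", train, metrics_gate),
])
print(done)  # ['load_features', 'train']
```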

Implementation-wise, data validation jobs can be deployed as a separate process that periodically scans the feature store, or they can be an actual part of the Kubeflow pipeline.

As mentioned earlier, we recommend Great Expectations integrated with Kubeflow Pipelines as a day-to-day Data QA tool for developers.

We also recommend using the fully serverless components of the Amazon SageMaker platform. SageMaker Processing and Training Jobs can be used to offload heavy workloads related to data preparation, validation, and quality checks. You can rely on ready-to-use, pre-built images with frameworks like Apache Spark, or bring your own containers with other libraries. Processing Job contracts are quite flexible, making it easy to scale, manage, and monitor workloads.

Amazon SageMaker Clarify can be used to generate bias and model explainability reports. Use it as a quality gate, and to gain greater visibility into your training data and models.

Amazon SageMaker Model Monitor can help you monitor model endpoints. Configure data capture for production traffic and use it to enable data and model quality checks, and to detect bias and feature attribution drift on actual production data. Bear in mind that you can cover most of these tasks with platform tools like SageMaker Clarify or a pre-built Deequ container, but you also have the option of using your own monitoring algorithms.
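If you bring your own monitoring algorithm, it can follow a baseline-and-constraints pattern: compute statistics on the training data once, then compare each captured production batch against them. The sketch below is not the Model Monitor API; `build_baseline`, `check_batch`, and both thresholds are hypothetical, and a real monitor would track many more statistics per feature.

```python
import statistics


def build_baseline(values):
    """Summary statistics of a feature in the training data (None = missing)."""
    present = [v for v in values if v is not None]
    return {
        "mean": statistics.fmean(present),
        "stdev": statistics.stdev(present),
        "missing_rate": 1 - len(present) / len(values),
    }


def check_batch(baseline, values, max_missing_increase=0.05, max_shift_in_stdevs=3.0):
    """Compare a captured production batch against the baseline; return violations."""
    present = [v for v in values if v is not None]
    violations = []
    if 1 - len(present) / len(values) > baseline["missing_rate"] + max_missing_increase:
        violations.append("missing_rate")
    if present and abs(statistics.fmean(present) - baseline["mean"]) > (
            max_shift_in_stdevs * baseline["stdev"]):
        violations.append("mean_shift")
    return violations


baseline = build_baseline([10, 12, 11, 13, 9, 10, 12, 11])
print(check_batch(baseline, [11, 10, 12, 11]))    # []
print(check_batch(baseline, [30, 31, None, 29]))  # ['missing_rate', 'mean_shift']
```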

Conclusion

Ensuring that your data and your models are properly tested may not be an easy task, but it is necessary for ensuring that your AI solutions and ML-based applications deliver accurate results and predictions.

Here are three recommendations to help guide your team along the path to AI transformation, to become a data quality-focused organization:

- Wait to deploy ML models to production until you have a clear Data QA strategy in place.

- As a leader, focus on organizing data teams around product features, to make your teams fully responsible for Data as a Product. A lot of problems can be solved on managerial and cultural levels.

- Design Data QA components as essential components of your MLOps foundation. This cannot be done in isolation by a separate QA department, and traditional QA engineers are not trained to handle ML workloads.

Bear in mind that Data QA is only one of the practices required to ensure smooth AI adoption. To scale AI across your organization, you will need to strip away the complexities of moving models to production, handling them as part of specific AI applications, and interpreting their predictions in a business context.

AI adoption is driven by both pressure and opportunity. Start by helping your engineers, operations teams, and business units to make the most of the data they deal with. You can use the information provided here to take the first steps.

To learn more, check out the webinar Data Quality and MLOps: How to Deploy ML Models in Production.
