October 1, 2021

Bringing Feast 0.10 to AWS

Author: Provectus, an AI-first consultancy and solutions provider.

IT organizations that build production ML systems want to serve multiple models efficiently, in real time, and at scale. They need feature stores that provide access to both offline training data and online serving data, and that break down data silos by letting teams share and reuse features across the organization. By adopting a feature store, they hope to reduce Total Cost of Ownership while accelerating Time to Value for their ML products.

However, feature stores are not easy to operate, and they often add significant infrastructure overhead.

Provectus shared its vision of feature stores back in December 2020. In an article entitled Feature Store as a Foundation for Machine Learning, we argued that a feature store should be delivered as a lightweight API, or even a library, that integrates cleanly with existing infrastructure. It should not create additional infrastructure silos by bringing opinionated technologies like Apache Kafka or Delta Lake into the picture. If your data platform is built on AWS and most of your offline features are stored in Amazon Redshift, you should be able to start using feature store APIs on top of that infrastructure.

Our vision of feature stores aligns closely with those of Mike DelBalso of Tecton and Willem Pienaar of Feast, and Provectus has joined forces with the Feast team to bring Feast to AWS.

In this article, we share the results of our collaboration through a short demo of Feast 0.10 on AWS.

Demo

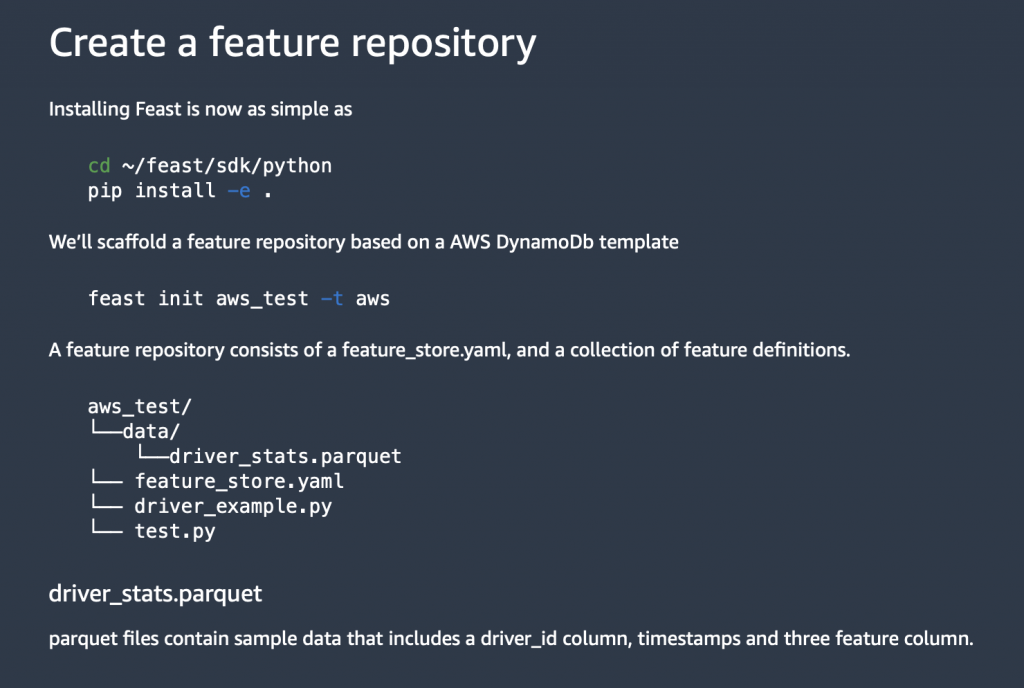

Below, we walk through creating a feature store repository and deploying Feast on AWS.

We start in Amazon SageMaker Studio, in an AWS account where the Feast 0.10 SDK is already installed.

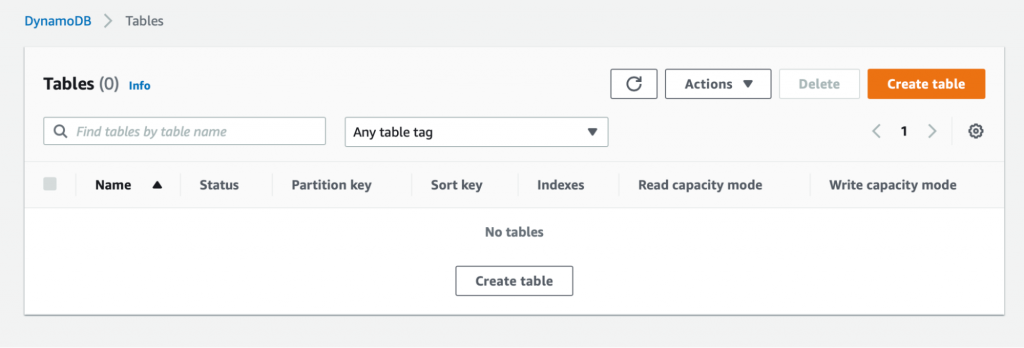

We use an Amazon S3 folder to store the data. The DynamoDB console is empty for now; once we apply our Feast definitions, we expect DynamoDB tables to be created and kept in sync with the online store configuration and the feature views defined in Feast.

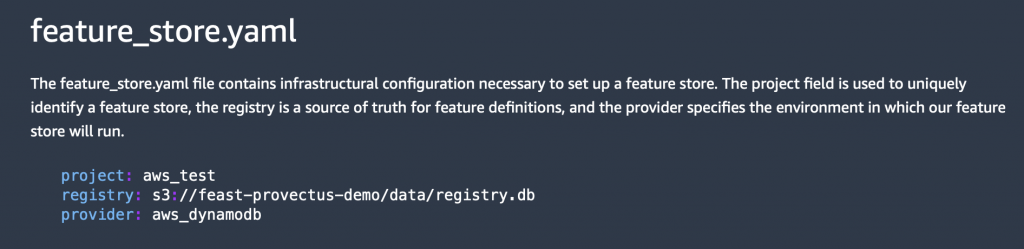

We run through the default Feast demo with one modification: we use the AWS provider, with DynamoDB as the online store and Amazon S3 as the registry that holds the feature and data source definitions.

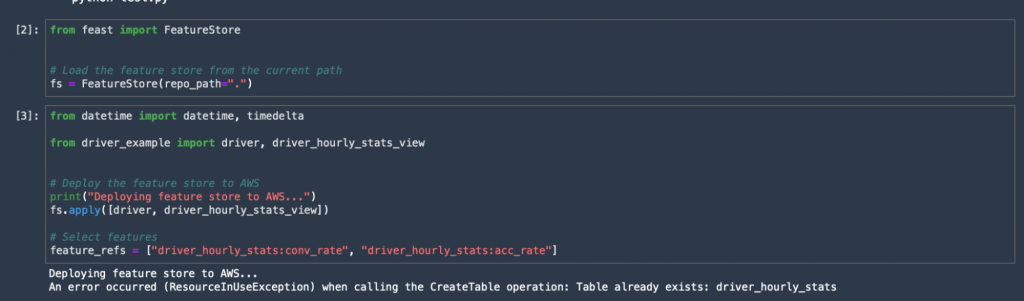

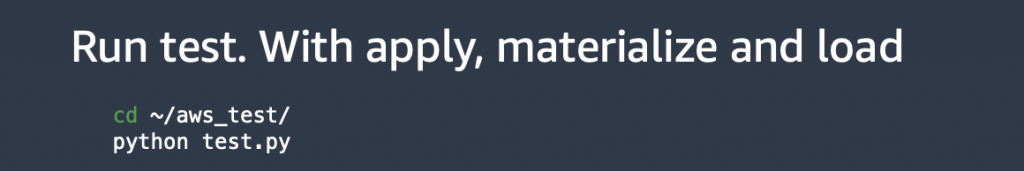
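For reference, a repository configured this way might carry a `feature_store.yaml` along the lines of the sketch below, which writes one using only the Python standard library. This is a minimal sketch, assuming the configuration keys (`project`, `registry`, `provider`, `online_store` with `type: dynamodb`, `offline_store` with `type: file`) documented for later Feast releases; they may not match Feast 0.10 exactly. The project name, S3 bucket, and region are hypothetical placeholders.

```python
from pathlib import Path
import tempfile

# Hypothetical placeholder values: substitute your own project, bucket, and region.
PROJECT = "my_feature_repo"
REGISTRY_URI = "s3://my-feast-bucket/registry.db"
REGION = "us-west-2"

# Assumed feature_store.yaml schema (from later Feast docs): an S3-backed
# registry, DynamoDB as the online store, local Parquet files as the offline store.
CONFIG_TEMPLATE = """\
project: {project}
registry: {registry}
provider: aws
online_store:
  type: dynamodb
  region: {region}
offline_store:
  type: file
"""


def write_feature_store_yaml(repo_dir: Path) -> Path:
    """Write feature_store.yaml into repo_dir and return its path."""
    repo_dir.mkdir(parents=True, exist_ok=True)
    path = repo_dir / "feature_store.yaml"
    path.write_text(
        CONFIG_TEMPLATE.format(project=PROJECT, registry=REGISTRY_URI, region=REGION)
    )
    return path


if __name__ == "__main__":
    repo = Path(tempfile.mkdtemp()) / "feature_repo"
    print(write_feature_store_yaml(repo).read_text())
```

Keeping the whole configuration in one small file is what lets the same feature definitions target a local setup or AWS by swapping only the provider and store entries.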

With the baseline Feast objects defined, we call apply to push that configuration to AWS and provision the resources we need.

The Feast AWS provider has created the DynamoDB tables required for the online store. Under the hood, Feast also creates a registry in the S3 folder to store the registered definitions.

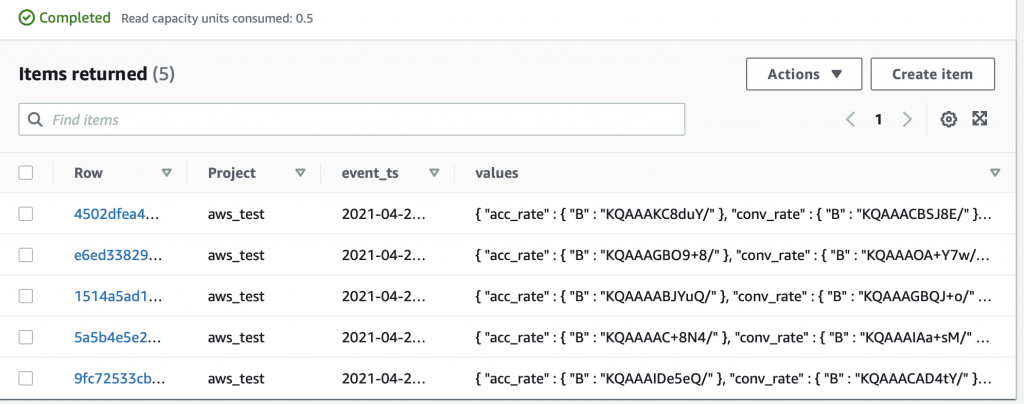
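Conceptually, apply reconciles the declared definitions with what already exists: it records the definitions in the registry and provisions one online table per feature view. The sketch below is a toy, stdlib-only model of that reconciliation, not Feast's implementation. The `FeatureView` dataclass, `ToyRegistry`, `plan_apply`, and the `{project}.{feature_view}` table-naming scheme are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class FeatureView:
    """Hypothetical, simplified stand-in for a Feast feature view definition."""
    name: str
    entity: str
    features: Tuple[str, ...]
    ttl_seconds: int


@dataclass
class ToyRegistry:
    """Toy registry mapping feature view names to their definitions."""
    feature_views: Dict[str, FeatureView] = field(default_factory=dict)


def table_name(project: str, view: FeatureView) -> str:
    # Hypothetical naming scheme for a feature view's online table.
    return f"{project}.{view.name}"


def plan_apply(
    project: str, declared: List[FeatureView], existing_tables: Set[str]
) -> Tuple[Set[str], Set[str]]:
    """Return (tables_to_create, tables_to_delete) for this project only."""
    wanted = {table_name(project, v) for v in declared}
    # Only tables owned by this project are candidates for deletion.
    owned = {t for t in existing_tables if t.startswith(project + ".")}
    return wanted - existing_tables, owned - wanted


def apply(
    project: str,
    declared: List[FeatureView],
    registry: ToyRegistry,
    existing_tables: Set[str],
) -> Set[str]:
    """Record definitions in the registry and return the resulting table set."""
    to_create, to_delete = plan_apply(project, declared, existing_tables)
    registry.feature_views = {v.name: v for v in declared}
    return (existing_tables | to_create) - to_delete
```

For example, applying a single view named `driver_hourly_stats` (modeled on the default Feast example) in project `demo`, when `demo.old_view` and `other.x` already exist, plans one table to create and one to delete while leaving the other project's table alone. Keeping the plan a pure function makes the diff easy to inspect before any infrastructure changes.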

Next, we call materialize_incremental, which synchronizes the online store with the offline store by materializing recent feature values into it. In this demo, the offline store is a locally stored Parquet file.

We call materialize_incremental and check the results: the materialized view now appears in DynamoDB.

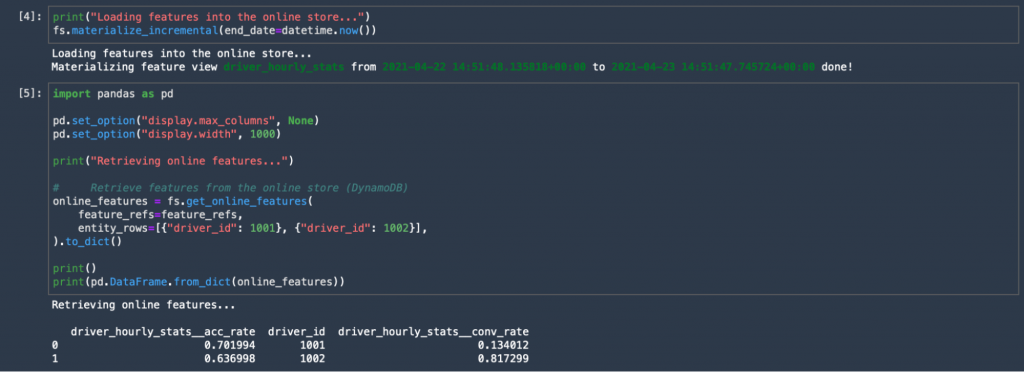
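The idea behind materialize_incremental can be illustrated with a toy model: read the offline rows whose event timestamps fall between the last materialization point and the requested end time, keep the latest row per entity key, and upsert those values into the online store. The sketch below uses plain lists and dictionaries in place of Parquet and DynamoDB; the row layout, the `driver_id` key, the one-TTL fallback window on the first run, and the `materialize_incremental_toy` function are assumptions for illustration, not Feast's code.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


def materialize_incremental_toy(
    offline_rows: List[Row],
    online_store: Dict[Any, Row],
    end: datetime,
    last_materialized: Optional[datetime],
    ttl: timedelta,
    key: str = "driver_id",
) -> Tuple[Dict[Any, Row], datetime]:
    """Upsert the latest row per entity in the window (start, end].

    Returns the updated online store and the new high-water mark (end).
    """
    # Assumed behavior: on the first run, look back one TTL from `end`.
    start = last_materialized if last_materialized is not None else end - ttl
    latest: Dict[Any, Row] = {}
    for row in offline_rows:
        ts = row["event_timestamp"]
        if not (start < ts <= end):
            continue  # outside the materialization window
        current = latest.get(row[key])
        if current is None or ts > current["event_timestamp"]:
            latest[row[key]] = row
    for entity, row in latest.items():
        existing = online_store.get(entity)
        # Never overwrite a stored value with an older one.
        if existing is None or row["event_timestamp"] >= existing["event_timestamp"]:
            online_store[entity] = dict(row)
    return online_store, end
```

Because each call returns its end time as the new high-water mark, the next call only scans rows that arrived after it, which is what makes the materialization incremental.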

Our features are now available online, and we can retrieve them through the same Feast SDK API.
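In the Feast SDK, this read goes through `FeatureStore.get_online_features`. The stdlib-only sketch below only illustrates the shape of such a lookup against a key-value store: each entity row is resolved by its key, the requested features come back column-wise, and entities missing from the store yield `None`. The `get_online_features_toy` function, the nested store layout, and the use of `feature_view:feature` strings as column names are assumptions for illustration, not Feast's API.

```python
from typing import Any, Dict, List, Optional

# Toy online store layout: {feature_view: {entity_key: {feature: value}}}
OnlineStore = Dict[str, Dict[Any, Dict[str, Any]]]


def get_online_features_toy(
    store: OnlineStore,
    feature_refs: List[str],
    entity_rows: List[Dict[str, Any]],
    key: str = "driver_id",
) -> Dict[str, List[Optional[Any]]]:
    """Return features column-wise, one value per entity row (None if missing)."""
    result: Dict[str, List[Optional[Any]]] = {key: [row[key] for row in entity_rows]}
    for ref in feature_refs:
        view, feature = ref.split(":", 1)  # e.g. "driver_hourly_stats:conv_rate"
        table = store.get(view, {})
        result[ref] = [table.get(row[key], {}).get(feature) for row in entity_rows]
    return result
```

Returning `None` for unknown entities, rather than raising, mirrors the usual expectation for online serving: a model can fall back to defaults instead of failing the whole request.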

This is a simple demo, but it opens up a lot of possibilities for future work and collaboration.

Next Steps

We encourage you to explore the roadmap for Feast 0.10 on AWS. Check out the following items to learn more:

- Amazon S3 Registry

- Amazon DynamoDB Integration

- Amazon Redshift Data Source

- AWS Glue Source with Amazon Athena Integration

- Amazon S3 Hudi Table

Have some ideas about what we can do better? Feel free to contribute to the project on GitHub. We look forward to your feedback!

And finally, if you are looking to bring an ML feature store to your organization, reach out to Provectus. We will be happy to help your team integrate a feature store and support your AI solutions.