Maximizing the Performance of Investment Products with Generative AI

Venerable simplifies access to company information with a GenAI Intelligent Search Assistant, streamlining back-office and customer-centric operations to maximize the value of customer portfolios

Venerable is a leader in the insurance industry, specializing in the acquisition and management of legacy variable annuity portfolios. The company focuses on maximizing the performance, value, and stability of these investment products through strategic asset management. With backing from experienced investors, Venerable leverages innovative technologies and financial expertise to ensure reliable and beneficial outcomes for all of their customers and stakeholders.

Challenge

Venerable recognized an opportunity to use generative AI to give employees access to information siloed in the internal knowledge base. With a GenAI-powered intelligent search interface, employees can quickly find accurate and contextually relevant answers about company policies and procedures, covering both back-office and customer-centric operations. GenAI’s accuracy, speed, and usability let employees prioritize value-adding tasks such as managing customer investment portfolios, instead of spending time navigating internal wiki pages, folders, and documents. GenAI is expected to enhance efficiency and productivity, unlocking the benefits of economies of scale, while helping Venerable improve customer satisfaction.

Solution

Provectus recognizes how important it is for businesses to start their GenAI journeys with use cases that deliver short-term value while offering long-term scalability. Accordingly, Venerable’s project started with discovery sessions with stakeholders and employees to identify the problem, expectations, and technical constraints. Intelligent search was selected as the most impactful use case, and an evaluation dataset of exemplary prompts and answers was collected. Various foundation models available on Amazon Bedrock were evaluated against the dataset to select the best model for the project. The delivered GenAI solution was validated by the client’s employees, and their feedback on real-world performance was used to improve the quality of answers.

Outcome

In an industry where every minute matters, Venerable saw a clear opportunity to use generative AI to streamline their back-office and customer-centric operations by making it easier for employees to access the company’s siloed information. By implementing a GenAI-powered intelligent search assistant, they were able to improve the speed of internal knowledge base searches by 90%. This improvement allowed employees to spend more time on tasks that improve the performance and value of customer portfolios. The result was greater employee efficiency, improved customer satisfaction, and stronger financial performance. This GenAI project marks the client’s initial foray into the field, setting the stage for scaling additional GenAI use cases organization-wide.

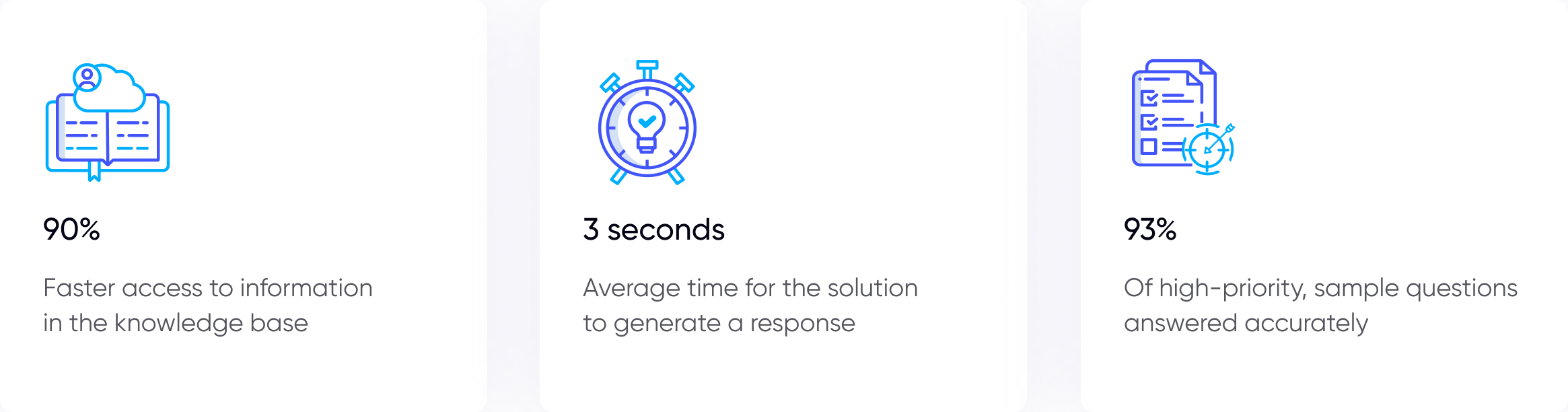

- 90% faster access to information in the knowledge base

- 3 seconds: average time for the solution to generate a response

- 93% of high-priority sample questions answered accurately

Streamlining Access to Siloed Company Information Using GenAI’s Advanced Search Capabilities

Venerable is a well-established leader in the insurance industry, specializing in the acquisition and management of legacy variable annuity portfolios.

Annuities are financial products that offer payments at regular intervals, typically used to ensure a steady cash flow for retired recipients.

Managing annuities is generally straightforward, but variable annuities present a unique challenge (especially when managed on a secondary market). They allow the owner to allocate funds across various investment options, such as stocks, bonds, and mutual funds, with varying payouts based on the performance of those investments. While offering the potential for higher returns, they also carry a much higher risk. It is critically important for Venerable to manage their customers’ portfolios in ways that minimize risks and maximize payouts, while also streamlining operations to capitalize on annuity margins.

In the insurance industry, providing employees with tools that help them work quickly and accurately is crucial. Such tools can:

- Reduce the risks associated with management of variable annuities

- Improve the performance, productivity, and satisfaction of employees

- Boost the performance and value of customer investment products over time

- Unlock economies of scale to enhance operational performance organization-wide, allowing the business to achieve higher profit margins on managed products

Recognizing this, the leaders of Venerable were eager to explore the potential of generative AI technology in their back-office (HR & Admin) and customer-centric departments (Customer Support, Document Management, Analytics, etc.). Provectus, an AI-first Consultancy with AWS Generative AI Competency, was chosen as a valued partner in this venture.

Given the numerous potential applications of generative AI, it was essential for both Provectus and Venerable to launch several discovery sessions to identify the most impactful GenAI use case. After careful consideration, it was decided to utilize generative AI to streamline knowledge base searches for employees. The envisioned GenAI solution — a user-friendly conversational interface — would streamline access to company information, scattered across intranet wiki pages, cloud folders, and documents in different formats. Following discussions between the client’s leaders and Provectus, it was decided that the initial focus of the GenAI solution would be to search across documents such as HR policies, the employee handbook, and engineering Standard Operating Procedures (SOPs).

For the client, reducing time-to-insight represents a significant opportunity to streamline back-office and customer-centric operations, and achieve higher profit margins.

With the solution, employees would be able to simply type in their questions about company policies, procedures, or expectations, and the GenAI assistant would almost instantly deliver accurate, contextually relevant answers. This eliminates time spent navigating conventional intranet searches, accessing various documents, and scrolling for information. Time saved from searching would directly enhance Venerable’s business operations, leading to improved productivity and increased value of customers’ variable annuities.

With these advantages in mind, Venerable partnered with Provectus to develop an initial prototype of the GenAI assistant for intelligent search. This effort also served as an experiment with generative AI, boosting the client’s confidence and paving the way to explore additional applications in the future.

Designing and Building a GenAI-powered Assistant for Intelligent Search on Amazon Bedrock

At Provectus, we recognize how important it is for businesses to start their AI adoption journey with use cases that deliver measurable value in the short term, while also serving as a foundation for full-scale AI transformation.

With this in mind, our collaboration with Venerable began with a comprehensive discovery phase. During this phase, Provectus’ AI experts worked with the client’s business partners to identify, define, and prioritize the most impactful GenAI use cases. We examined the client’s strategic initiatives, looked into the pain points of their organizational processes, assessed Data and AI readiness, and evaluated IT processes, technologies, and skill sets.

As a result of the discovery phase, Venerable opted to begin its generative AI journey with an intelligent search use case that Provectus would develop during the pilot phase. The client’s employees would evaluate each iteration of the solution prototype, providing feedback on its real-world use.

During the pilot phase, the Provectus team worked iteratively, progressing step by step:

- Compiled an evaluation dataset consisting of high-priority questions provided by Venerable

- Gathered exemplary responses to these questions, to help assess the performance of various foundation models

- Evaluated all Large Language Models (LLMs) available through Amazon Bedrock using the selected questions

- Identified the top five models based on quantitative metrics (e.g. faithfulness), human evaluation, and cost-efficiency

- Collected feedback from select employees to better understand the specifics of model performance

- Chose Amazon Titan (Embeddings) for search and Anthropic’s Claude 3 Haiku for response generation, based on the quantitative metrics and employee feedback

- Fine-tuned model parameters to further optimize performance, and to improve accuracy and relevancy in handling employee questions

- Tested a production-ready GenAI solution with approximately 100 questions provided by Venerable
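The model-selection step above combines quantitative metrics, human evaluation, and cost-efficiency. The following is a minimal sketch of how such a shortlist could be ranked, not the scoring Provectus actually used: the `Candidate` fields, the weights, and the cost normalization are all assumptions made for illustration, and any numbers passed in would be placeholders rather than measured results.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One evaluated model (all field names are hypothetical)."""
    name: str
    faithfulness: float        # automatic metric, assumed to lie in [0, 1]
    human_rating: float        # mean reviewer score, assumed to lie in [0, 1]
    cost_per_1k_tokens: float  # lower is better


def rank_candidates(candidates, weights=(0.4, 0.4, 0.2), top_n=5):
    """Return the top_n candidates by a weighted score.

    The weights are illustrative assumptions, not values from the project.
    Cost is converted to a score in [0, 1] where the most expensive
    candidate gets 0 and a free model would get 1.
    """
    w_faith, w_human, w_cost = weights
    max_cost = max(c.cost_per_1k_tokens for c in candidates)

    def score(c):
        cost_score = 1.0 - c.cost_per_1k_tokens / max_cost if max_cost > 0 else 0.0
        return (w_faith * c.faithfulness
                + w_human * c.human_rating
                + w_cost * cost_score)

    return sorted(candidates, key=score, reverse=True)[:top_n]
```

A caller would build one `Candidate` per evaluated model from its own evaluation run and pass the list to `rank_candidates`; changing the weights shifts the balance between answer quality and cost.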

In simple terms, the GenAI solution is a conversational user interface that allows employees to query the knowledge base by asking questions in natural language. The solution can handle documents in PDF, DOCX, PPTX, and other formats that are automatically uploaded to an Amazon S3 bucket for easy access. Employees can rate the answers they receive in the solution’s UI, to continuously improve the model’s output.

The GenAI solution for intelligent search was developed using a technology stack that includes Python, LlamaIndex, FastAPI, and Swagger. It employs Amazon Titan (Embeddings) and Anthropic’s Claude 3 Haiku, both available through Amazon Bedrock, as its core models. These models were selected for their performance, cost-effectiveness, and high ratings from Venerable’s employees, with a focus on scalable, high-quality text generation.
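The pairing of an embedding model for search with a language model for response generation follows a retrieve-then-generate pattern. The sketch below illustrates only the retrieval and prompt-assembly half, under loud assumptions: a toy word-count `embed` function stands in for the embedding model, no Bedrock or LlamaIndex call is made, and the document names, texts, and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c["text"])), reverse=True)
    return ranked[:k]


def build_prompt(question, retrieved):
    """Assemble the text that would be sent to the generation model."""
    context = "\n".join(f"[{c['source']}] {c['text']}" for c in retrieved)
    return ("Answer using only the context below and cite the source.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


# Hypothetical knowledge-base chunks, for illustration only.
CHUNKS = [
    {"source": "hr_policy.pdf", "text": "Employees accrue vacation days each month of service."},
    {"source": "handbook.docx", "text": "The office dress code is business casual."},
    {"source": "sop_deploy.pptx", "text": "Deployments require approval from the release manager."},
]
```

Keeping the source name attached to each chunk is what lets the final answer link back to the originating document. In a real deployment, `embed` would be replaced by calls to the embedding model and the prompt would be sent to the generation model.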

The quality of the solution’s output was assessed using evaluation metrics such as ROUGE and BERTScore. Additionally, Relevancy and Faithfulness metrics were calculated against a ground-truth dataset.
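To make the ROUGE family of metrics concrete, here is a simplified sketch of ROUGE-1 and ROUGE-L computed as plain F1 scores between a generated answer and a reference answer. It assumes whitespace tokenization and equal weighting of precision and recall, so its values may differ from those of an established ROUGE package; it is not the evaluation code used in the project.

```python
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenization (a simplifying assumption)."""
    return text.lower().split()


def _f1(overlap, cand_len, ref_len):
    """F1 from an overlap count and the two sequence lengths."""
    if overlap == 0:
        return 0.0
    precision = overlap / cand_len
    recall = overlap / ref_len
    return 2 * precision * recall / (precision + recall)


def rouge_1_f1(candidate, reference):
    """Unigram overlap F1 between candidate and reference."""
    cand, ref = Counter(tokenize(candidate)), Counter(tokenize(reference))
    overlap = sum((cand & ref).values())  # clipped unigram matches
    return _f1(overlap, sum(cand.values()), sum(ref.values()))


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            curr.append(prev[j - 1] + 1 if x == y else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate, reference):
    """Longest-common-subsequence F1 between candidate and reference."""
    c, r = tokenize(candidate), tokenize(reference)
    return _f1(lcs_length(c, r), len(c), len(r))
```

ROUGE-1 rewards shared words regardless of order, while ROUGE-L also rewards preserving word order. Both measure surface overlap only, which is why embedding-based metrics such as BERTScore and human review are useful complements.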

The solution is designed for scale, to produce high-quality text responses, while integrating seamlessly with AWS services. It is well-documented for AWS deployment and includes Docker support for local development, making it easily adaptable to any future use cases that the client might choose to experiment with.

Unleashing Employee Productivity and Efficiency: Generative AI as a Driver of Business Value

In the insurance industry, regulatory compliance, operational efficiency, and customer service quality determine a business’s success or failure. For companies like Venerable, efficient management of employee time and resources is critical, and even a simple improvement in back-office and customer-centric processes can make a significant difference for both the client’s business and its customers.

The success of Venerable’s project on the Generative AI-powered Assistant for Intelligent Search is a good example of this.

Making it easy for employees to access siloed company knowledge, ranging from basic documents like HR policies and employee handbooks to engineering SOPs, can ensure that both customer-centric and back-office operations run smoothly and efficiently.

Quick access to siloed company knowledge enables Venerable to:

- Enhance employee performance and productivity by reducing the time spent searching for information

- Ensure that all actions are compliant with industry standards, as well as with internal policies and protocols

- Prioritize higher-value tasks such as communicating with customers, processing annuity documents, and managing investment portfolios

The GenAI solution for intelligent search, developed by Provectus, has delivered the accuracy, speed, and usability Venerable needs to streamline operations, improve customer satisfaction, and accelerate business growth.

Before adopting the GenAI solution, only about 50% of the client’s employees were satisfied with their experience searching for information in the knowledge base, and only 5% could find the information they needed on the first search.

The GenAI solution for intelligent search has dramatically changed their experience. It accurately answers 100% of the top ten selected questions and 93% of sample questions. On average, it takes the solution three seconds to generate an accurate and contextually relevant response (a 90% reduction in time needed to find the required information).

With Amazon Titan (Embeddings) and Anthropic’s Claude 3 Haiku at its core, the GenAI solution is designed to be increasingly performant and cost-effective. For example, the performance of Claude 3 Haiku is 95% better than that of Claude 3 Sonnet, which was also considered as a core LLM. Haiku is also 12x less costly than Sonnet, and more than 40x more cost-efficient than GPT-4 Turbo.

Overall, compared to their previous search experience, which required multiple attempts to find the right answer, Venerable’s employees can now find answers and links to a source within seconds. Time saved in information searches, while seemingly minuscule, adds up, enabling Venerable to serve more clients, capitalize on annuity margins, and maximize the performance and value of their customer investment portfolios over the long term. The solution’s success helped to build confidence in generative AI, laying the groundwork for future use cases to be built on AWS, with Provectus as a valued partner.

Provectus continues to work with Venerable to enhance and scale the solution. Next steps include improving information crawling, routing, and indexing; integrating the solution with Microsoft Teams; and operationalizing the solution using MLOps best practices.

Moving Forward

- Learn more about GenAI’s potential from business and technology perspectives in The CxO Guide to Generative AI: Threats and Opportunities

- Assess the readiness of your organization to adopt GenAI solutions with the Generative AI Readiness Assessment with Provectus

- Develop high-value generative AI use cases with the “Generative AI by Provectus” workshop

