August 20, 2025

Differential Privacy for LLM Pipelines: Lessons from Anthropic’s Clio

Authors:

Pavel Borobov, Staff Solutions Architect at Provectus

Alberto Sossa, ML Solutions Architect at Provectus

Introduction

The enterprise adoption of Large Language Models (LLMs) has accelerated rapidly, transforming domains like customer service, content creation, software development, and data analysis. However, this rapid proliferation has outpaced privacy safeguards, widening the gap between AI capabilities and data protection compliance. A question emerges: Can we harness LLMs’ full potential without compromising user privacy?

Despite the urgent need for innovative, practical privacy solutions, many organizations still rely on outdated anonymization techniques easily circumvented by modern re-identification or membership inference attacks. LLMs trained on sensitive data can inadvertently memorize and leak personally identifiable information (PII), raising user risks and regulatory exposure for enterprises.

Differential Privacy (DP) offers a mathematically rigorous framework to constrain individual-level information leakage.

However, applying DP techniques at the scale and complexity of LLMs has proven challenging – both computationally and architecturally.

Anthropic’s new system, Clio, presents a compelling case study: a production-scale, privacy-preserving analytics system built on millions of user interactions with Anthropic’s Claude. Clio demonstrates that large-scale LLM analytics can preserve user privacy through pragmatic engineering, balancing utility, transparency, and empirical safeguards.

What This Article Covers

- Mastering DP in LLM Pipelines: Key definitions, attack vectors, and implementation trade-offs

- Overcoming Engineering Constraints: Privacy budget accounting, training performance, and inference-time mitigation

- Diving Deep into Clio’s Architecture: A four-layer statistical privacy model for production-grade analytics

- Building Your Own Solution: Practical guidance, code patterns, infrastructure strategies, and when to apply DP vs. statistical safeguards

Differential Privacy Fundamentals for LLM Pipelines

What Is LLM Differential Privacy?

Differential Privacy (DP) provides a mathematical guarantee that an algorithm’s output remains statistically almost indistinguishable whether or not any single data record is included in the training dataset. Unlike heuristic privacy measures, DP quantifies how much information about an individual can leak through model parameters or inference outputs.

The formal definition centers on the principle of indistinguishability, a notion analogous to semantic security in cryptography.

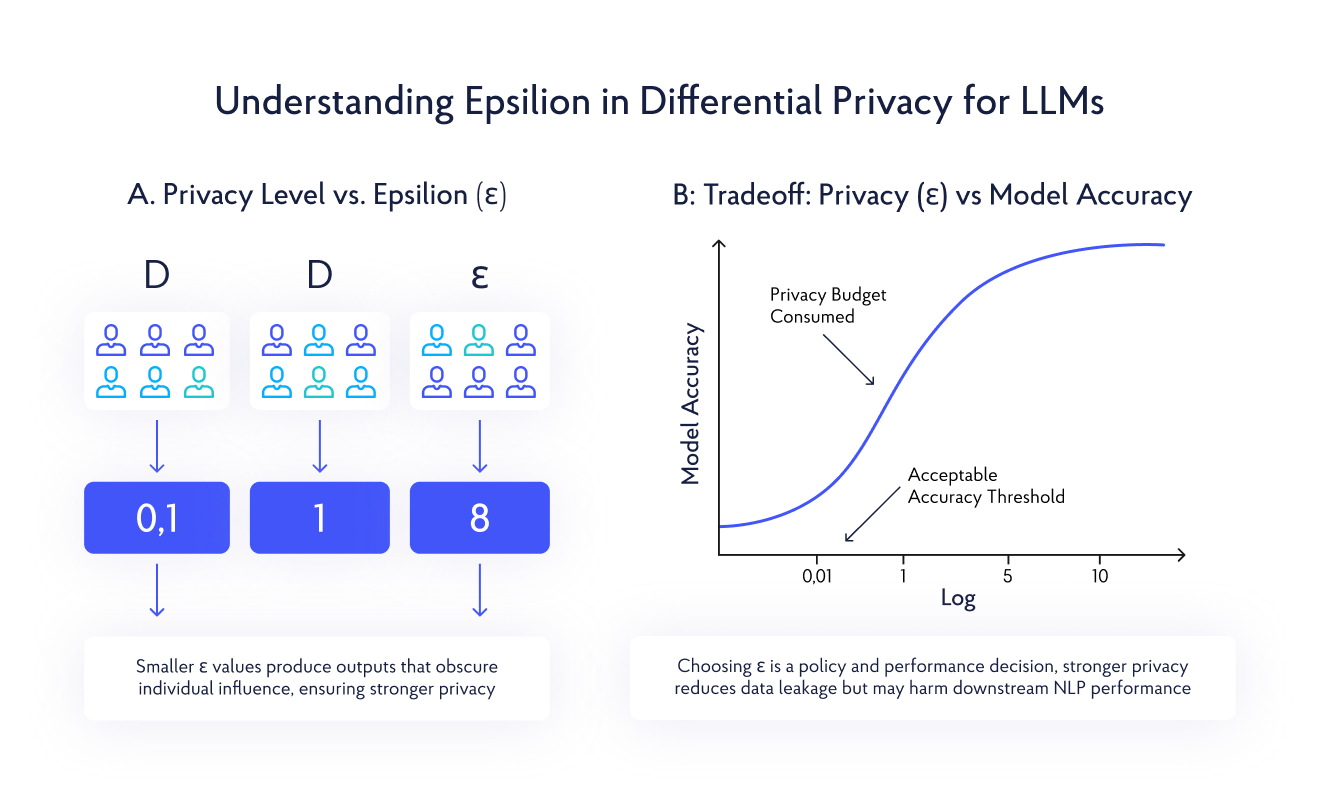

A differentially private algorithm produces statistically similar outputs for any two neighboring datasets D and D′ that differ in exactly one record. This similarity is controlled by the privacy parameter ε (epsilon), which bounds worst-case information leakage about any individual. A smaller ε implies stronger privacy guarantees.

Mathematically, an algorithm M satisfies ε-differential privacy if for every set of outputs S ⊆ Range(M) and all neighboring datasets D, D′:

Pr[M(D) ∈ S] ≤ e^ε · Pr[M(D′) ∈ S]

In LLM training, this creates concrete constraints. For example, when Meta researchers trained RoBERTa with ε = 8, they guaranteed that including or excluding any individual’s data would change the probability of any specific model output by at most a factor of e^8 ≈ 2,981. While this sounds large, it is still bounded leakage, in contrast to models trained without differential privacy, where individual influence can be unbounded.
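To make the ε bound concrete, the sketch below applies the Laplace mechanism to a counting query. This is a standard textbook ε-DP mechanism rather than anything specific to LLM training, and the function names and toy records are illustrative assumptions. It checks that the output densities on two neighboring datasets never differ by more than a factor of e^ε.

```python
import math
import random


def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponentials with mean `scale`
    # is Laplace-distributed with location 0 and that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    # A counting query has sensitivity 1: adding or removing one record
    # changes the true count by at most 1, so the noise scale is 1 / epsilon.
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


def laplace_density(x, location, scale):
    return math.exp(-abs(x - location) / scale) / (2.0 * scale)


def max_density_ratio(count_d, count_d_prime, epsilon, outputs):
    # Largest ratio of output densities between two neighboring datasets.
    scale = 1.0 / epsilon
    return max(
        laplace_density(x, count_d, scale) / laplace_density(x, count_d_prime, scale)
        for x in outputs
    )


if __name__ == "__main__":
    rng = random.Random(0)
    d = ["flu", "cold", "asthma", "flu"]  # toy dataset D
    d_prime = d[:-1]                      # neighboring dataset D' (one record removed)
    print(private_count(d, lambda r: r == "flu", epsilon=1.0, rng=rng))
    grid = [x / 10.0 for x in range(-100, 100)]
    print(max_density_ratio(2, 1, 1.0, grid) <= math.exp(1.0) * (1 + 1e-9))
```

The same inequality is what the ε = 8 example relies on: the bound is multiplicative, so e^8 is a ratio of probabilities, not an absolute change.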

In enterprise LLM pipelines, differential privacy addresses several critical threats:

- Training Data Extraction: Prevents reconstruction of individual training examples from model weights or gradients

- Membership Inference Attacks: Obscures whether specific proprietary documents were present in the training corpus

- Model Inversion Attacks: Defends against attackers attempting to reverse-engineer training data from output logits or checkpoints

The epsilon parameter acts as a privacy budget: a finite resource that determines protection strength and constrains utility and computational overhead. Managing this budget effectively is essential for building production-grade LLMs that satisfy compliance, privacy, and performance goals at the same time.

How Does DP Work in Practice?

Differential privacy is not a one-off technique but rather a set of enforceable guarantees integrated directly into the machine learning pipeline. For enterprise-scale LLMs, this translates into architectural interventions across training, inference, and privacy accounting.

1. Training with DP-SGD

The foundation of private model training is Differentially Private Stochastic Gradient Descent (DP-SGD). During training, each example’s gradient is individually clipped to limit its influence, then the clipped gradients are summed and Gaussian noise is added. This prevents any one datapoint from disproportionately shaping the model.
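The clip, sum, and noise sequence can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not how Opacus implements it: gradients are plain lists, and `dp_sgd_step`, `clip_gradient`, and the parameter names are hypothetical.

```python
import math
import random


def clip_gradient(grad, max_grad_norm):
    # Scale the gradient down so its L2 norm is at most max_grad_norm.
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_grad_norm / (norm + 1e-12))
    return [g * factor for g in grad]


def dp_sgd_step(params, per_example_grads, lr, max_grad_norm, noise_multiplier, rng):
    # 1. Clip each example's gradient individually.
    clipped = [clip_gradient(g, max_grad_norm) for g in per_example_grads]
    # 2. Sum the clipped gradients coordinate by coordinate.
    summed = [sum(column) for column in zip(*clipped)]
    # 3. Add Gaussian noise calibrated to the clipping threshold.
    sigma = noise_multiplier * max_grad_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    # 4. Average over the batch and take a gradient step.
    batch_size = len(per_example_grads)
    return [p - lr * n / batch_size for p, n in zip(params, noisy)]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[100.0, 0.0], [0.0, 0.5]]  # the first example is an outlier
    print(dp_sgd_step([0.0, 0.0], grads, lr=1.0, max_grad_norm=1.0,
                      noise_multiplier=1.1, rng=rng))
```

Because the outlier gradient is clipped to norm 1.0 before summation, its influence on the update is bounded regardless of its original magnitude.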

DP-SGD is production-ready today via libraries like Opacus and TensorFlow Privacy. For example, fine-tuning a Hugging Face transformer using Opacus:

```python
from opacus import PrivacyEngine

# model, optimizer, train_loader, and epochs are assumed to be defined earlier
privacy_engine = PrivacyEngine()
model, optimizer, train_loader = privacy_engine.make_private_with_epsilon(
    module=model,
    optimizer=optimizer,
    data_loader=train_loader,
    epochs=epochs,
    target_epsilon=3.0,  # total privacy budget for the run
    target_delta=1e-5,   # failure probability
    max_grad_norm=1.0,   # per-example clipping threshold
)
```

However, DP-SGD imposes significant costs. Training overheads of 3-5× are typical, especially in large transformer models. To mitigate this, privacy-first systems often apply DP strategically to fine-tuned layers, freeze large portions of the base model, or use parameter-efficient adapters like LoRA.

2. Differentially Private Inference

Inference-time defenses play an important role in preventing sensitive data from leaking through model outputs. Even models trained with DP-SGD can reproduce sensitive content under specific prompting techniques. Modern systems combine several defenses:

- Logit Perturbation: Adds calibrated noise (typically Laplace or Gaussian) directly to logits before the softmax layer, reducing the risk of outputting memorized strings verbatim

- Temperature Scaling: Raises the softmax temperature to increase entropy in the output distribution, making the model less confident in any single token

- Top-K or Nucleus Sampling: Restricts tokens considered for sampling to prevent exposure of rare, possibly memorized tokens

While these techniques do not offer formal ε-bounded guarantees individually, they are essential for real-time systems where queries can be adversarial.
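The three defenses listed above can be combined in a single sampling function. The sketch below is illustrative only: `sample_token` and its parameters are hypothetical names, the noise scale is not calibrated to any ε, and, as noted, none of this yields a formal guarantee.

```python
import math
import random


def sample_token(logits, rng, temperature=1.0, top_k=None, noise_scale=0.0):
    # 1. Logit perturbation: add Gaussian noise to every logit.
    noisy = [logit + rng.gauss(0.0, noise_scale) for logit in logits]
    # 2. Temperature scaling: values above 1.0 flatten the distribution.
    scaled = [value / temperature for value in noisy]
    # 3. Top-k: keep only the k highest-scoring token ids.
    candidates = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]
    # Softmax over the surviving candidates (shifted for numerical stability).
    peak = max(scaled[i] for i in candidates)
    weights = [math.exp(scaled[i] - peak) for i in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [5.0, 4.0, -10.0, -10.0]  # token ids 2 and 3 are rare
    print(sample_token(logits, rng, temperature=1.5, top_k=2, noise_scale=0.1))
```

With `top_k=2` and a small noise scale, the rare token ids 2 and 3 are almost never sampled, since the noise would have to overturn a gap of roughly 14 logits to bring them into the top two.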

3. Tracking Privacy Loss

Privacy accounting transforms differential privacy from a theoretical concept into an engineering constraint. Every DP operation consumes a finite privacy budget, measured through ε (epsilon) for worst-case leakage and δ (delta) for failure probability.

Modern implementations use Rényi Differential Privacy (RDP) rather than naive composition, providing tighter bounds across multiple training steps. Production ecosystems implement real-time budget tracking through:

- Opacus integrates RDP accounting directly into PyTorch training loops

- TensorFlow Privacy supports both RDP and legacy Moments Accountant

- Google’s DP library handles streaming analytics with pluggable accounting mechanisms

Enterprise deployments treat privacy budgets like finite, measurable, and exhaustible computational resources. Without rigorous accounting, mathematical guarantees become meaningless promises.
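As a rough illustration of how RDP accounting works, the sketch below composes repeated Gaussian mechanisms and converts the result to an (ε, δ) guarantee using the standard RDP conversion. It deliberately omits the subsampling amplification that production accountants such as the one in Opacus include, so its numbers will not match what those libraries report. The function name and the grid of orders are assumptions.

```python
import math


def rdp_epsilon(noise_multiplier, steps, delta, orders=None):
    """Compose `steps` Gaussian mechanisms via RDP, then convert to (epsilon, delta)-DP."""
    if orders is None:
        orders = [1.0 + x / 10.0 for x in range(1, 1000)]
    best = float("inf")
    for alpha in orders:
        # Gaussian mechanism with sensitivity 1: RDP at order alpha is
        # alpha / (2 * sigma^2). RDP composes additively across steps.
        rdp = steps * alpha / (2.0 * noise_multiplier ** 2)
        # Standard conversion from RDP to (epsilon, delta)-DP.
        epsilon = rdp + math.log(1.0 / delta) / (alpha - 1.0)
        best = min(best, epsilon)
    return best


if __name__ == "__main__":
    for steps in (100, 400):
        print(steps, rdp_epsilon(noise_multiplier=10.0, steps=steps, delta=1e-5))
```

In this toy setting, quadrupling the number of steps increases ε by far less than a factor of four, which is the practical benefit of tracking privacy loss in RDP rather than adding per-step ε values.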

The Challenges Of Integrating DP in LLM Pipelines

Implementing differential privacy in production language models highlights notable conflicts between theoretical expectations and practical integration constraints. Unlike traditional machine learning, where privacy measures yield predictable outcomes, large language models present complex challenges that intensify with scale and architecture.

Training LLMs with DP incurs considerable computational cost, typically 3-5× that of non-private training, because gradients must be computed and clipped per example. Memory requirements also escalate because individual gradients must be stored, which is manageable for smaller models like BERT-base but impractical for larger systems like GPT-3 without advanced techniques such as ghost clipping. In addition, splitting the privacy budget across distributed nodes requires intricate accounting that traditional infrastructure struggles to support.

Furthermore, the privacy-performance trade-off restricts production deployments. Systems with ε values of 3 often experience a 5-10% decline in performance compared to non-private models, while ε = 8 systems nearly match non-private performance but offer weaker privacy. Stronger privacy at lower epsilon values remains largely untested in large-scale models.

Additionally, emergent behaviors in LLMs, including few-shot learning, lie outside gradient-based privacy analysis, introducing black-box risks in advanced deployments. Adversarial prompting exacerbates the challenge as attackers devise techniques to bypass existing defenses.

Going Beyond Differential Privacy: Anthropic’s Clio Approach

When Mathematical Guarantees Break Down

Enterprise LLM deployments reveal a complex landscape where formal differential privacy shows promising advancements but encounters considerable deployment challenges. The paper Large Language Models Can Be Strong Differentially Private Learners (Li et al.) represents a significant milestone in private machine learning, illustrating that ε = 3 systems can achieve near-baseline performance through three essential ingredients: leveraging large pretrained models, choosing hyperparameters suited to noisy gradients, and aligning fine-tuning objectives with pretraining objectives.

These developments challenge the belief that differential privacy falters at scale due to dimension-dependent noise. Li et al.’s ghost clipping technique reduces memory usage by 22× for embedding layers, while their hyperparameter findings – large batch sizes with high learning rates – contradict traditional views on private training. This research indicates that formal differential privacy, implemented well, can succeed in LLM training.

However, a core architectural mismatch persists: textual analytics conflict with the bounded sensitivity assumptions required by differential privacy. While DP-SGD protects training data with mathematical noise, it struggles with the exploratory nature of modern LLM applications. As Anthropic researchers note, “formal guarantees such as differential privacy” are challenging for systems producing complex textual outputs. The limitations extend beyond computational costs; they stem from differential privacy’s foundational assumptions regarding query spaces and output formats.

Conversational AI systems inherently breach these assumptions. User queries are limitless and adversarial, while model outputs are rich and noise-sensitive. Analytics demand discovery of patterns in unstructured text, not fixed statistical computations. This divide between formal privacy guarantees and practical analytics needs spurred Anthropic to create Clio – not to dismiss differential privacy but to evolve it, addressing the analytic challenges that mathematical methods cannot resolve.

Clio’s Statistical Privacy – A Much-Required Paradigm Shift

When developing Clio, Anthropic favored a statistical, empirically validated approach rather than relying solely on DP. This shift acknowledges a significant trade-off in privacy-preserving systems. While formal differential privacy provides mathematical certainty about maximum privacy loss, it often undermines system utility. Statistical privacy techniques forgo strict guarantees in favor of practical effectiveness, employing imperfect privacy layers that collectively reduce risks below detection thresholds in curated audits.

Clio’s architecture combines aggregation thresholds with AI-driven privacy filtering to achieve privacy protection similar to differential privacy, especially for exploratory analytics on conversational data. Instead of adding calibrated noise to meet ε-δ constraints, Clio uses AI models to identify and remove personally identifiable information throughout its pipeline. This approach aligns with differential privacy principles while overcoming its limitations. While differential privacy often adds Gaussian noise during training, Clio applies privacy-preserving transformations to the outputs in inference and analytics. These systems complement each other, addressing different stages of the large language model lifecycle.

Four-Layer Privacy Architecture

Clio’s privacy protection operates through cascading layers that progressively eliminate private information while preserving analytical value. This defense-in-depth strategy mirrors security engineering best practices, recognizing that no privacy mechanism can provide perfect protection in isolation.

Layer 1: Conversation Summarization

Anthropic’s Claude 3 Haiku models extract high-level topics while explicitly omitting personally identifiable information – reducing private content from ~10% to 1.5%.

Unlike differential privacy’s uniform noise addition, AI-powered filtering adapts to context, preserving semantic meaning while removing identifying details. The temperature parameter (0.2) ensures consistent, low-entropy outputs that stabilize summarization and reduce variability that could reintroduce sensitive edge cases.
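A summarization layer of this kind might be wired up as in the sketch below. The prompt text is a hypothetical stand-in, not Clio’s actual prompt, and `complete` is an assumed callable that wraps whatever LLM client is in use. Only the temperature of 0.2 comes from the description above.

```python
SUMMARY_PROMPT = (
    "Summarize the topic of the conversation below in one sentence. "
    "Do not include names, locations, contact details, or any other "
    "personally identifiable information.\n\nConversation:\n{conversation}"
)


def summarize_conversation(conversation, complete, temperature=0.2):
    # `complete` is any callable (prompt, temperature) -> str, for example a
    # thin wrapper around an LLM client. Injecting it keeps this sketch
    # independent of a specific SDK.
    prompt = SUMMARY_PROMPT.format(conversation=conversation)
    return complete(prompt, temperature).strip()


if __name__ == "__main__":
    def fake_complete(prompt, temperature):
        # Stand-in for a real model call, used only to exercise the function.
        return "User asks for help debugging a Python script."

    print(summarize_conversation("Hi, I'm Ana. My script crashes...", fake_complete))
```

Keeping the model call behind a plain callable also makes it easy to substitute a different filter model, which matters when diversifying failure modes across layers.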

Layer 2: Aggregation Thresholds

Minimum cluster sizes enforce a k-anonymity-style threshold at the cluster level, requiring a sufficient number of unique accounts and conversations per cluster. This reduces a risk that individual-focused differential privacy does not target: group privacy violations, where small communities could reveal sensitive organizational or demographic information.
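An aggregation threshold of this kind can be expressed as a simple filter over cluster assignments. The sketch below assumes each assignment is a `(cluster_id, account_id, conversation_id)` tuple. The threshold values are placeholders, not Clio’s actual settings.

```python
from collections import defaultdict


def filter_clusters(assignments, min_accounts=25, min_conversations=50):
    """Return the ids of clusters that meet both minimum-size thresholds."""
    accounts = defaultdict(set)
    conversations = defaultdict(set)
    for cluster_id, account_id, conversation_id in assignments:
        accounts[cluster_id].add(account_id)
        conversations[cluster_id].add(conversation_id)
    # Counting unique accounts, not just conversations, prevents one very
    # active user from making a cluster look large.
    return {
        cluster_id
        for cluster_id in accounts
        if len(accounts[cluster_id]) >= min_accounts
        and len(conversations[cluster_id]) >= min_conversations
    }


if __name__ == "__main__":
    rows = [("coding", f"acct{i}", f"conv{i}") for i in range(60)]
    rows += [("rare-topic", "acct1", f"conv-x{i}") for i in range(60)]
    print(filter_clusters(rows))
```

In the usage example, the second cluster has enough conversations but only one account, so it is withheld.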

Layer 3: Cluster Summary Filtering

A second AI processing stage regenerates cluster descriptions using privacy-aware prompts, with contrastive examples from nearby clusters to ensure distinctiveness. This dual-AI approach creates redundant privacy protection in the spirit of defense in depth. The analogy to differential privacy’s composition is loose: under formal DP, composing operations accumulates privacy loss, whereas here each additional filter is intended to catch what earlier ones missed.

Layer 4: Automated Privacy Auditing

The final layer employs a specialized Claude 3.5 Sonnet model that evaluates privacy on a 5-point scale, achieving 98% accuracy on curated test sets. This automated auditing plays a role similar to differential privacy’s accounting mechanisms by providing quantitative measurement, enabling production deployment with empirically measured privacy protection.
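A gate built on such a rating could look like the sketch below. It assumes that higher scores mean more privacy-preserving summaries and that a score of 3 is the release threshold. Both are illustrative assumptions, and `rate_privacy` is a hypothetical callable wrapping the auditor model.

```python
def audit_clusters(cluster_summaries, rate_privacy, min_score=3):
    """Split summaries into released and withheld using a 1-5 privacy rating."""
    released, withheld = {}, {}
    for cluster_id, summary in cluster_summaries.items():
        score = rate_privacy(summary)
        if not 1 <= score <= 5:
            raise ValueError(f"privacy score out of range: {score}")
        target = released if score >= min_score else withheld
        target[cluster_id] = summary
    return released, withheld


if __name__ == "__main__":
    summaries = {
        "c1": "Users ask for help writing cover letters",
        "c2": "A named individual discusses a medical diagnosis",
    }
    # Stand-in rater used only to exercise the gate.
    fake_rater = lambda text: 1 if "named individual" in text else 5
    print(audit_clusters(summaries, fake_rater))
```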

Production Performance Metrics

Clio’s real-world deployment shows that statistical privacy approaches can deliver large-scale analytics while maintaining strong, empirically validated privacy protection across all layers. The system processes over 1 million conversations without the computational overhead of formal differential privacy.

Where Clio’s Privacy Approach Falls Short

Despite its robust multi-layered privacy design, Clio faces several operational limitations that must be addressed for enterprise-grade deployments. These limitations do not undermine its core architecture but instead emphasize the importance of strategic reinforcement and continuous monitoring.

1. Correlated Failures Across Privacy Layers

Clio’s architecture extensively utilizes Claude’s AI models for summarization, clustering, filtering, and auditing. While layering enhances fault tolerance, it simultaneously increases the risk of correlated failures. Specifically, if one layer’s AI model overlooks sensitive content, subsequent models – trained on similar datasets and algorithms – may similarly fail to identify this sensitive information. For instance, health-related euphemisms bypassing initial summarization could subsequently remain undetected through clustering and filtering stages.

Although Anthropic mitigates this vulnerability through periodic model updates, the inherent risk posed by shared model architectures and training data persists.

To better protect privacy, companies should use different safety measures. For example, they can apply pattern-matching rules, like regular expressions, to find personally identifiable information (PII). They can also use dictionary-based redaction after semantic filtering. These extra steps create varied failure modes. This way, if one model fails, it is less likely to harm all privacy layers.
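A rule-based pass of this kind might look like the following sketch. The patterns are deliberately simple, US-centric examples and the blocked-term list is hypothetical. A production system would need broader, locale-aware rules.

```python
import re

# Illustrative patterns only: they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text, blocked_terms=()):
    # Pattern-matching rules run first and replace each match with a label.
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    # Dictionary-based redaction then removes known sensitive terms.
    for term in blocked_terms:
        text = re.sub(re.escape(term), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    print(redact("Mail jane.doe@example.com or call 555-123-4567",
                 blocked_terms=["Acme Clinic"]))
```

Because these rules fail in different ways than a language model does, they are unlikely to miss the same inputs as the semantic filters.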

2. Group Privacy Violations

While Clio enforces k-anonymity at the individual level using aggregation thresholds, it does not fully address group privacy risks. Even without naming individuals, summarizing clusters representing narrow communities (e.g., employees from a small vendor or participants in niche medical programs) can reveal sensitive group characteristics.

Anthropic’s approach uses contrastive prompting and cluster generalization, but this assumes group diversity sufficiently obfuscates specificity. In reality, small, tightly defined populations remain vulnerable. Enterprise teams must integrate group-sensitive taxonomies, maintain internal flag lists for known high-risk communities, and trigger review pipelines for narrow clusters. Privacy validation must go beyond cardinality to assess semantic and demographic exposure at the group level.

3. Risks of False Positive Enforcement

Clio clusters are optimized for insight generation, not enforcement. Yet in production environments, the temptation to use clustering for moderation, throttling, or access control is real, and risky. Clustering models are probabilistic, meaning misclassification is inevitable. When downstream actions occur without human validation, false positives can impact innocent users, eroding trust and triggering compliance liabilities.

Anthropic explicitly cautions against using Clio for enforcement. Enterprises should build gating logic and escalation paths where AI-generated outputs merely inform decisions. For example, clusters exceeding sensitivity thresholds should trigger human-in-the-loop review, not direct enforcement.

Confidence scoring, keyword triggers, and audit trails must all contribute to a robust governance layer that separates signal from action.
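One way to keep signal separate from action is a router that can only report or escalate, never enforce. The sketch below is a hypothetical design: the class name, thresholds, and decision labels are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewRouter:
    sensitivity_threshold: float = 0.7  # hypothetical cut-off
    min_confidence: float = 0.5         # hypothetical cut-off
    audit_log: list = field(default_factory=list)

    def route(self, cluster_id, sensitivity, confidence):
        # The router only informs decisions: it never returns an enforcement
        # action such as "block" or "throttle".
        needs_review = (
            sensitivity >= self.sensitivity_threshold
            or confidence < self.min_confidence
        )
        decision = "human_review" if needs_review else "report_only"
        # Every decision is recorded so reviewers can trace it later.
        self.audit_log.append(
            {"cluster_id": cluster_id, "sensitivity": sensitivity,
             "confidence": confidence, "decision": decision}
        )
        return decision


if __name__ == "__main__":
    router = ReviewRouter()
    print(router.route("cluster-17", sensitivity=0.9, confidence=0.95))
    print(router.route("cluster-18", sensitivity=0.1, confidence=0.90))
```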

4. User Trust Erosion

Even anonymized data analysis can feel invasive, especially in sensitive areas like personal banking, government ID services, and healthcare. When users know their conversations are being summarized, grouped, and analyzed, it can make them uncomfortable or less engaged, regardless of the actual risk.

Anthropic tackles this issue with transparency reports and access controls, but building trust goes beyond compliance – it is about the user experience.

Enterprises should create a dedicated privacy UX layer. This includes opt-in messaging, real-time previews of captured data, and interactive privacy notices that help users understand and control their privacy. Internal privacy practitioners should work with product owners, legal teams, and independent ethics reviewers with no conflict of interest to review trust metrics quarterly, align messaging, run pulse checks, and address concerns before they become major issues.

5. Privacy Drift Over Time (Emergent Risk)

Beyond the privacy drawbacks stated in Anthropic’s paper on Clio, the emergent risk problem remains unexplored.

Clio does not currently address privacy drift – the gradual decline in filter performance as new terms, entities, or cultural artifacts emerge. Without proactive mechanisms to detect and adapt to novel identifiers (e.g., new diseases, slang, or acronyms), statistical filters will lag behind adversarial inputs or new sensitive vocabulary.

Anthropic relies on periodic model upgrades, but in fast-moving environments, this may be insufficient.

Enterprises should embed privacy regression testing into CI/CD workflows. Maintain an evolving suite of edge-case examples and test AI layers against them before each deployment. This is a key difference from formal DP: statistical filtering must evolve with the data environment.

A representative Python-based privacy regression testing approach is as follows:

```python
# Privacy regression test (pseudocode: the helpers below are placeholders)
class PrivacyRegressionError(Exception):
    """Raised when a filtered output still contains sensitive terms."""


edge_cases = load_edge_case_samples("privacy_regression.json")
model_output = clio_filter_model.predict(edge_cases)

for i, (sample, output) in enumerate(zip(edge_cases, model_output)):
    if contains_sensitive_terms(output):
        raise PrivacyRegressionError(f"Privacy drift detected at index {i}: {sample}")
```

Incorporating such automated regression testing helps statistical filtering mechanisms keep pace with new threats, terminology, and linguistic contexts, maintaining privacy protections in dynamic operational environments.

Building Privacy-Preserving LLM Pipelines: A Practical Guide

The intersection of formal DP research and Anthropic’s statistical innovations equips enterprise teams with a robust toolkit for implementing privacy-preserving LLM systems. Achieving success requires strategic decisions between mathematical guarantees and statistical validation based on operational needs.

Integration Essentials

API Architecture for Privacy Efficiency

Design request batching patterns that conserve privacy budgets while enhancing throughput. Aggregate similar Claude API calls into batched requests to reduce cost. Where a formal DP mechanism runs over a randomly sampled subset of records, subsampling can also amplify privacy, an effect supported in Google’s DP library.

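```python
# Example: Privacy-optimized request batching (illustrative pseudocode)
# claude_api.batch_process and its privacy parameters are hypothetical
# placeholders, not part of a real Anthropic SDK.
batch_requests = group_by_privacy_sensitivity(user_queries)

for batch in batch_requests:
    response = claude_api.batch_process(
        queries=batch.queries,
        privacy_level=batch.sensitivity_tier,
        epsilon_allocation=batch.budget_allocation,
    )
```

Tiered Privacy Budget Management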

Implement a hierarchical epsilon allocation across system components.

Allocate strict budgets (ε ≤ 1) for compliance-sensitive analytics, while permitting higher allocations (ε = 3-8) for exploratory insights. This tiering, which echoes patterns from Google’s Chrome telemetry and Clio’s implementation, combines mathematical DP guarantees with practical statistical privacy approaches.

Create separate privacy contexts for different data sensitivity levels. High-risk PII processing maintains ε ≤ 1 with full DP-SGD, while aggregate analytics can operate at ε = 8 or use statistical filtering. This tiering prevents over-engineering privacy for low-risk workloads while ensuring compliance for sensitive operations.
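The tiering described above can be sketched as a small budget ledger with one cap per tier. The tier names and the use of basic additive composition are simplifying assumptions. A production accountant would typically use a tighter composition method.

```python
class BudgetExceeded(Exception):
    """Raised when a request would overspend its tier's epsilon cap."""


class TieredBudget:
    def __init__(self, caps):
        self.caps = dict(caps)
        self.spent = {tier: 0.0 for tier in self.caps}

    def spend(self, tier, epsilon):
        # Basic (additive) composition: conservative but simple.
        if self.spent[tier] + epsilon > self.caps[tier]:
            raise BudgetExceeded(f"{tier}: cap {self.caps[tier]} would be exceeded")
        self.spent[tier] += epsilon

    def remaining(self, tier):
        return self.caps[tier] - self.spent[tier]


if __name__ == "__main__":
    budget = TieredBudget({"compliance": 1.0, "exploratory": 8.0})
    budget.spend("exploratory", 3.0)
    budget.spend("compliance", 0.4)
    print(budget.remaining("exploratory"), budget.remaining("compliance"))
```

Refusing the query when a cap would be exceeded is the key design choice: once a tier’s budget is spent, answering further queries would silently void the guarantee.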

Dual-Metric Monitoring

Establish comprehensive observability that tracks utility degradation and privacy consumption. Monitor cluster formation quality, aiming for Clio’s 94% accuracy benchmark, while tracking real-time epsilon exhaustion rates for formal DP components.

Implement live drift detection for adversarial prompting patterns, as model leakage may develop faster than epsilon tracking alone captures. Dashboard visibility into mathematical and statistical metrics prevents privacy budget overruns and utility collapse.

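```python
# Privacy monitoring dashboard components
import hashlib
from collections import defaultdict


class PrivacyMonitor:
    def __init__(self):
        self.epsilon_consumed = 0.0
        self.delta_consumed = 0.0
        # defaultdict avoids a KeyError on the first append
        self.utility_metrics = defaultdict(list)
        # A set stands in for the Bloom filter; swap in a real one at scale
        self.adversarial_patterns = set()

    def track_query(self, query, response, privacy_params):
        # Track epsilon (and, if provided, delta) consumption
        self.epsilon_consumed += privacy_params.epsilon_cost
        self.delta_consumed += getattr(privacy_params, "delta_cost", 0.0)

        # Monitor utility degradation
        self.utility_metrics["accuracy"].append(
            self.measure_response_quality(response)
        )

        # Detect adversarial patterns; store a hash, not the raw query
        if self.is_adversarial(query):
            query_hash = hashlib.sha256(query.encode("utf-8")).hexdigest()
            self.adversarial_patterns.add(query_hash)
            self.trigger_enhanced_filtering()

    # Placeholder hooks: replace with deployment-specific logic
    def measure_response_quality(self, response):
        raise NotImplementedError

    def is_adversarial(self, query):
        raise NotImplementedError

    def trigger_enhanced_filtering(self):
        raise NotImplementedError
```

Infrastructure Optimization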

Deploy AWS Lambda functions for aggregation pipelines and Amazon DynamoDB for state management, creating scalable privacy-preserving analytics infrastructure.

This serverless architecture aligns with Apple’s practical DP implementations and suits aggregation and real-time statistical filtering systems like Clio’s four-layer approach. Computationally intensive DP-SGD training workflows still need dedicated training infrastructure alongside it.

Build hybrid pipelines that combine training-time formal DP with inference-time statistical privacy. Train base models with DP-SGD at ε = 8, then apply Clio-style filtering for production inference. This balances mathematical guarantees during training with practical performance at serving time.

Performance Targets

Enterprise deployments require a sub-50ms privacy overhead for user-facing requests. This is achievable through optimized formal differential privacy noise injection and effective AI-driven statistical filtering methods.

At a scale of 100K+ requests per hour, careful resource allocation is key. Formal differential privacy typically incurs a 3-5× computational overhead during training, but inference costs remain minimal. Statistical privacy systems such as Clio require continuous AI model inference at serving time but add no training-time penalty.
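A back-of-the-envelope calculation shows what these targets imply for capacity. The sketch assumes traffic is spread evenly over the hour, which real workloads rarely are, so the result is a lower bound for planning. The function name is illustrative.

```python
def privacy_capacity(requests_per_hour, overhead_ms):
    requests_per_second = requests_per_hour / 3600.0
    # Little's law: average work in flight = arrival rate x time per request.
    concurrent_privacy_work = requests_per_second * (overhead_ms / 1000.0)
    return requests_per_second, concurrent_privacy_work


if __name__ == "__main__":
    rps, in_flight = privacy_capacity(requests_per_hour=100_000, overhead_ms=50)
    print(f"{rps:.1f} requests/s, {in_flight:.2f} privacy operations in flight")
```

At 100K requests per hour and 50 ms of overhead, this works out to roughly 28 requests per second and fewer than two privacy operations in flight on average, so peak traffic rather than average load is what drives provisioning.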

Maintaining utility retention above 90% is crucial for production viability. This can be accomplished through Li et al.’s advanced differential privacy training, which integrates pretrained model fine-tuning with optimized hyperparameters at ε = 8, or through Clio’s statistical validation, which achieves 94% accuracy.

Strategic Implementation Framework

1. When to Deploy Formal Differential Privacy

Regulatory compliance scenarios that call for mathematical evidence of privacy protection favor formal DP approaches. GDPR Article 25’s “data protection by design and by default” and HIPAA’s technical safeguards favor systems with quantifiable privacy guarantees. Financial services handling PCI DSS data also benefit from epsilon-delta bounds, which demonstrate measurable privacy protection.

High-sensitivity data contexts, such as healthcare records or financial transactions, require the certainty that formal DP provides. Li et al.’s breakthrough protocols achieving ε = 3 prove viable in scenarios where data exposure carries significant legal or reputational risks.

2. When Statistical Privacy Suffices

Exploratory analytics on conversational data exemplify Clio’s strengths: deriving insights from unstructured text where formal DP’s bounded sensitivity assumptions falter. For systems analyzing patterns across support conversations, Clio’s aggregation model offers adequate protection at minimal cost.

Real-time conversational AI systems demanding sub-second response times gain from statistical privacy’s computational efficiency. Marketing analytics, user experience research, and product improvement projects achieve protection through learned privacy filters rather than mathematical noise.

Textual content generation workloads, where noise injection undermines output usability, necessitate statistical methods. Blog analysis, customer feedback processing, and content moderation retain utility through AI-powered filtering, though this creates dependencies requiring continuous validation.

3. Decision Framework: A Condensed Analysis

System architecture mapping guides privacy mechanism selection:

- Data layer: Individual record analysis → formal DP; aggregate pattern analysis → statistical privacy

- Application layer: Predetermined queries → formal DP; exploratory analytics → statistical approaches

- Inference layer: Structured outputs → DP noise injection feasible; rich text → statistical filtering required

- Utility loss tolerance: Formal DP is viable if 5-10% performance degradation is acceptable for compliance benefits. Statistical privacy approaches are more effective for businesses demanding >95% utility retention.

- Compliance requirements mapping: Where regulators or auditors expect mathematical proof (for example, GDPR high-risk processing or healthcare regulations), statistical validation alone may not be accepted. Document regulatory requirements before architectural decisions to avoid costly rework.
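The mapping above can be condensed into a small helper for design reviews. The majority-vote rule and the parameter names are assumptions made for illustration. The criteria themselves come from the list, with a 5% utility-loss tolerance as the point where formal DP becomes viable.

```python
def choose_privacy_mechanism(record_level, predetermined_queries,
                             structured_output, max_utility_loss,
                             needs_formal_proof):
    """Return "formal_dp" or "statistical" for a proposed workload."""
    # A regulatory requirement for mathematical proof overrides everything.
    if needs_formal_proof:
        return "formal_dp"
    # Otherwise count how many criteria point toward formal DP.
    votes_for_dp = sum([
        record_level,              # individual record analysis
        predetermined_queries,     # fixed rather than exploratory queries
        structured_output,         # noise injection is feasible
        max_utility_loss >= 0.05,  # 5-10% degradation is tolerable
    ])
    return "formal_dp" if votes_for_dp >= 3 else "statistical"


if __name__ == "__main__":
    # Exploratory analytics over free-text support conversations.
    print(choose_privacy_mechanism(False, False, False, 0.02, False))
```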

Common Implementation Pitfalls

1. Over-Engineering Initial Deployments

Teams often create custom DP implementations instead of using established libraries. Begin with Opacus for formal DP or adapt Clio’s open methodology for statistical privacy. A major bank spent 6 months building a custom DP-SGD implementation only to discover that Opacus handled their use case with superior performance.

2. Ignoring Utility Metrics

Privacy-focused development without business impact tracking leads to unusable systems. A financial services firm found that their ε = 1 fraud detection system reduced accuracy to below random chance: mathematically private but operationally ineffective. Always establish baseline utility metrics before implementing privacy mechanisms.

3. Insufficient Observability

Teams deploy privacy mechanisms without monitoring epsilon consumption or cluster formation quality. Establish comprehensive dashboards before deployment to track privacy budget exhaustion and performance indicators together. One startup discovered that its privacy budget was exhausted after 3 days of production traffic, rendering subsequent queries non-private.

4. Poor Stakeholder Communication

Technical teams often fail to articulate privacy-utility tradeoffs to business stakeholders. Present concrete examples: “With ε = 3, customer sentiment analysis accuracy drops from 95% to 91%, but we gain a quantifiable privacy guarantee that supports GDPR compliance.” This facilitates informed architectural decisions and avoids post-deployment surprises.

Conclusion

The evolution from formal differential privacy to Clio’s statistical approach reveals a fundamental truth: production-scale privacy protection demands pragmatic engineering over theoretical finesse. Clio succeeds by acknowledging that conversational AI requires context-aware privacy mechanisms – not uniform mathematical noise that destroys semantic meaning.

The path forward for technical leaders navigating this landscape is clear: embrace hybrid architectures. Deploy formal DP where regulatory compliance demands mathematical guarantees (training pipelines, structured data), while leveraging statistical methods like Clio for exploratory analytics and real-time inference.

Start with established frameworks: Opacus for DP-SGD training, and adapt Clio’s four-layer architecture for analytics. Monitor dual metrics religiously – privacy budget consumption alongside utility retention. Most critically, recognize that privacy engineering is iterative. Begin with high-epsilon formal DP (ε=8) or basic statistical filtering, then tighten controls as you master the privacy-utility tradeoff.

The enterprises that will thrive in the AI era are those starting to build privacy into the foundation of their generative AI solutions today. As LLMs become critical infrastructure, privacy-preserving architectures transform from a competitive advantage to an existential requirement.

