October 11, 2023

Amazon Bedrock Is Now Generally Available. Here Is Our Perspective

Authors:

Pavel Borobov, Staff Solutions Architect

Rinat Gareev, Staff Solutions Architect

Stepan Pushkarev, CTO & Co-founder

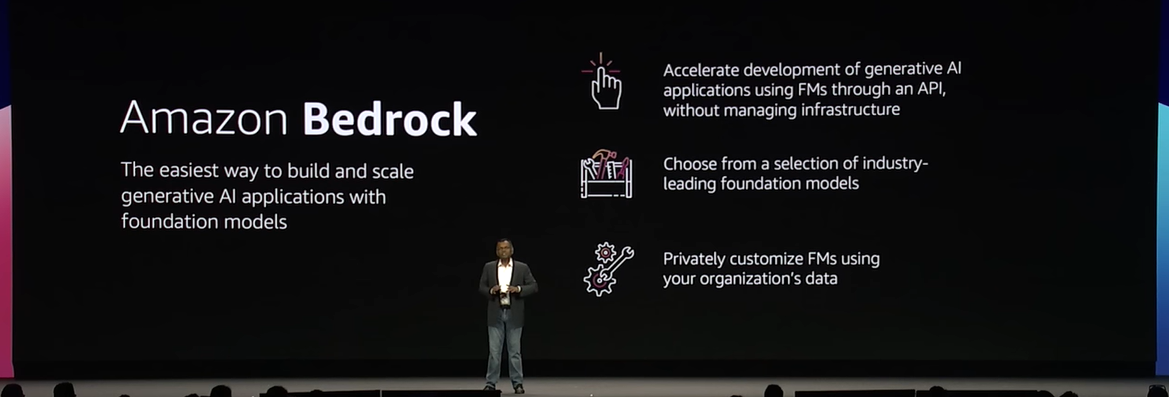

Amazon Web Services (AWS) has just rolled out the general availability of Amazon Bedrock, a fully managed service that is expected to redefine the landscape of generative AI. In this overview, you will learn about Bedrock’s new features and find out how they make it easy to build and scale generative AI applications without the hassle of infrastructure management.

If you are an engineer looking to leverage foundation models for a wide range of AI use cases or an IT executive working on the generative AI strategy for the enterprise, this is a service you cannot afford to miss.

Before we delve into the nuts and bolts of Amazon Bedrock to explore its potential for leveraging generative AI on AWS, we’d like to share some strategic considerations:

- Amazon Bedrock offers a broad selection of natively integrated foundation models from leading AI companies, all treated as first-class citizens. These vendors include AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon itself.

- Full and native integration with the AWS ecosystem enables developers to build production-ready applications from the ground up.

- Bedrock comes with well-architected, enterprise-ready security features, all of which are a part of a comprehensive framework in the AWS ecosystem.

- AWS’s strategic advantage is its ability to progressively reduce costs. By leveraging AWS Inferentia chips designed for high performance, we expect AWS to offer the industry’s lowest cost per inference for Gen AI applications.

- Because pricing versus quality becomes a critical factor in choosing an LLM vendor, Inferentia’s cost-efficiency, coupled with AWS’s pay-as-you-go and provisioned throughput model, enhances the competitive edge of AWS and Amazon Bedrock.

Application Integrations

Customized Chat Experience with Actionable Events

- Overview: Amazon Bedrock Agents enable developers to configure agents that can respond to user input and an organization’s data. These agents orchestrate interactions between various components, including foundation models, data sources, software applications, and user conversations.

- Automated Actions: Agents automatically call APIs to take actions based on user input. When users interact with an agent, the agent can identify actionable events within the conversation and trigger actions accordingly. Behind the scenes, these actions can be routed to AWS Lambda functions, which can execute them against other AWS services and databases.

- Use Cases: Developers can create agents for specific use cases, such as processing insurance claims, making travel reservations, or answering questions related to various tasks.
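
The routing of agent actions to Lambda can be sketched as follows. This is a minimal sketch that assumes the request and response shapes described for agent action groups (`actionGroup`, `apiPath`, `httpMethod`, `parameters`, `messageVersion`); because agents are still in preview, that contract may change. The claims data and the `lookup_claim` helper are hypothetical.

```python
import json

# Hypothetical in-memory data standing in for a real claims database.
_CLAIMS = {"C-100": {"status": "open", "amount": 1250.0}}


def lookup_claim(claim_id):
    """Hypothetical business logic; a real handler would query a database."""
    return _CLAIMS.get(claim_id)


def lambda_handler(event, context):
    # Assumed event shape: the agent passes the matched API path, the HTTP
    # method, and a list of {"name", "type", "value"} parameters.
    params = {p["name"]: p["value"] for p in event.get("parameters", [])}
    claim = lookup_claim(params.get("claimId"))

    status_code = 200 if claim else 404
    body = claim if claim else {"error": "claim not found"}

    # Assumed response shape: echo the routing fields back with the result.
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event.get("actionGroup"),
            "apiPath": event.get("apiPath"),
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": status_code,
            "responseBody": {"application/json": {"body": json.dumps(body)}},
        },
    }
```

Keeping the business logic in a separate function makes it easy to test the handler locally with a hand-written event before wiring it to an agent.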

Knowledge Bases and Retrieval-Augmented Generation (RAG)

- Knowledge Bases: Bedrock Agents can leverage various knowledge bases to enhance their capabilities. Knowledge bases are used to store private data in vector databases.

- RAG Workflow: By using knowledge bases, developers can enable a Retrieval-Augmented Generation (RAG) workflow. RAG combines private data with an LLM to generate context-aware responses to user queries.

- Automated RAG: The knowledge base service automates the setup and implementation of RAG steps, such as data pre-processing and runtime execution. This automation streamlines the process of building intelligent conversational agents.

Integration with Vector Databases

- Vector Databases: Knowledge bases can now be integrated with vector databases. This integration allows developers to load private data into vector indexes, which store the embeddings used for retrieval in RAG.

- Flexible Data Handling: Developers can read data from Amazon S3 buckets, split it into manageable chunks, generate vector embeddings, and store them in vector indexes. This process facilitates the retrieval of relevant documents during user interactions.
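
To make the chunk, embed, store, and retrieve steps concrete, here is a toy, dependency-free sketch. It is not how Bedrock knowledge bases are implemented: the word-count `embed` function is a stand-in for a real embedding model, the in-memory list is a stand-in for a vector index, and all function names are our own.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split a document into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy embedding: a sparse word-count vector (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(documents, max_words=40):
    """The 'vector index' here is just a list of (chunk, embedding) pairs."""
    return [(c, embed(c)) for doc in documents for c in chunk_text(doc, max_words)]


def retrieve(index, query, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query, chunks):
    """Combine retrieved private data with the user question for the LLM."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

In a real deployment the documents would come from Amazon S3, and the embeddings would be produced by an embedding model and stored in a managed vector database.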

Amazon Bedrock Monitoring

Model Invocation Logging

- Logging of Invocation Data: Bedrock can log invocation data, including both model input and model output. This data is valuable for analyzing how the model is used and how it responds to different inputs.

- Multiple Destination Options: Users can configure where the log data will be published. Two supported destinations are Amazon CloudWatch Logs and Amazon S3. This flexibility allows users to choose the storage solution that best suits their needs.
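
A logging configuration that publishes to both destinations might look like the sketch below. It assumes the boto3 `bedrock` client and its `put_model_invocation_logging_configuration` operation; the function names, the default key prefix, and the region are placeholders of ours, and the IAM role must already allow Bedrock to write to the log group.

```python
def build_logging_config(log_group, role_arn, bucket, prefix="bedrock-logs"):
    """Build a loggingConfig payload that targets CloudWatch Logs and S3."""
    return {
        "cloudWatchConfig": {"logGroupName": log_group, "roleArn": role_arn},
        "s3Config": {"bucketName": bucket, "keyPrefix": prefix},
        # Choose which kinds of payloads are delivered to the destinations.
        "textDataDeliveryEnabled": True,
        "imageDataDeliveryEnabled": True,
        "embeddingDataDeliveryEnabled": True,
    }


def enable_invocation_logging(config, region="us-east-1"):
    """Apply the configuration; requires AWS credentials and boto3."""
    import boto3  # imported lazily so the builder above works without AWS access

    bedrock = boto3.client("bedrock", region_name=region)
    bedrock.put_model_invocation_logging_configuration(loggingConfig=config)
```

Either destination block can be dropped from the dictionary if only one target is needed.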

Use Cases for Different Teams

- ML/LLM Ops: The ML and LLM operations teams can use the logged data to analyze model invocations, track model performance, and identify failures or anomalies.

- DevOps: DevOps teams can operationalize model performance monitoring and set up alerting mechanisms using Amazon CloudWatch. This allows them to proactively respond to any issues that may arise during model invocations.
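
As an illustration of the alerting setup, the sketch below builds the arguments for a CloudWatch alarm on server-side invocation errors. It assumes the `AWS/Bedrock` namespace and the `ModelId` dimension; the alarm name, threshold, evaluation window, and SNS topic are arbitrary choices of ours, not recommendations.

```python
def server_error_alarm(model_id, sns_topic_arn, threshold=5):
    """Arguments for an alarm that fires when server errors pile up."""
    return {
        "AlarmName": f"bedrock-server-errors-{model_id}",
        "Namespace": "AWS/Bedrock",
        "MetricName": "InvocationServerErrors",
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "Statistic": "Sum",
        "Period": 300,  # evaluate in 5-minute windows
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",  # no traffic is not an incident
        "AlarmActions": [sns_topic_arn],
    }


# With AWS credentials available, the alarm could be created like this:
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**server_error_alarm(...))
```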

Event-Driven Architecture

- Overview: Amazon EventBridge is used to set up a full-scale, event-driven architecture on top of Amazon Bedrock customization jobs.

- Event Publication: Amazon Bedrock publishes events via Amazon EventBridge whenever there is a change in the state of a model customization job.

- State Change Events: These events represent state changes in the customization jobs, providing information about which job has changed and what its current state is.

Automated Response to State Changes

- Rule-Based Automation: EventBridge enables users to define rules and targets for specific event patterns. Rules are configured to match certain criteria in incoming events.

- Automated Actions: When a state change event from Amazon Bedrock matches a predefined rule, EventBridge triggers automated actions, such as invoking Lambda functions, publishing to Amazon SQS, or sending notifications via Amazon SNS.

- Handling Event Data: Users can design the rules to respond to state changes with specific actions based on the contents of the event, allowing for a highly customized and automated response.
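
The rule-and-target setup described above can be sketched as follows. The event source `aws.bedrock` and the detail type `Model Customization Job State Change` reflect our reading of the Bedrock documentation; the `jobStatus` and `jobName` detail fields are assumptions to verify against a real event, and the function names are ours.

```python
import json


def customization_job_pattern(statuses=None):
    """Event pattern matching Bedrock customization job state changes."""
    pattern = {
        "source": ["aws.bedrock"],
        "detail-type": ["Model Customization Job State Change"],
    }
    if statuses:
        # Assumed detail field name; narrows the rule to specific states.
        pattern["detail"] = {"jobStatus": list(statuses)}
    return pattern


def describe_event(event):
    """Turn an incoming event into a short, human-readable message."""
    detail = event.get("detail", {})
    job = detail.get("jobName", "unknown job")
    status = detail.get("jobStatus", "UNKNOWN")
    return f"{job} is now {status}"


def register_rule(rule_name, target_arn, statuses=None):
    """Create the rule and attach one target; requires AWS credentials."""
    import boto3  # imported lazily so the helpers above run without AWS access

    events = boto3.client("events")
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(customization_job_pattern(statuses)),
        State="ENABLED",
    )
    events.put_targets(Rule=rule_name, Targets=[{"Id": "1", "Arn": target_arn}])
```

The target ARN can point to a Lambda function, an SQS queue, or an SNS topic, each of which also needs a resource policy that lets EventBridge deliver to it.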

Real-Time Event Delivery

- Near Real-Time: Events from Amazon Bedrock are delivered to EventBridge in near real-time. Users receive timely notifications and can respond promptly to state changes in model customization jobs.

- Eliminating API Rate Limit Issues: By using EventBridge to receive job status updates, users do not need to invoke the GetModelCustomizationJob API repeatedly. This removes concerns about API rate limits and API updates, and avoids spending additional compute resources on polling.

Cost-Effective Event Processing

- No Cost for Receiving Events: There is no cost associated with receiving AWS events from Amazon EventBridge. This cost-effective approach encourages the adoption of event-driven architectures.

The integration of Amazon EventBridge with Amazon Bedrock facilitates the creation of event-driven architectures for ML/LLM Ops environments. It enables real-time monitoring of state changes in model customization jobs and automates responses based on predefined rules. This approach enhances the agility and efficiency of ML/LLM Ops workflows, enabling rapid responses to changes in ML model customization jobs and reducing the reliance on manual API calls.

Effective Performance Monitoring

- Overview: Integration with Amazon CloudWatch allows for effective monitoring of Amazon Bedrock’s performance and health.

- Real-time Metrics: CloudWatch collects raw data and processes it into readable, near real-time metrics. This provides immediate insights into the performance of managed model providers or customized models.

Key Runtime Metrics

- Invocations: Count of requests to the InvokeModel or InvokeModelWithResponseStream API operations

- InvocationLatency: Latency of the invocations in milliseconds

- InvocationClientErrors: Count of invocations resulting in client-side errors

- InvocationServerErrors: Count of invocations resulting in AWS server-side errors

- InputTokenCount: Count of tokens in text input

- OutputImageCount: Count of output images
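
As an example of reading one of these metrics, the sketch below builds a `get_metric_statistics` query for `InvocationLatency` and summarizes the returned datapoints. It assumes the `AWS/Bedrock` namespace and the `ModelId` dimension; the helper names, the five-minute period, and the one-hour window are our own choices.

```python
from datetime import datetime, timedelta, timezone


def latency_query(model_id, hours=1, now=None):
    """Arguments for CloudWatch get_metric_statistics over a recent window."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Bedrock",
        "MetricName": "InvocationLatency",
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # one datapoint per 5 minutes
        "Statistics": ["Average", "Maximum"],
    }


def worst_latency_ms(datapoints):
    """Largest 'Maximum' value across datapoints, or None if there are none."""
    values = [dp["Maximum"] for dp in datapoints if "Maximum" in dp]
    return max(values) if values else None


# With AWS credentials available:
#   import boto3
#   resp = boto3.client("cloudwatch").get_metric_statistics(**latency_query("..."))
#   print(worst_latency_ms(resp["Datapoints"]))
```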

Amazon Bedrock Security

Data Encryption

- Data at Rest: Bedrock employs encryption to protect data at rest. Data stored on disk or in storage systems is encrypted, ensuring that even if physical storage is compromised, the data remains secure.

- Data in Transit: Data transferred between services and components within Bedrock is encrypted using TLS 1.2. This encryption ensures that data is protected while in transit between different parts of the system.

Key Management

- AWS Key Management Service (KMS): Bedrock allows users to manage encryption keys using AWS KMS. They can choose to use AWS managed keys or customer-managed keys for encryption.

- Customer Managed Keys: Users can opt to encrypt model artifacts from customization jobs using customer-managed keys. These keys are created, owned, and managed by users within their AWS accounts.

Model Customization Inputs and Outputs

- Encryption of Input Documents: Input documents, such as training and validation data, can be encrypted at rest in Amazon S3 buckets using Amazon S3 SSE-S3 server-side encryption. This provides an additional layer of security for sensitive training data.

- Encryption of Metrics: Upon completion of a model customization job, job metrics are stored in Amazon S3. Users can encrypt these metrics using the same SSE-S3 server-side encryption.

Protection of Jobs Using a VPC

- VPC Configuration: We recommend creating a Virtual Private Cloud (VPC) to control access to data and job metrics. The VPC helps secure data from external access and enables monitoring of network traffic using VPC Flow Logs.

- VPC Endpoint: When creating a VPC with no internet access, a VPC endpoint should be established to allow customization jobs to access S3 buckets containing data and model artifacts. This ensures secure data transfer without exposure to the public internet.
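
Putting the encryption and VPC options together, a customization job request might be assembled as in the sketch below. It assumes the boto3 `create_model_customization_job` operation with its `vpcConfig` and `customModelKmsKeyId` parameters; the builder function and its argument names are ours, and valid hyperparameters depend on the base model, so they are passed in rather than guessed.

```python
def customization_job_request(job_name, model_name, role_arn, base_model_id,
                              training_s3_uri, output_s3_uri, hyperparameters,
                              subnet_ids, security_group_ids, kms_key_arn=None):
    """Build arguments for a customization job that runs inside a VPC."""
    request = {
        "jobName": job_name,
        "customModelName": model_name,
        "roleArn": role_arn,
        "baseModelIdentifier": base_model_id,
        "trainingDataConfig": {"s3Uri": training_s3_uri},
        "outputDataConfig": {"s3Uri": output_s3_uri},
        "hyperParameters": dict(hyperparameters),
        # Network isolation: the job reaches S3 through the VPC's endpoint.
        "vpcConfig": {
            "subnetIds": list(subnet_ids),
            "securityGroupIds": list(security_group_ids),
        },
    }
    if kms_key_arn:
        # Encrypt the resulting model artifacts with a customer-managed key.
        request["customModelKmsKeyId"] = kms_key_arn
    return request


# With AWS credentials available:
#   import boto3
#   boto3.client("bedrock").create_model_customization_job(**request)
```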

Identity and Access Management

- Security Groups: Security groups are used to establish rules for controlling Amazon Bedrock’s access to VPC resources. This helps control the network traffic and access permissions within the VPC.

Interface VPC Endpoints (AWS PrivateLink)

- Private Connection: AWS PrivateLink is used to create a private connection between the user’s VPC and Amazon Bedrock. This connection allows access to Amazon Bedrock without the need for an internet gateway, NAT device, VPN connection, or AWS Direct Connect connection.

- Secure Access: Instances in the VPC can access Amazon Bedrock without requiring public IP addresses. This enhances the security of the environment.
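
Creating such an interface endpoint could look like the sketch below, which builds the arguments for the EC2 `create_vpc_endpoint` operation. The service names `com.amazonaws.<region>.bedrock` and `com.amazonaws.<region>.bedrock-runtime` are our understanding of the endpoint naming and should be confirmed for the target region; the helper name and defaults are ours.

```python
def bedrock_endpoint_request(vpc_id, subnet_ids, security_group_ids,
                             region="us-east-1", runtime=True):
    """Arguments for an interface VPC endpoint to Amazon Bedrock."""
    # The runtime service handles model invocations; the other one handles
    # control-plane calls such as managing customization jobs.
    service = "bedrock-runtime" if runtime else "bedrock"
    return {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.{service}",
        "SubnetIds": list(subnet_ids),
        "SecurityGroupIds": list(security_group_ids),
        # Lets SDK calls resolve to the private endpoint without code changes.
        "PrivateDnsEnabled": True,
    }


# With AWS credentials available:
#   import boto3
#   boto3.client("ec2").create_vpc_endpoint(**bedrock_endpoint_request(...))
```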

Conclusion

Amazon Bedrock has the potential to become a game-changing service for developers, ML/LLM Ops, and DevOps teams alike. While its agents are still in preview, Bedrock can already help developers to create customized chat experiences. Its compatibility with multiple vector databases allows for the creation of knowledge bases, enhancing RAG workflows.

Bedrock’s provisioned throughput ensures predictable scaling, while its integration with EventBridge lays the foundation for full-scale, event-driven architectures. The service also comes with rich operational tooling, accessible via CloudWatch, that caters to both ML/LLM Ops for model invocation analysis and DevOps for performance monitoring and alerting.

On the security front, Bedrock offers VPC configurations, KMS encryption, Interface VPC Endpoints via AWS PrivateLink, and more. Every aspect of data protection is addressed.

Amazon Bedrock offers a comprehensive, secure, and customizable service at an affordable price. Bedrock promises to simplify the development of LLM applications while maintaining privacy and security.

The outlook is just so good!