October 24, 2023

The Democratization of Generative AI on AWS

Author: Provectus, AI-first consultancy and solutions provider.

Generative AI is more than just a trend; it is becoming a business imperative. Organizations across the board, from large enterprises to small businesses, are rushing to find out how generative AI can offer them a competitive edge, enhance customer value, and drive success.

The urgency to adopt generative AI is backed by compelling data and research. A 2023 study co-authored by researchers at the University of Pennsylvania estimates that approximately 80% of the US workforce could have at least 10% of their work tasks affected by large language models (LLMs), a type of foundation model (FM) that underpins many generative AI applications. LLMs alone could expedite about 15% of all work tasks without compromising quality. This figure rises to 47-56% when user-friendly, LLM-based software and tooling are built on top of the models and integrated into operations end to end.

On a global scale, McKinsey estimates that generative AI could add between $2.6 trillion and $4.4 trillion in value annually across 63 specific use cases, amplifying the overall impact of AI by 15-40%. These estimates could roughly double if generative AI is also embedded into software that is already in use.

In practical terms, companies that adopt generative AI early, in whatever form, stand to gain a significant edge, while late adopters will struggle to catch up. The advantages of early adoption are simply too substantial to ignore.

As demand for generative AI surges, it becomes crucial for organizations to prepare their platforms and ecosystems for widespread, almost ubiquitous adoption of AI/ML. Amazon Web Services (AWS) is among the key players spearheading the effort to democratize generative AI.

AWS Background and First Steps

AWS has always been at the forefront of AI innovation. With an extensive suite of AI/ML services and supporting cloud infrastructure, AWS facilitates the entire lifecycle of AI development — from data collection and preparation to model training and evaluation, to deployment and support. It enables businesses to radically transform their operations, making AI not just a theoretical concept but a practical solution for real-world challenges.

When it comes to generative AI, AWS is strongly positioned, too. Unlike its competitors, AWS offers businesses an open and flexible environment to use foundation models, rather than locking them into a strict corporate ecosystem.

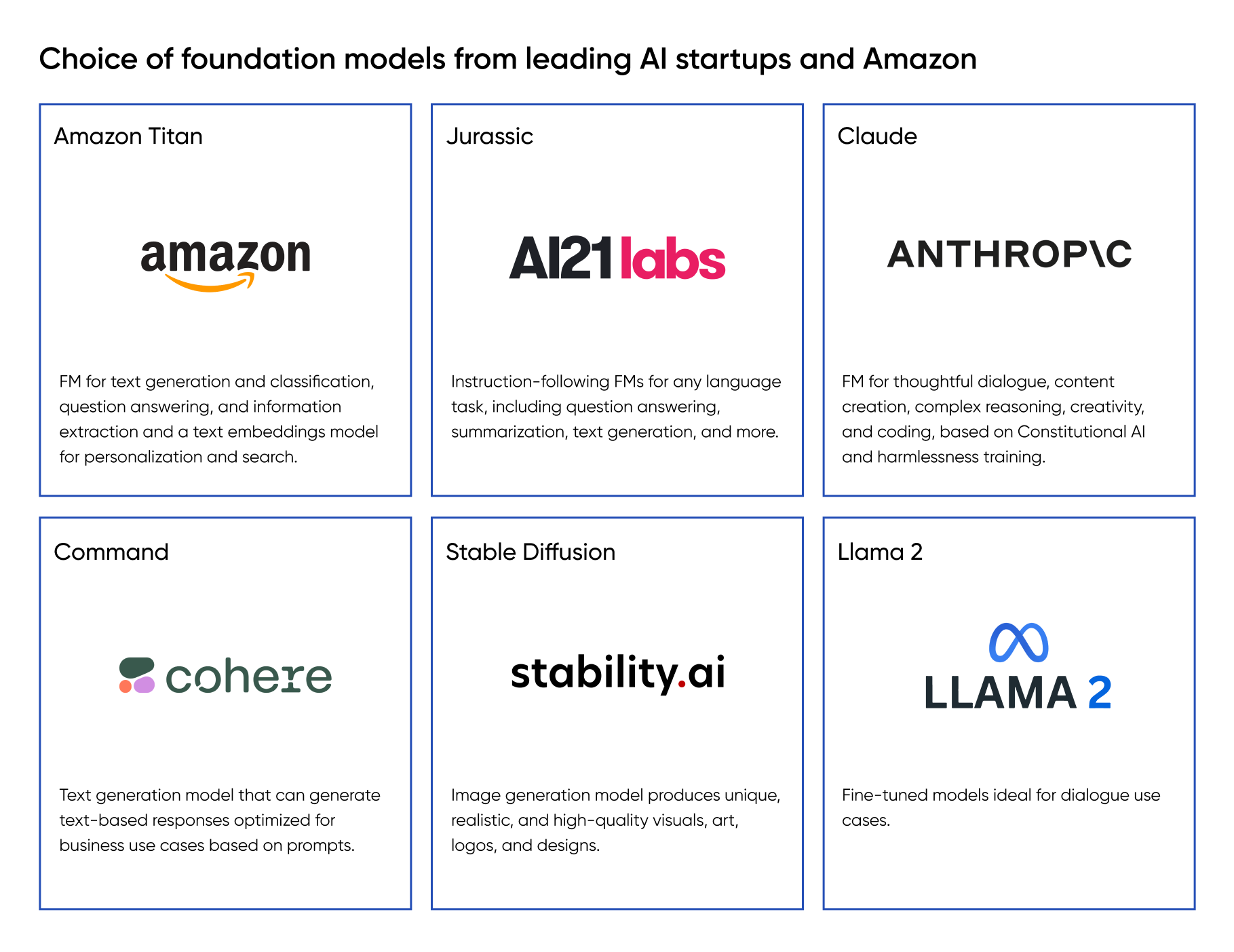

As of September 2023, AWS collaborates with Hugging Face, making it easy for engineers to deploy and fine-tune pre-trained natural language processing (NLP) models on Amazon SageMaker. Amazon Bedrock, a fully managed foundation model service, already supports Cohere’s flagship Command model for text generation and its Embed model for text understanding tasks such as search and classification. The latest foundation models from Anthropic, AI21 Labs, and Stability AI are also available on Bedrock. By collaborating with multiple FM providers, AWS strives to combine its AI and cloud expertise with the agility and flexibility of open source.

This strategy matters because generative AI is not just a collection of specific algorithms. It relies on extra-large neural network models that identify patterns and structures in existing data to generate new, original content, a task that is inherently challenging and resource-intensive. Open-source providers will enhance the AWS generative AI offering with additional data, use cases, and specific applications of FMs, enabling businesses to build and scale generative AI rapidly and with fewer constraints.

Matt Wood, Vice President of Product at AWS, shared more specifics of this open-source-friendly strategy in a recent interview. According to Wood, AWS will provide “an AI model for everything” through a combination of:

- Unmatched availability of foundation models. AWS offers a diverse range of models and makes them easy to deploy. Whether organizations opt for Amazon Bedrock’s curated models and API or explore the extensive model catalog in Amazon SageMaker JumpStart, they can get started in minutes. Bedrock’s selection of models will continue to expand.

- Focus on diverse operating models. AWS caters to the needs of various businesses, whether they require a simple API, extensive control over the model lifecycle, or a blend of both depending on their use case. From the user-friendly interface of Bedrock to the advanced capabilities of SageMaker, AWS has a specific solution at hand.

- Custom data utilization. Enterprises can use their unique data sets to fine-tune, retrain, or pre-train foundation models on AWS, which results in highly differentiated AI/ML solutions. This is already evident in several industry- and business-specific models built on AWS.

These benefits, combined with AWS’s range of infrastructure options, give businesses multiple entry points and pathways to start using generative AI.

Unlocking the Democratization of Generative AI: The Latest Announcements and Updates

Even before the generative AI hype, AWS had a rich legacy in the field. Services such as Amazon Alexa, Amazon Textract, Amazon Transcribe, Amazon Comprehend, and Amazon QuickSight Q were revolutionary, and they now serve as essential building blocks for creating the datasets to train foundation models.

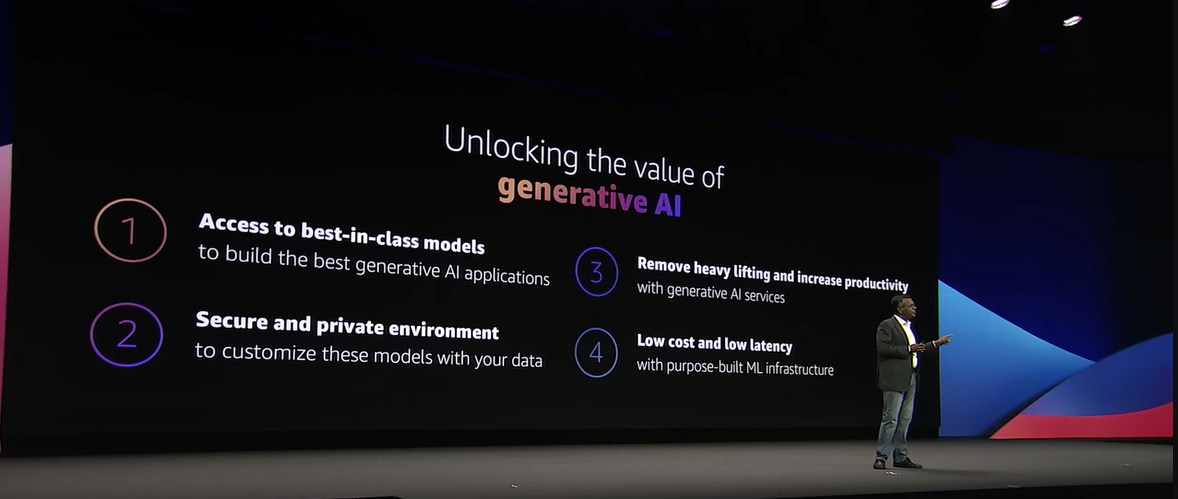

As soon as generative AI emerged as the next big thing, AWS responded with a series of strategic announcements, some of which were delivered by Swami Sivasubramanian, VP for Data and Machine Learning Services, at the AWS Summit in New York.

First, Swami emphasized that AWS intends to make generative AI accessible to every business. By offering a range of highly customizable and scalable solutions, AWS will democratize the playing field, enabling both small startups and large enterprises to harness the transformative power of generative AI.

Second, he reaffirmed that AWS will pursue a holistic approach to generative AI, encompassing cutting-edge algorithms, robust data governance, and ethical guidelines. This ensures that businesses can deploy generative AI solutions that are technologically advanced, ethically sound, and compliant with data privacy regulations.

These statements signal AWS’s long-term strategy to remain the leader in AI innovation, with a focus on generative AI, foundation models, and FM-powered services. The many new services and service updates announced during and after the summit underscore this commitment.

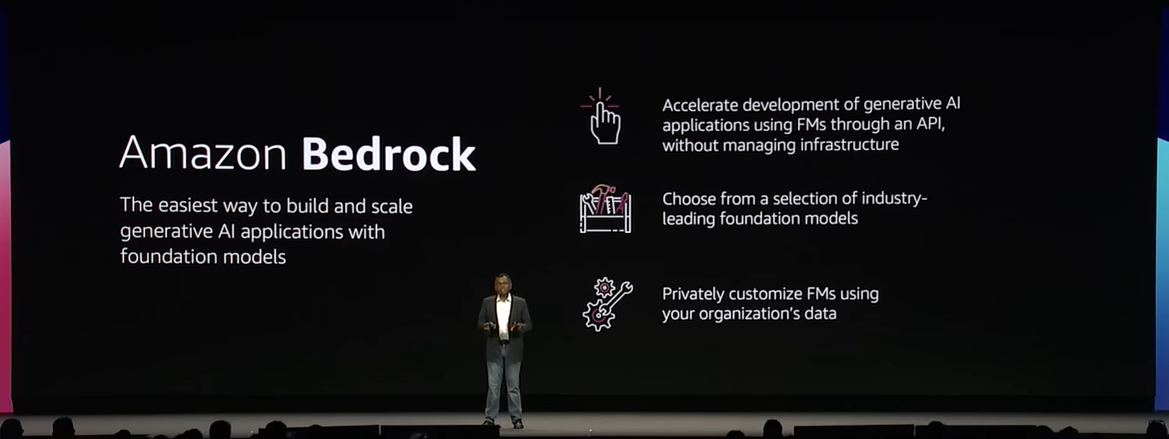

Amazon Bedrock

Amazon Bedrock is a fully managed service that makes foundation models from AWS and leading AI startups easily available through an API. Designed to democratize generative AI for business, the service offers seamless access to Amazon Titan’s FMs, Cohere’s Command and Embed models, AI21 Labs’ Jurassic-2, Anthropic’s Claude, Stability AI’s text-to-image models, and Meta’s Llama 2.

Built on a serverless architecture, Bedrock simplifies the integration of these models within the familiar AWS ecosystem (e.g., the Amazon SageMaker suite), helping businesses scale their generative AI applications with ease. It also offers specialized capabilities such as multilingual support, advanced text summarization, and photorealistic image generation.

From the generative AI use case standpoint, Amazon Bedrock supports text generation, virtual assistants, text summarization, image generation, and search.
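
To make the “available through an API” point concrete, here is a minimal Python sketch of calling a Titan Text model through Bedrock’s runtime API with boto3. The model ID `amazon.titan-text-express-v1`, the Region, and the generation parameters are assumptions to adjust for your account, and the helper names are hypothetical. The request and response fields follow the Titan Text format as we understand it, so verify them against the current Bedrock documentation before relying on them.

```python
import json

# Assumed model ID for Amazon Titan Text Express; check which model IDs
# are enabled for your account and Region.
TITAN_TEXT_MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_text_body(prompt, max_tokens=512, temperature=0.5, top_p=0.9):
    """Build the JSON request body for a Titan Text model (hypothetical helper)."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": top_p,
            "stopSequences": [],
        },
    })


def generate_text(prompt, region="us-east-1"):
    """Call Bedrock's InvokeModel API; requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the body builder works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=TITAN_TEXT_MODEL_ID,
        body=build_titan_text_body(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Titan Text returns a list of results, each with an "outputText" field.
    return payload["results"][0]["outputText"]


if __name__ == "__main__":
    # Print the request body only; no AWS call is made here.
    print(build_titan_text_body("Summarize our Q3 support tickets in three bullets."))
```

Only `generate_text` talks to AWS, and it also needs model access enabled in the Bedrock console. Keeping body construction in a separate function makes it easy to test prompts and parameters offline.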

What sets Bedrock apart is its focus on model customization. With minimal input (just a few labeled examples of company data stored in Amazon S3), Bedrock can fine-tune models to generate content that meets specific business objectives. For example, e-commerce businesses can use Cohere’s flagship text-generation model, Command, to generate customized product descriptions that capture consumer attention and drive conversions.
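
Labeled examples for fine-tuning are typically supplied as a JSON Lines file in S3, one prompt/completion pair per line. The sketch below, under that assumption, converts a few hypothetical product records into such a file’s contents. The exact record schema depends on the model being customized, so check the Bedrock model customization documentation before uploading; the bucket path in the comment is a placeholder.

```python
import json

# Hypothetical labeled examples: product attributes -> approved marketing copy.
EXAMPLES = [
    {
        "attributes": "Trail running shoe; waterproof; 280 g",
        "description": "Stay dry on wet trails with a 280 g waterproof runner.",
    },
    {
        "attributes": "Insulated bottle; 750 ml; keeps drinks cold 24 h",
        "description": "A 750 ml insulated bottle that keeps drinks cold for 24 hours.",
    },
]


def to_jsonl(examples):
    """Convert labeled examples into prompt/completion JSON Lines text."""
    lines = []
    for ex in examples:
        record = {
            "prompt": f"Write a product description for: {ex['attributes']}",
            "completion": ex["description"],
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # In practice this text would be saved as train.jsonl, uploaded to a
    # placeholder location such as s3://your-bucket/train.jsonl, and
    # referenced when creating a Bedrock model customization job.
    print(to_jsonl(EXAMPLES), end="")
```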

Note: When a customer fine-tunes a foundation model, Amazon Bedrock creates a private copy of the selected model and trains that copy, ensuring that enterprise data remains within AWS. This prevents model providers from using customer data to train their own FMs, a critical consideration for data privacy, security, and compliance.

Amazon Titan

Amazon Titan is a family of FMs pre-trained by AWS on large datasets. Titan includes two distinct LLMs designed to revolutionize text-related processes in a business environment.

- Titan Text is a generative LLM for tasks such as text summarization, text generation, and information extraction. The model can automate labor-intensive text tasks, freeing up the time and resources of white-collar employees. For instance, service providers can use Titan Text to automate the creation of customer quotes and other documentation, reducing manual effort.

- Titan Embeddings is an LLM that specializes in converting text inputs into numerical representations known as embeddings. Rather than generating new text, it encodes the meaning of its input so that semantically similar texts map to nearby vectors. Embeddings represent relationships within content (text here, and images, audio, or video with suitable models) in an ML-friendly format, making it possible to generate contextually relevant responses. By comparing embeddings, businesses can refine user experience and improve the accuracy of content recommendations and search.
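
Comparing embeddings usually means measuring how closely two vectors point in the same direction, for example with cosine similarity. The sketch below uses tiny hypothetical vectors in place of real Titan Embeddings output (which is far longer) to show how similarity scores can rank catalog items for search or recommendations.

```python
import math

# Hypothetical 4-dimensional vectors standing in for real embeddings.
# A real embeddings model returns much longer vectors.
EMBEDDINGS = {
    "waterproof hiking boots": [0.9, 0.1, 0.3, 0.0],
    "rain-resistant trail shoes": [0.8, 0.2, 0.4, 0.1],
    "stainless steel saucepan": [0.0, 0.9, 0.1, 0.7],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank(query_vec, catalog):
    """Return (name, score) pairs sorted from most to least similar."""
    scored = [(name, cosine_similarity(query_vec, vec)) for name, vec in catalog.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    query = EMBEDDINGS["waterproof hiking boots"]
    for name, score in rank(query, EMBEDDINGS):
        print(f"{score:.3f}  {name}")
```

With these toy vectors, the trail shoes score close to the boots while the saucepan scores near zero. In production, the vectors would come from the embeddings model and the ranking would typically be delegated to a vector store rather than a Python loop.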

AWS diligently follows the principles of responsible AI in its Titan LLMs. The models come with built-in features for detecting and removing harmful content, rejecting inappropriate user inputs, and filtering outputs based on content guidelines. Businesses can deploy Titan’s capabilities with confidence, knowing that ethical considerations are integral to its technology.

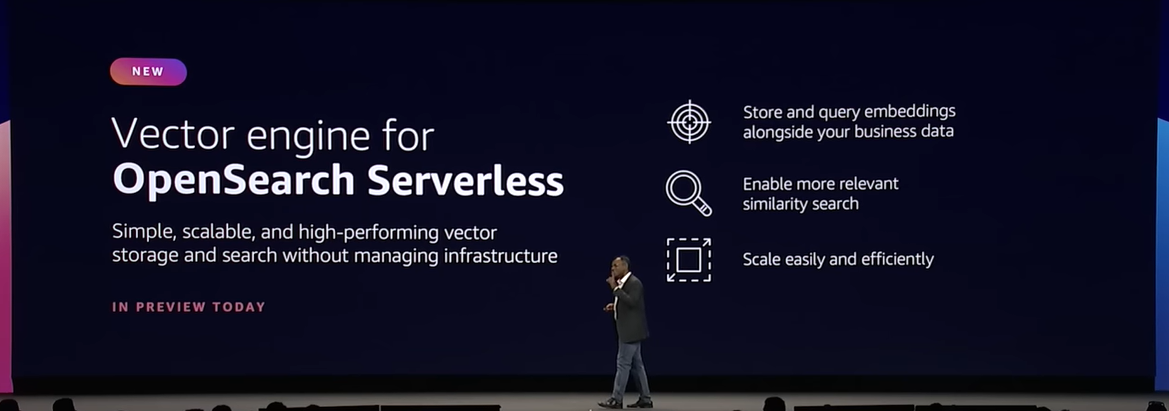

Vector Engine Support for Amazon OpenSearch Serverless

Amazon Titan is not the only AWS service that can democratize generative AI-powered smart search for business. A vector engine is now also available in Amazon OpenSearch Serverless.

Amazon OpenSearch Serverless is a serverless option in Amazon OpenSearch Service. With a new vector engine available in the service, engineers get a simple, scalable, and high-performing solution for building AI/ML-augmented search experiences and generative AI applications. They do not have to manage a separate vector database infrastructure, which reduces engineering complexity and operational overhead.
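
As a sketch of what using the vector engine can look like, the Python below builds an index definition with a `knn_vector` field and a k-nearest-neighbor query in OpenSearch’s k-NN query syntax. The field name is hypothetical, the 1,536-dimension size is chosen to match Titan Embeddings text vectors, and the HNSW/Faiss method settings are assumptions; check which engines and parameters your OpenSearch Serverless vector collection supports.

```python
import json

# Assumed values: the field name is hypothetical, and the dimension is
# chosen to match the vector length of the embeddings model in use.
VECTOR_FIELD = "product_embedding"
DIMENSION = 1536


def index_body():
    """Index settings and mapping with one k-NN vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                VECTOR_FIELD: {
                    "type": "knn_vector",
                    "dimension": DIMENSION,
                    "method": {"name": "hnsw", "engine": "faiss", "space_type": "l2"},
                },
                "title": {"type": "text"},
            }
        },
    }


def knn_query(vector, k=5):
    """Query body returning the k documents nearest to the given vector."""
    if len(vector) != DIMENSION:
        raise ValueError(f"expected {DIMENSION} values, got {len(vector)}")
    return {
        "size": k,
        "query": {"knn": {VECTOR_FIELD: {"vector": vector, "k": k}}},
    }


if __name__ == "__main__":
    print(json.dumps(index_body(), indent=2))
    query = knn_query([0.0] * DIMENSION, k=3)
    print(json.dumps(query)[:120])  # truncated: the vector has 1,536 values
```

These dictionaries would be sent as request bodies, for example via `indices.create` and `search` in the opensearch-py client with requests signed for the `aoss` service. That requires a vector search collection and matching data access policies, which are not shown here.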

Generative Business Intelligence in Amazon QuickSight

Amazon QuickSight is a cloud-native, serverless business intelligence (BI) service with native AI/ML integrations. It has long been a go-to solution for organizations looking for capabilities to easily query and display their data as valuable insights for informed decision-making.

Now, QuickSight comes with integrated generative AI capabilities, called “Generative BI,” powered by Amazon Bedrock. This integration is expected to minimize data silos within organizations, foster easier collaboration, and expedite data-driven decisions.

One of the standout features of this integration is the use of natural language prompts. Analysts can author or fine-tune dashboards using everyday language, making the platform more accessible than ever. Business users can swiftly share insights, complete with compelling visuals, all within a matter of seconds.

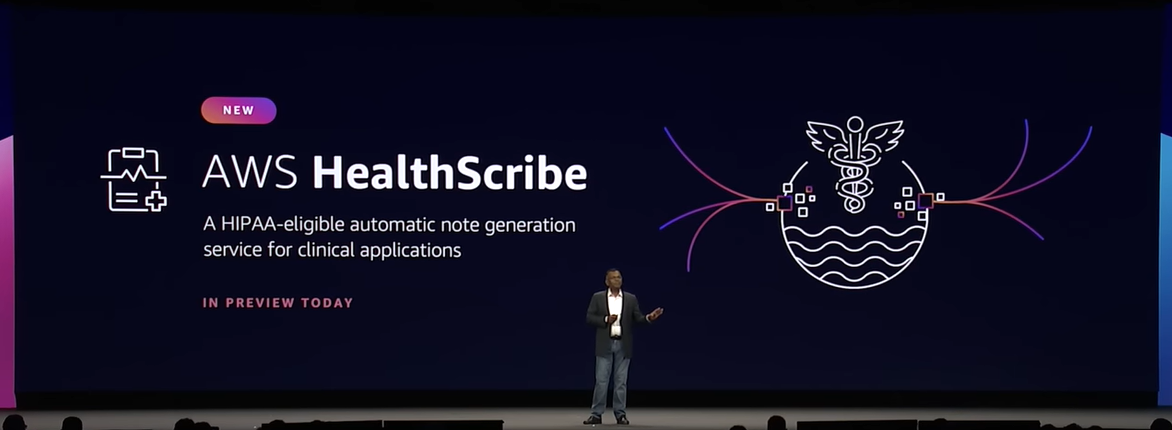

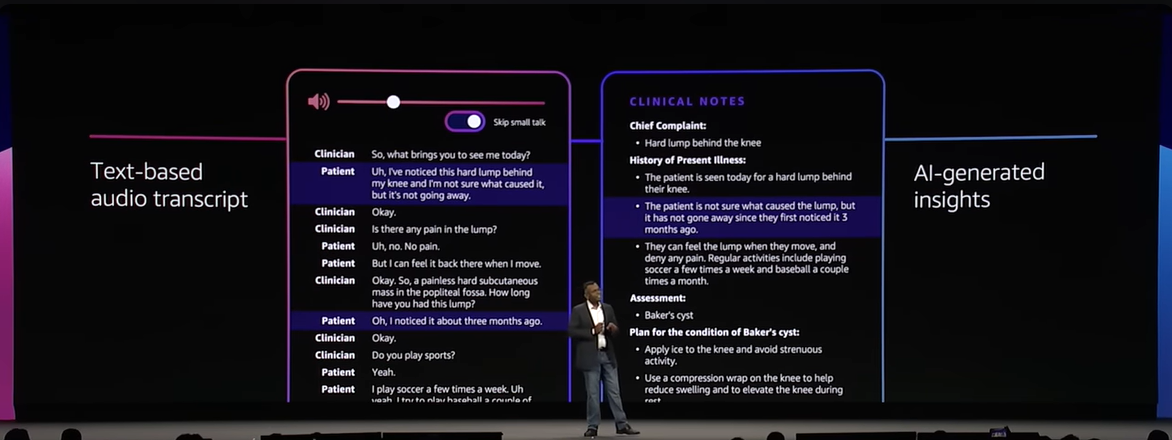

AWS HealthScribe

AWS HealthScribe is a HIPAA-eligible service for building clinical applications that automatically generate clinical notes by analyzing patient-clinician conversations. Now, the service will leverage generative AI to unlock new dimensions of paperwork efficiency and enable clinicians to focus more on patient care.

As announced, AWS HealthScribe will combine its speech recognition technology with Amazon Bedrock’s generative AI capabilities, to accelerate and facilitate note-taking for medical professionals.

The service not only automates the transcription process, but also generates easy-to-review clinical notes. What sets it apart is its robust security and privacy features, specifically designed to safeguard sensitive patient data.

New Amazon EC2 Instances

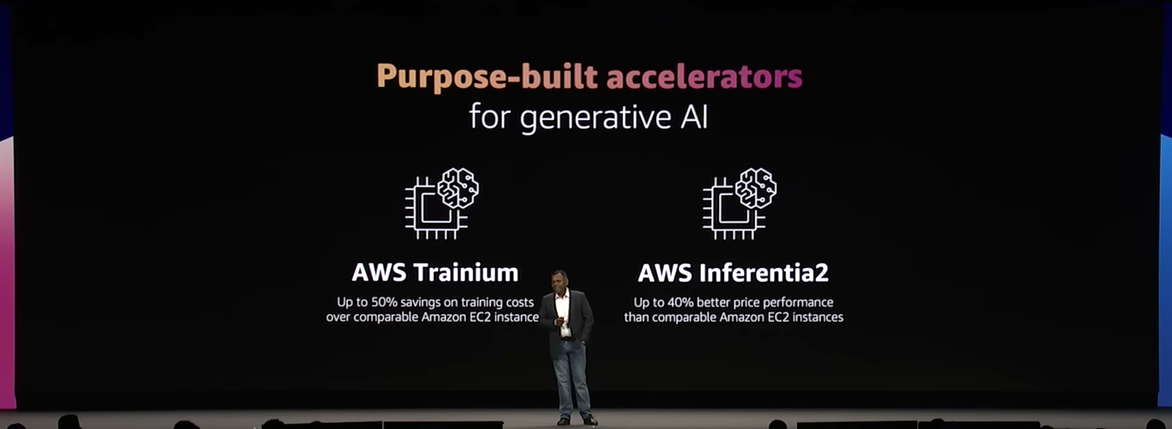

The democratization of generative AI extends beyond merely offering engineer- and business-friendly services; it also entails supplying the raw compute power and infrastructure necessary for the development and deployment of generative AI applications in the real world. For that purpose, AWS has expanded its Amazon EC2 instance offerings to include Trn1n and Inf2 instances, designed to provide cost-effective cloud infrastructure for generative AI applications.

Trn1n instances, powered by AWS Trainium, offer up to 50% savings on training costs over comparable EC2 instances and are built to scale, making them well suited to large ML training workloads. Inf2 instances, powered by AWS Inferentia2, are optimized for large-scale generative AI inference, delivering higher throughput and lower latency with up to 40% better price performance than comparable EC2 instances.

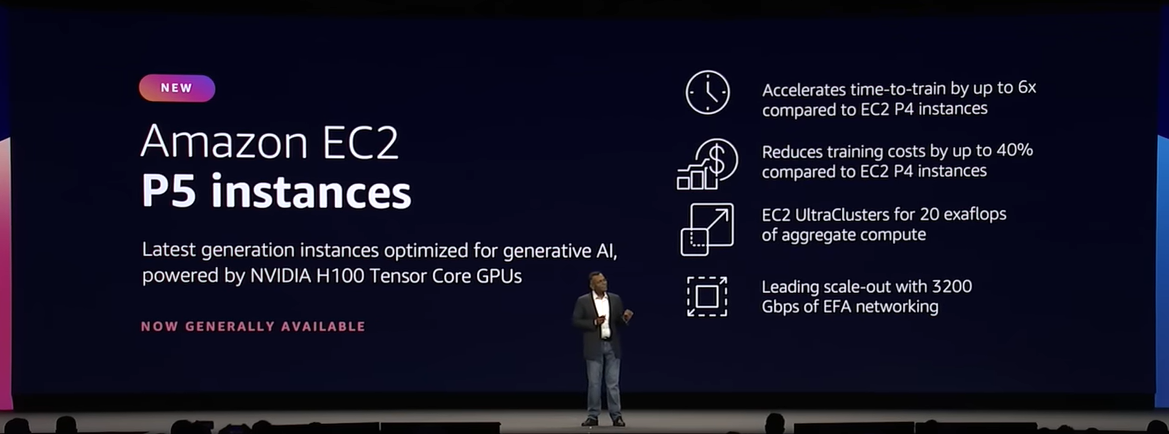

Building on this momentum, Amazon has launched Amazon EC2 P5 instances, which harness the power of NVIDIA H100 Tensor Core GPUs. These GPUs are optimized for training LLMs and generative AI applications, supporting such use cases as question answering, code generation, video and image generation, speech recognition, and more.

AWS is the first cloud provider to offer NVIDIA’s H100 GPUs in production, setting a new standard for training and running inference on increasingly complex LLMs and compute-intensive generative AI applications. With access to H100 GPUs, businesses can train their own LLMs and FMs faster than ever, accelerating time-to-market for AI solutions.

Amazon CodeWhisperer

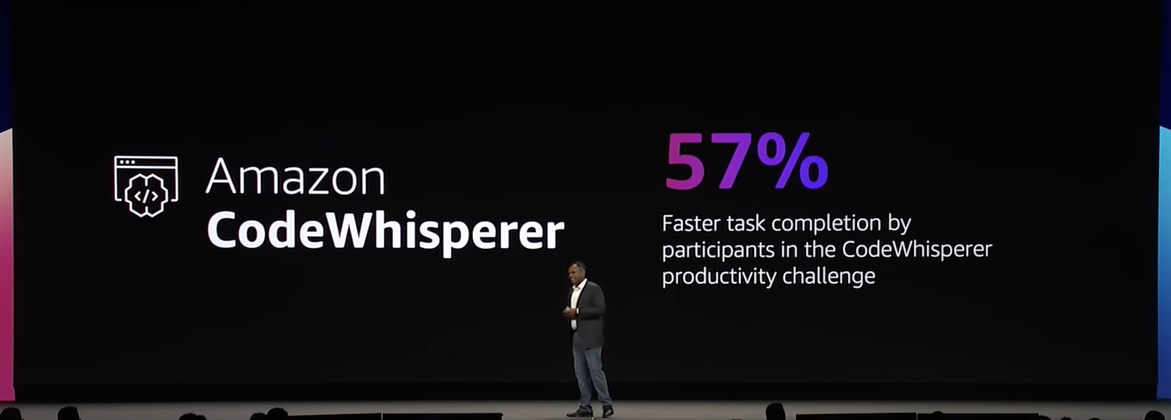

Speaking of engineering-friendly generative AI applications, AWS has released another expansion of Amazon CodeWhisperer, an AI-powered coding companion.

Using generative AI, CodeWhisperer offers real-time code suggestions based on natural language comments and the developer’s prior code within their integrated development environment (IDE). The tool supports multiple programming languages and integrates with popular IDEs such as VS Code and IntelliJ IDEA. It is trained on both publicly available code and Amazon’s own codebase, which helps make its suggestions relevant and accurate.

In addition to its code generation capabilities, CodeWhisperer incorporates security scanning features that detect vulnerabilities and offer remediation suggestions. Designed with ethical considerations in mind, the service can filter out biased or unfair suggestions to promote responsible code generation.

Going Beyond Cloud Services: AWS Infrastructure On-Premises

AWS offers businesses a comprehensive suite of cloud generative AI services. Companies gain access to rigorously tested foundation models, eliminating the need to develop in-house solutions or rely on third parties. AWS alleviates the infrastructure burden, allowing businesses to focus on innovation rather than technical setup. It enables customization of generative AI models to meet specific business needs, while prioritizing data security and compliance.

But AWS offers more than cloud-only services. It also provides on-premises “hybrid” infrastructure solutions, which are critically important when considering the difference between custom and fine-tuned foundation models, and their respective use cases.

Custom models provide nearly unlimited flexibility, but they are expensive to build and use. Their development and deployment require top-tier talent and specialized know-how, making them a complex option for businesses looking to integrate AI capabilities. On the other hand, fine-tuned models offer a budget-friendly and quick-to-deploy solution. But these models offer limited flexibility as they are dependent on a third-party core model. Using fine-tuned models can also raise data privacy concerns, as data is processed through an external model.

AWS combines the best of both worlds, mitigating most drawbacks of these two FM types. It equips businesses with specialized tools to bring generative AI development closer to their premises, elevating data security and privacy to an unprecedented level.

AWS Outposts is one such tool. It is a family of fully managed solutions that bring AWS infrastructure and services directly to businesses’ on-premises locations, for a consistent hybrid experience. Available in various form factors, from 1U and 2U servers to 42U racks and multi-rack deployments, AWS Outposts makes it easy to run native AWS services locally. Outposts infrastructure can help reduce time, resources, operational risk, and maintenance downtime.

For instance, with AWS Outposts, applications that require low-latency compute, such as near real-time and real-time generative AI applications, can run locally, close to on-premises systems and end users, so that users get responsive, interactive applications without lag.

Data residency is another critical concern. Businesses in such highly regulated industries as financial services, healthcare, and oil and gas can rely on AWS Outposts to maintain full control over where their data resides, making it easier to comply with local laws and regulations.

On top of that, AWS Outposts enables local data processing for such use cases as data lakes and ML model training, while offering the flexibility to move data to the cloud for long-term storage. This is particularly useful for businesses dealing with large, difficult-to-migrate data sets constrained by cost, size, or bandwidth.

In practice, this means that AWS covers generative AI development and utilization needs end to end: from hardware and infrastructure, to powerful engineering services, to use-case-specific services and models.

AWS Legacy and the Future of Generative AI

AWS is a formidable player in the generative AI niche, offering an extensive suite of services that cater to both businesses and engineers. Its advanced infrastructure, bolstered by cutting-edge hardware such as NVIDIA H100 GPUs and AWS Trainium, provides an unparalleled foundation for AI/ML applications.

“By harnessing the generative AI capabilities provided by AWS, businesses can create a flywheel effect, to propel them ahead of competitors. Through automated generative AI-powered decision-support systems, businesses can efficiently address complex problems, investigate cyberattacks, and remediate vulnerabilities.”

From fine-tuned to custom foundation models, AWS ensures end-to-end coverage for the entire lifecycle of generative AI development and its real-world applications. This approach is a testament to AWS’s long-standing legacy in open AI/ML innovation. AWS is committed to further democratizing these advanced capabilities, making them accessible to businesses of all sizes.

In a rapidly evolving landscape where AI, including generative AI, quickly becomes ubiquitous, AWS not only strives to level the playing field, but also empowers others to do the same. AWS is setting a new standard for what is achievable in the world of generative AI.

Looking for a deeper dive into generative AI? We invite you to explore our comprehensive resource, The CxO Guide to Generative AI: Threats and Opportunities. The guide explores generative AI for enterprises, covering opportunities, risks, and key considerations, to help business leaders drive generative AI transformations.